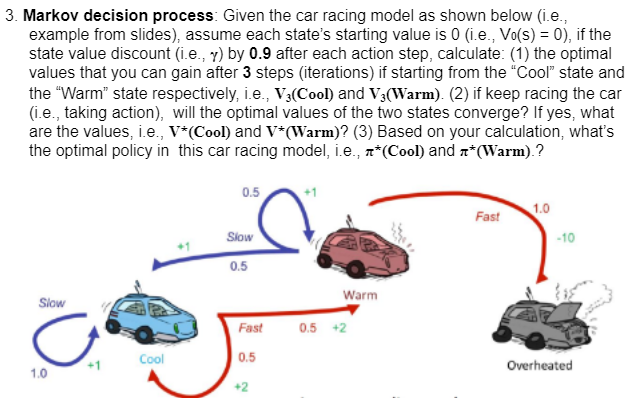

Question: Markov decision process. Given the car racing model shown in the figure (i.e., the example from the slides), assume each state's starting value is … (i.e., …), and that the state value is discounted (i.e., by …) after each action step. Calculate:

1. the optimal values you can gain after … steps (iterations) when starting from the "Cool" state and the "Warm" state, respectively (i.e., for Cool and Warm);
2. if you keep racing the car (i.e., keep taking actions …), whether the optimal values of the two states converge, and if so, what those values are (i.e., for Cool and Warm);
3. based on your calculation, the optimal policy in this car racing model (i.e., for Cool and Warm).
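A worked sketch of the value-iteration arithmetic, under stated assumptions. The figure is assumed to be the Berkeley CS188 racing MDP: from Cool, Slow stays in Cool with reward +1, and Fast moves to Cool or Warm with probability 0.5 each and reward +2. From Warm, Slow moves to Cool or Warm with probability 0.5 each and reward +1, and Fast moves to a terminal Overheated state with reward -10. The starting values are taken to be 0, and the discount γ is left symbolic because those numbers were lost from the question. Check all of this against the figure before relying on the results.

$$
\begin{aligned}
V_{k+1}(s) &= \max_{a} \sum_{s'} T(s,a,s')\bigl[R(s,a,s') + \gamma V_k(s')\bigr] \\
V_1(\text{Cool}) &= \max\{1,\ 2\} = 2, \qquad V_1(\text{Warm}) = \max\{1,\ -10\} = 1 \\
V_2(\text{Cool}) &= \max\{1 + 2\gamma,\ 2 + \gamma(0.5 \cdot 2 + 0.5 \cdot 1)\} = 2 + 1.5\gamma \\
V_2(\text{Warm}) &= \max\{1 + \gamma(0.5 \cdot 2 + 0.5 \cdot 1),\ -10\} = 1 + 1.5\gamma \\
V^*(\text{Warm}) &= \frac{1 + \gamma/2}{1 - \gamma}, \qquad V^*(\text{Cool}) = V^*(\text{Warm}) + 1 = \frac{2 - \gamma/2}{1 - \gamma} \quad (0 \le \gamma < 1)
\end{aligned}
$$

The last line solves the Bellman equations with Fast in Cool and Slow in Warm. Under these assumptions, Fast beats Slow in Cool by $1 - \gamma/2 > 0$, and Slow beats Fast in Warm, so that pair is the optimal policy. With γ = 1 the values never converge, because even always choosing Slow earns at least +1 per step.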

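The same computation can be sketched as value iteration in Python. The transition model in `TRANSITIONS` is an assumption: it follows the Berkeley CS188 racing example and should be replaced if the figure differs. The starting values of 0 and the discount `gamma` are also assumptions, since the question's numbers were lost. `value_iteration` runs a fixed number of sweeps when `steps` is given, and otherwise sweeps until the largest change falls below `tol`. `greedy_policy` reads off the best action in each non-terminal state.

```python
# Value iteration on a car-racing MDP.
# ASSUMPTION: transitions and rewards follow the Berkeley CS188 racing example;
# edit TRANSITIONS if the model in the figure differs.

# TRANSITIONS[state][action] = list of (probability, next_state, reward)
TRANSITIONS = {
    "Cool": {
        "Slow": [(1.0, "Cool", 1.0)],
        "Fast": [(0.5, "Cool", 2.0), (0.5, "Warm", 2.0)],
    },
    "Warm": {
        "Slow": [(0.5, "Cool", 1.0), (0.5, "Warm", 1.0)],
        "Fast": [(1.0, "Overheated", -10.0)],
    },
    "Overheated": {},  # terminal: no actions, value stays 0
}


def q_value(values, state, action, gamma):
    """Expected immediate reward plus discounted value of the successor states."""
    return sum(p * (r + gamma * values[nxt])
               for p, nxt, r in TRANSITIONS[state][action])


def bellman_update(values, gamma):
    """One synchronous sweep: V_{k+1}(s) = max over actions of Q_k(s, a)."""
    new_values = {}
    for state, actions in TRANSITIONS.items():
        if not actions:  # terminal state
            new_values[state] = 0.0
        else:
            new_values[state] = max(q_value(values, state, a, gamma) for a in actions)
    return new_values


def value_iteration(gamma, steps=None, tol=1e-10, max_steps=100_000):
    """Run `steps` sweeps, or sweep until the largest change is below `tol`."""
    values = {s: 0.0 for s in TRANSITIONS}  # ASSUMPTION: V_0(s) = 0
    for _ in range(steps if steps is not None else max_steps):
        new_values = bellman_update(values, gamma)
        delta = max(abs(new_values[s] - values[s]) for s in values)
        values = new_values
        if steps is None and delta < tol:
            break
    return values


def greedy_policy(values, gamma):
    """Pick the action with the highest Q-value in each non-terminal state."""
    return {s: max(acts, key=lambda a: q_value(values, s, a, gamma))
            for s, acts in TRANSITIONS.items() if acts}


if __name__ == "__main__":
    gamma = 0.9  # HYPOTHETICAL discount; the question's value was lost
    print("after 2 steps:", value_iteration(gamma, steps=2))
    v_star = value_iteration(gamma)
    print("converged:", v_star)
    print("policy:", greedy_policy(v_star, gamma))
```

Convergence is only guaranteed for `gamma < 1`. With `gamma = 1` the loop stops at `max_steps` and the values keep growing, which matches the closed-form behavior of this assumed model.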