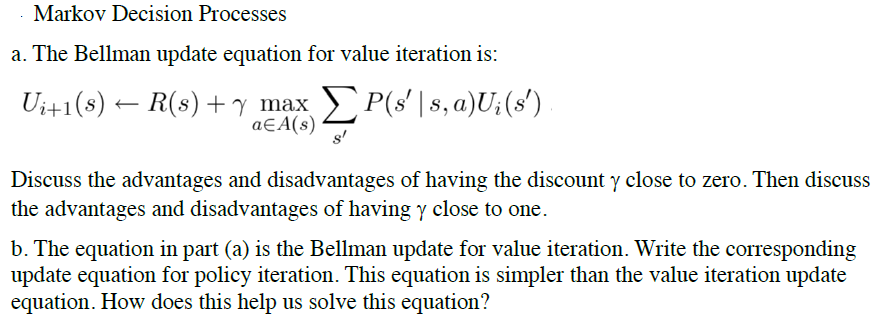


Question: Markov Decision Processes

a. The Bellman update equation for value iteration is:

$$U_{i+1}(s) \leftarrow R(s) + \gamma \max_{a \in A(s)} \sum_{s'} P(s' \mid s, a)\, U_i(s')$$

Discuss the advantages and disadvantages of having the discount $\gamma$ close to zero. Then discuss the advantages and disadvantages of having $\gamma$ close to one.

b. The equation in part (a) is the Bellman update for value iteration. Write the corresponding update equation for policy iteration.

c. The policy iteration equation is simpler than the value iteration equation. How does this help us solve this equation?
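To make the discount trade-off in part (a) concrete, here is a minimal Python sketch of value iteration, assuming the standard $R(s)$-style update $U_{i+1}(s) \leftarrow R(s) + \gamma \max_{a \in A(s)} \sum_{s'} P(s' \mid s, a)\, U_i(s')$. The four-state MDP (`TOY_MDP`, `TOY_REWARDS`) and all helper names are hypothetical illustrations, not part of the question. It runs the update once with $\gamma$ near zero and once with $\gamma$ near one, then reports the greedy action in `start` and how many sweeps it took for the largest change in any $U(s)$ to drop below `eps`.

```python
from typing import Dict, List, Tuple

# transitions[s][a] lists (probability, next_state) pairs; rewards[s] is R(s).
Transitions = Dict[str, Dict[str, List[Tuple[float, str]]]]

# Hypothetical toy MDP (not from the question): from "start", "quick" leads to a
# state paying 1 per step forever; "slow" goes through "mid" to a state paying 10 per step.
TOY_MDP: Transitions = {
    "start": {"quick": [(1.0, "loop")], "slow": [(1.0, "mid")]},
    "loop": {"stay": [(1.0, "loop")]},
    "mid": {"go": [(1.0, "big")]},
    "big": {"stay": [(1.0, "big")]},
}
TOY_REWARDS = {"start": 0.0, "loop": 1.0, "mid": 0.0, "big": 10.0}


def expected_utility(outcomes, U):
    """Sum of P(s'|s,a) * U(s') over the listed outcomes of one action."""
    return sum(p * U[s2] for p, s2 in outcomes)


def value_iteration(transitions, rewards, gamma, eps=1e-6, max_iters=100_000):
    """Apply the Bellman update to every state until the largest change is below eps.

    Returns (utilities, number_of_sweeps). The eps stopping rule is an
    illustrative choice, not part of the original question.
    """
    U = {s: 0.0 for s in transitions}
    for i in range(1, max_iters + 1):
        U_next = {
            s: rewards[s] + gamma * max(expected_utility(o, U) for o in acts.values())
            for s, acts in transitions.items()
        }
        delta = max(abs(U_next[s] - U[s]) for s in U)
        U = U_next
        if delta < eps:
            return U, i
    return U, max_iters


def greedy_policy(transitions, U):
    """In each state, choose the action with the highest expected next-state utility."""
    return {
        s: max(acts, key=lambda a: expected_utility(acts[a], U))
        for s, acts in transitions.items()
    }


for gamma in (0.05, 0.9):
    U, sweeps = value_iteration(TOY_MDP, TOY_REWARDS, gamma)
    print(f"gamma={gamma}: start -> {greedy_policy(TOY_MDP, U)['start']}, sweeps={sweeps}")
```

With $\gamma = 0.05$ the delayed reward of 10 is discounted so heavily that the greedy action in `start` is `quick`, and because each sweep shrinks the error by roughly a factor of $\gamma$, the loop stops after only a few sweeps. With $\gamma = 0.9$ the agent prefers `slow`, but convergence needs many more sweeps since the per-sweep contraction is only 0.9.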

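For parts (b) and (c), one way to see why the policy-iteration form is simpler: once a policy $\pi$ is fixed, the update becomes $U_{i+1}(s) \leftarrow R(s) + \gamma \sum_{s'} P(s' \mid s, \pi(s))\, U_i(s')$ with no $\max$, so its fixed point is the linear system $(I - \gamma P_\pi)\,U = R$ and can be solved exactly. The sketch below assumes the same kind of hypothetical toy MDP (`TOY_MDP`, `TOY_REWARDS`, and every function name are illustrative, not from the question) and solves each policy evaluation with plain Gauss-Jordan elimination before greedily improving the policy.

```python
from typing import Dict, List, Tuple

# transitions[s][a] lists (probability, next_state) pairs; rewards[s] is R(s).
Transitions = Dict[str, Dict[str, List[Tuple[float, str]]]]

# Hypothetical toy MDP, used only for illustration.
TOY_MDP: Transitions = {
    "start": {"quick": [(1.0, "loop")], "slow": [(1.0, "mid")]},
    "loop": {"stay": [(1.0, "loop")]},
    "mid": {"go": [(1.0, "big")]},
    "big": {"stay": [(1.0, "big")]},
}
TOY_REWARDS = {"start": 0.0, "loop": 1.0, "mid": 0.0, "big": 10.0}


def evaluate_policy(transitions, rewards, policy, gamma):
    """Solve U(s) = R(s) + gamma * sum_{s'} P(s'|s, pi(s)) U(s') exactly.

    With pi fixed there is no max, so this is the linear system
    (I - gamma * P_pi) U = R, solved by Gauss-Jordan elimination.
    """
    states = list(transitions)
    idx = {s: k for k, s in enumerate(states)}
    n = len(states)
    # Augmented matrix [I - gamma * P_pi | R].
    A = [[0.0] * (n + 1) for _ in range(n)]
    for s in states:
        row = idx[s]
        A[row][row] += 1.0
        for p, s2 in transitions[s][policy[s]]:
            A[row][idx[s2]] -= gamma * p
        A[row][n] = rewards[s]
    for col in range(n):
        # Partial pivoting: bring the largest entry in this column to the diagonal.
        pivot = max(range(col, n), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(n):
            if r != col:
                factor = A[r][col] / A[col][col]
                for c in range(col, n + 1):
                    A[r][c] -= factor * A[col][c]
    # The matrix is now diagonal; row k holds the pivot for variable k.
    return {s: A[idx[s]][n] / A[idx[s]][idx[s]] for s in states}


def policy_iteration(transitions, rewards, gamma):
    """Alternate exact policy evaluation with greedy policy improvement."""
    policy = {s: next(iter(acts)) for s, acts in transitions.items()}
    while True:
        U = evaluate_policy(transitions, rewards, policy, gamma)
        unchanged = True
        for s, acts in transitions.items():
            value = {a: sum(p * U[s2] for p, s2 in acts[a]) for a in acts}
            best = max(value, key=value.get)
            # Switch only on a strict improvement, so the loop terminates.
            if value[best] > value[policy[s]] + 1e-12:
                policy[s] = best
                unchanged = False
        if unchanged:
            return policy, U


policy, U = policy_iteration(TOY_MDP, TOY_REWARDS, 0.9)
print(policy["start"], round(U["start"], 6))
```

The design point is that each evaluation step is a single linear solve (cubic in the number of states here), rather than an open-ended sequence of sweeps, and the outer loop usually needs only a few policy improvements.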