Question: Matlab code needed, please. Using Matlab, create a multi-layer perceptron with 3 layers: input layer, hidden layer, output layer (using a sigmoid function). Define the

Matlab code needed please

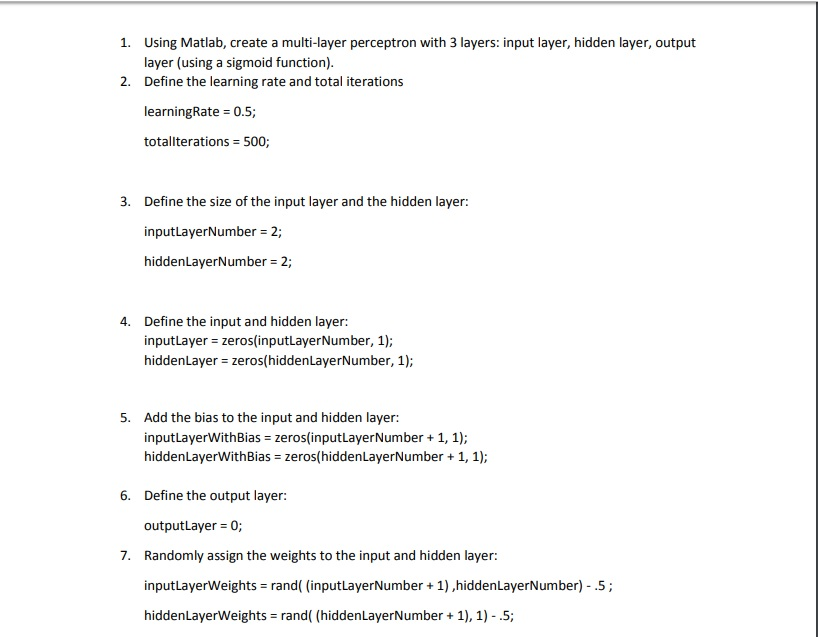

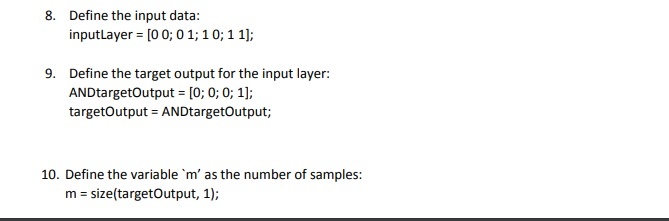

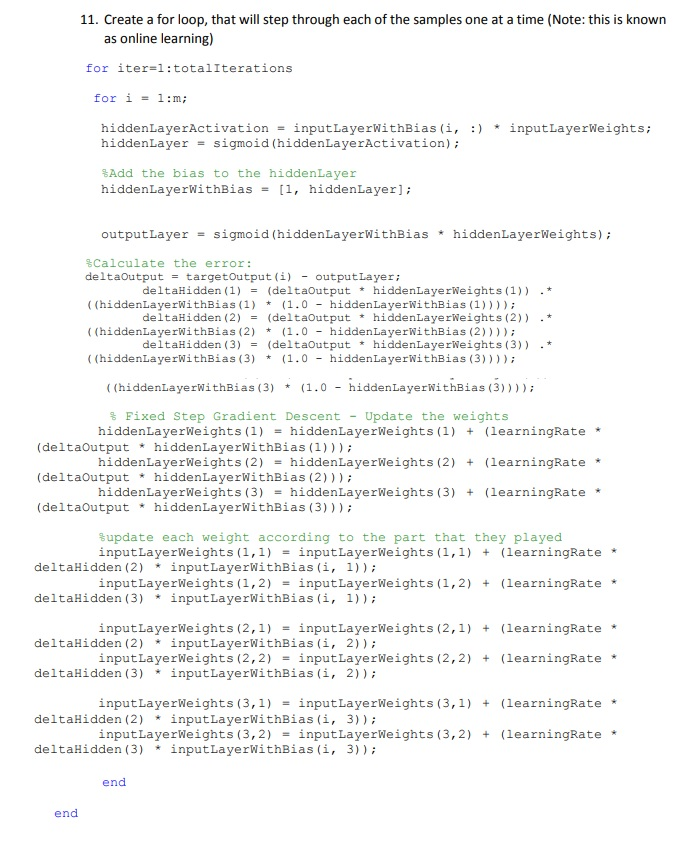

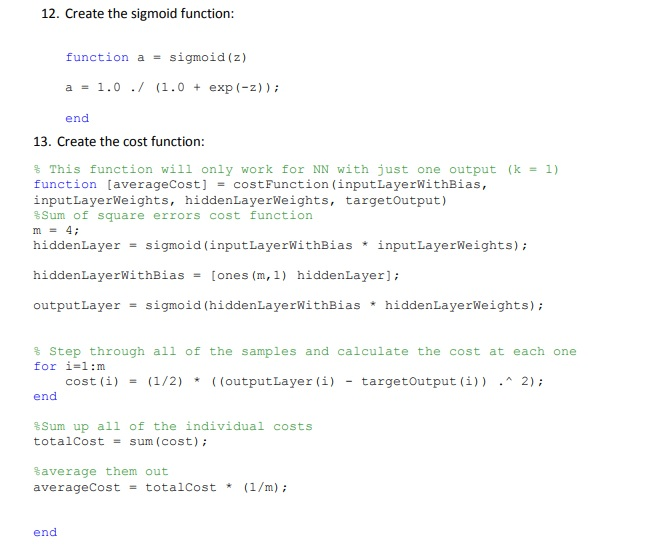

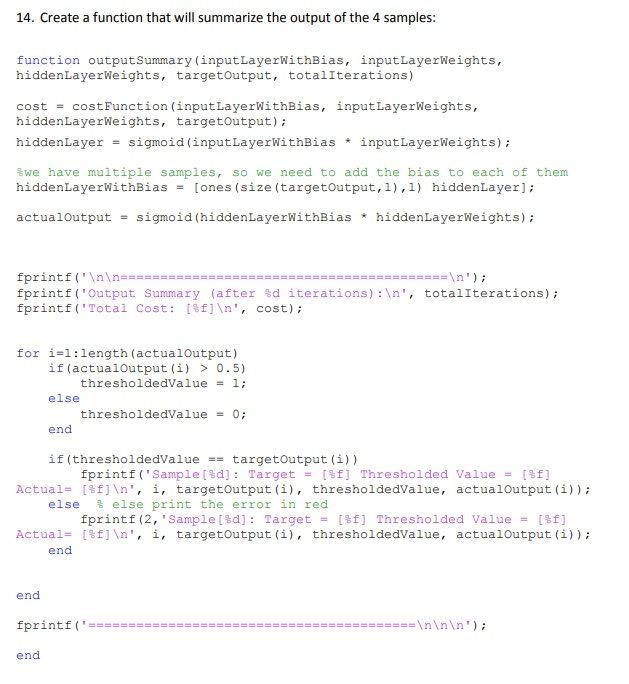

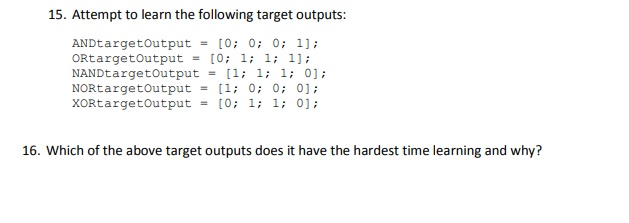

Using Matlab, create a multi-layer perceptron with 3 layers: input layer, hidden layer, output layer (using a sigmoid function).

1. Define the learning rate and total iterations:

        learningRate = 0.5;
        totalIterations = 500;

2. Define the size of the input layer and the hidden layer:

        inputLayerNumber = 2;
        hiddenLayerNumber = 2;

3. Define the input and hidden layer:

        inputLayer = zeros(inputLayerNumber, 1);
        hiddenLayer = zeros(hiddenLayerNumber, 1);

4. Add the bias to the input and hidden layer:

        inputLayerWithBias = zeros(inputLayerNumber + 1, 1);
        hiddenLayerWithBias = zeros(hiddenLayerNumber + 1, 1);

6. Define the output layer:

        outputLayer = 0;

7. Randomly assign the weights to the input and hidden layer:

        inputLayerWeights = rand((inputLayerNumber + 1), hiddenLayerNumber) - .5;
        hiddenLayerWeights = rand((hiddenLayerNumber + 1), 1) - .5;

8. Define the input data:

        inputLayer = [0 0; 0 1; 1 0; 1 1];

9. Define the target output for the input layer:

        ANDtargetOutput = [0; 0; 0; 1];
        targetOutput = ANDtargetOutput;

10. Define the variable 'm' as the number of samples:

        m = size(targetOutput, 1);

11. Create a for loop that will step through each of the samples one at a time (Note: this is known as online learning):

        for iter = 1:totalIterations
            for i = 1:m
                hiddenLayerActivation = inputLayerWithBias(i, :) * inputLayerWeights;
                hiddenLayer = sigmoid(hiddenLayerActivation);
                % Add the bias to the hiddenLayer
                hiddenLayerWithBias = [1, hiddenLayer];
                outputLayer = sigmoid(hiddenLayerWithBias * hiddenLayerWeights);

                % calculate the error:
                deltaOutput = targetOutput(i) - outputLayer;
                deltaHidden(1) = (deltaOutput * hiddenLayerWeights(1)) .* ((hiddenLayerWithBias(1) * (1.0 - hiddenLayerWithBias(1))));
                deltaHidden(2) = (deltaOutput * hiddenLayerWeights(2)) .* ((hiddenLayerWithBias(2) * (1.0 - hiddenLayerWithBias(2))));
                deltaHidden(3) = (deltaOutput * hiddenLayerWeights(3)) .* ((hiddenLayerWithBias(3) * (1.0 - hiddenLayerWithBias(3))));

                % Fixed Step Gradient Descent - Update the weights
                hiddenLayerWeights(1) = hiddenLayerWeights(1) + (learningRate * (deltaOutput * hiddenLayerWithBias(1)));
                hiddenLayerWeights(2) = hiddenLayerWeights(2) + (learningRate * (deltaOutput * hiddenLayerWithBias(2)));
                hiddenLayerWeights(3) = hiddenLayerWeights(3) + (learningRate * (deltaOutput * hiddenLayerWithBias(3)));

                % update each weight according to the part that they played
                inputLayerWeights(1,1) = inputLayerWeights(1,1) + (learningRate * deltaHidden(2) * inputLayerWithBias(i, 1));
                inputLayerWeights(1,2) = inputLayerWeights(1,2) + (learningRate * deltaHidden(3) * inputLayerWithBias(i, 1));
                inputLayerWeights(2,1) = inputLayerWeights(2,1) + (learningRate * deltaHidden(2) * inputLayerWithBias(i, 2));
                inputLayerWeights(2,2) = inputLayerWeights(2,2) + (learningRate * deltaHidden(3) * inputLayerWithBias(i, 2));
                inputLayerWeights(3,1) = inputLayerWeights(3,1) + (learningRate * deltaHidden(2) * inputLayerWithBias(i, 3));
                inputLayerWeights(3,2) = inputLayerWeights(3,2) + (learningRate * deltaHidden(3) * inputLayerWithBias(i, 3));
            end
        end

12. Create the sigmoid function:

        function a = sigmoid(z)
            a = 1.0 ./ (1.0 + exp(-z));
        end

13. Create the cost function:

        % This function will only work for NN with just one output (k = 1)
        function [averageCost] = costFunction(inputLayerWithBias, inputLayerWeights, hiddenLayerWeights, targetOutput)
            % sum of square errors cost function
            m = 4;
            hiddenLayer = sigmoid(inputLayerWithBias * inputLayerWeights);
            hiddenLayerWithBias = [ones(m, 1) hiddenLayer];
            outputLayer = sigmoid(hiddenLayerWithBias * hiddenLayerWeights);
            % step through all of the samples and calculate the cost at each one
            for i = 1:m
                cost(i) = (1/2) * ((outputLayer(i) - targetOutput(i)) .^ 2);
            end
            % sum up all of the individual costs
            totalCost = sum(cost);
            % average them out
            averageCost = totalCost * (1/m);
        end

14. Create a function that will summarize the output of the 4 samples:

        function outputSummary(inputLayerWithBias, inputLayerWeights, hiddenLayerWeights, targetOutput, totalIterations)
            cost = costFunction(inputLayerWithBias, inputLayerWeights, hiddenLayerWeights, targetOutput);
            hiddenLayer = sigmoid(inputLayerWithBias * inputLayerWeights);
            % we have multiple samples, so we need to add the bias to each of them
            hiddenLayerWithBias = [ones(size(targetOutput, 1), 1) hiddenLayer];
            actualOutput = sigmoid(hiddenLayerWithBias * hiddenLayerWeights);
            fprintf('\n');
            fprintf('Output Summary (after %d iterations):\n', totalIterations);
            fprintf('Total Cost: [%f]\n', cost);
            for i = 1:length(actualOutput)
                if (actualOutput(i) > 0.5)
                    thresholdedValue = 1;
                else
                    thresholdedValue = 0;
                end
                if (thresholdedValue == targetOutput(i))
                    fprintf('Sample[%d]: Target = [%f] Thresholded Value = [%f] Actual= [%f]\n', i, targetOutput(i), thresholdedValue, actualOutput(i));
                else % else print the error in red
                    fprintf(2, 'Sample[%d]: Target = [%f] Thresholded Value = [%f] Actual= [%f]\n', i, targetOutput(i), thresholdedValue, actualOutput(i));
                end
            end
            fprintf('\n');
        end

15. Attempt to learn the following target outputs:

        ANDtargetOutput = [0; 0; 0; 1];
        ORtargetOutput = [0; 1; 1; 1];
        NANDtargetOutput = [1; 1; 1; 0];
        NORtargetOutput = [1; 0; 0; 0];
        XORtargetOutput = [0; 1; 1; 0];

16. Which of the above target outputs does it have the hardest time learning, and why?
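
The steps above can be tied together in one script. What follows is a minimal sketch, not the assignment's reference solution. It makes two assumptions that the question does not state: the bias column is prepended to the input data as `[ones(m, 1) inputLayer]` (the loop indexes `inputLayerWithBias(i, :)`, so something of this shape seems to be needed), and the per-element weight updates are written in an equivalent vectorized form. The names `x`, `names`, `targets`, `hiddenAll`, and `averageCost` are hypothetical helpers, and `sigmoid` is defined as an anonymous function so the script needs no separate function file.

```matlab
% Sketch only: trains the 2-2-1 network once for each target set.
% ASSUMPTION (not in the original steps): the bias column is prepended
% to the input data as [ones(m, 1) inputLayer].
sigmoid = @(z) 1.0 ./ (1.0 + exp(-z));

learningRate = 0.5;
totalIterations = 500;
inputLayerNumber = 2;
hiddenLayerNumber = 2;

inputLayer = [0 0; 0 1; 1 0; 1 1];
m = size(inputLayer, 1);
inputLayerWithBias = [ones(m, 1) inputLayer];   % assumed step

% One column per target set, in the order given by 'names' (hypothetical)
names = {'AND', 'OR', 'NAND', 'NOR', 'XOR'};
targets = [0 0 1 1 0;
           0 1 1 0 1;
           0 1 1 0 1;
           1 1 0 0 0];

averageCost = zeros(1, numel(names));
for t = 1:numel(names)
    targetOutput = targets(:, t);
    % Fresh random weights in [-0.5, 0.5) for every target set
    inputLayerWeights = rand(inputLayerNumber + 1, hiddenLayerNumber) - 0.5;
    hiddenLayerWeights = rand(hiddenLayerNumber + 1, 1) - 0.5;

    for iter = 1:totalIterations
        for i = 1:m   % online learning: one sample at a time
            x = inputLayerWithBias(i, :);
            hiddenLayerWithBias = [1, sigmoid(x * inputLayerWeights)];
            outputLayer = sigmoid(hiddenLayerWithBias * hiddenLayerWeights);

            % Error terms, computed before any weight is changed
            deltaOutput = targetOutput(i) - outputLayer;
            deltaHidden = (deltaOutput * hiddenLayerWeights') .* ...
                (hiddenLayerWithBias .* (1.0 - hiddenLayerWithBias));

            % Fixed-step gradient descent; deltaHidden(1) belongs to the
            % hidden bias unit and has no incoming weights, so it is skipped
            hiddenLayerWeights = hiddenLayerWeights + ...
                learningRate * deltaOutput * hiddenLayerWithBias';
            inputLayerWeights = inputLayerWeights + ...
                learningRate * x' * deltaHidden(2:end);
        end
    end

    % Average sum-of-squares cost over the four samples
    hiddenAll = [ones(m, 1) sigmoid(inputLayerWithBias * inputLayerWeights)];
    actualOutput = sigmoid(hiddenAll * hiddenLayerWeights);
    averageCost(t) = sum(0.5 * (actualOutput - targetOutput) .^ 2) / m;
    fprintf('%-4s average cost after %d iterations: %f\n', ...
        names{t}, totalIterations, averageCost(t));
end
```

Because the weights are random, the printed costs differ from run to run. Of the five targets, XOR is the only one that is not linearly separable, so it is the one that actually depends on the hidden layer; it would be reasonable to expect it to show the highest cost after 500 iterations, and sometimes to fail to converge at all.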
