Question: Machine Learning, Exercise 3.6 [Cross-entropy error measure] from Section 3.3 (Logistic Regression) of *Learning From Data*

Machine Learning

This is from Section 3.3, Logistic Regression, of *Learning From Data*.

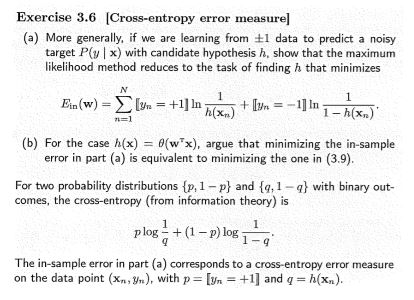

Exercise 3.6 [Cross-entropy error measure]

(a) More generally, if we are learning from data to predict a noisy target $P(y \mid \mathbf{x})$ with candidate hypothesis $h$, show that the maximum likelihood method reduces to the task of finding $h$ that minimizes

$$E_{\text{in}}(\mathbf{w}) = \sum_{n=1}^{N} [\![y_n = +1]\!] \ln\frac{1}{h(\mathbf{x}_n)} + [\![y_n = -1]\!] \ln\frac{1}{1 - h(\mathbf{x}_n)}.$$

(b) For the case $h(\mathbf{x}) = \theta(\mathbf{w}^{\mathsf T}\mathbf{x})$, argue that minimizing the in-sample error in part (a) is equivalent to minimizing the one in (3.9).

For two probability distributions $\{p, 1-p\}$ and $\{q, 1-q\}$ with binary outcomes, the cross-entropy (from information theory) is

$$p \log\frac{1}{q} + (1-p) \log\frac{1}{1-q}.$$

The in-sample error in part (a) corresponds to a cross-entropy error measure on the data point $(\mathbf{x}_n, y_n)$, with $p = [\![y_n = +1]\!]$ and $q = h(\mathbf{x}_n)$.
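One possible derivation for both parts is sketched below. It assumes the data points $(\mathbf{x}_n, y_n)$ are generated independently, writes the target as $P(y \mid \mathbf{x}) = h(\mathbf{x})$ for $y = +1$ and $1 - h(\mathbf{x})$ for $y = -1$, and takes (3.9) to be the book's logistic-regression error $E_{\text{in}}(\mathbf{w}) = \frac{1}{N}\sum_{n=1}^{N} \ln\bigl(1 + e^{-y_n \mathbf{w}^{\mathsf T}\mathbf{x}_n}\bigr)$. For part (a), $\ln$ is monotonic, so maximizing the likelihood is the same as minimizing the negative log-likelihood:

$$
\begin{aligned}
\arg\max_h \prod_{n=1}^{N} P(y_n \mid \mathbf{x}_n)
&= \arg\min_h \sum_{n=1}^{N} \ln\frac{1}{P(y_n \mid \mathbf{x}_n)} \\
&= \arg\min_h \sum_{n=1}^{N} [\![y_n = +1]\!] \ln\frac{1}{h(\mathbf{x}_n)} + [\![y_n = -1]\!] \ln\frac{1}{1 - h(\mathbf{x}_n)}.
\end{aligned}
$$

For part (b), with $\theta(s) = \frac{e^s}{1 + e^s}$ we have $1 - \theta(s) = \theta(-s)$ and $\ln\frac{1}{\theta(s)} = \ln(1 + e^{-s})$, so for either label

$$
[\![y_n = +1]\!] \ln\frac{1}{\theta(\mathbf{w}^{\mathsf T}\mathbf{x}_n)} + [\![y_n = -1]\!] \ln\frac{1}{\theta(-\mathbf{w}^{\mathsf T}\mathbf{x}_n)} = \ln\bigl(1 + e^{-y_n \mathbf{w}^{\mathsf T}\mathbf{x}_n}\bigr).
$$

Summing over $n$, the part (a) error is exactly $N$ times the error in (3.9), and multiplying by the positive constant $N$ does not change which $\mathbf{w}$ minimizes it.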

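The equivalence in part (b) can also be checked numerically. The following is a minimal Python sketch under stated assumptions: it takes (3.9) to be the averaged logistic error $\frac{1}{N}\sum_n \ln(1 + e^{-y_n \mathbf{w}^{\mathsf T}\mathbf{x}_n})$, uses natural logarithms, and runs on random synthetic data (not from the book). The helper names (`theta`, `cross_entropy`, `e_in_cross_entropy`, `e_in_logistic`) are illustrative only.

```python
import math
import random

# Helper names below are illustrative, not taken from the book.

def theta(s: float) -> float:
    """Logistic function theta(s) = e^s / (1 + e^s), in a numerically stable form."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    z = math.exp(s)
    return z / (1.0 + z)

def dot(w, x) -> float:
    """Inner product w^T x for plain Python lists."""
    return sum(wi * xi for wi, xi in zip(w, x))

def cross_entropy(p: float, q: float) -> float:
    """Binary cross-entropy p*ln(1/q) + (1-p)*ln(1/(1-q)); zero-weight terms are skipped."""
    total = 0.0
    if p > 0:
        total += p * math.log(1.0 / q)
    if p < 1:
        total += (1 - p) * math.log(1.0 / (1.0 - q))
    return total

def e_in_cross_entropy(w, X, y) -> float:
    """Part (a) error: sum of cross-entropies with p = [[y_n = +1]] and q = theta(w^T x_n)."""
    return sum(
        cross_entropy(1.0 if yn == +1 else 0.0, theta(dot(w, xn)))
        for xn, yn in zip(X, y)
    )

def e_in_logistic(w, X, y) -> float:
    """Assumed form of (3.9): (1/N) * sum of ln(1 + exp(-y_n w^T x_n))."""
    return sum(math.log1p(math.exp(-yn * dot(w, xn))) for xn, yn in zip(X, y)) / len(X)

# Synthetic data: x_0 = 1 is the bias coordinate, labels are random +/-1.
random.seed(0)
N, d = 20, 3
X = [[1.0] + [random.uniform(-1, 1) for _ in range(d)] for _ in range(N)]
y = [random.choice([-1, +1]) for _ in range(N)]
w = [random.gauss(0, 1) for _ in range(d + 1)]

ce = e_in_cross_entropy(w, X, y)
lg = e_in_logistic(w, X, y)
print(ce, N * lg)  # the two numbers should agree up to floating-point rounding
```

Because the two errors differ only by the constant factor $N$, any optimizer (for example gradient descent on $\mathbf{w}$) would reach the same minimizer with either one; the averaged form is simply easier to compare across data sets of different sizes.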