Minimizing lateness problem: Scheduling to minimize lateness

Specifications:

Single resource processes one job at a time.

Job $j$ requires $t_j$ units of processing time and is due at time $d_j$.

If $j$ starts at time $s_j$, it finishes at time $f_j = s_j + t_j$.

Lateness: $\ell_j = \max\{0,\ f_j - d_j\}$.

Goal: Schedule all jobs to minimize maximum lateness $L = \max_j \ell_j$.
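The definitions above translate directly into a small evaluation routine. This is a minimal Python sketch, assuming each job is given as a hypothetical `(t_j, d_j)` pair (processing time, deadline) and that jobs run back to back from time 0 with no idle time. The three-job instance is made up for illustration.

```python
from typing import List, Sequence, Tuple

Job = Tuple[int, int]  # hypothetical encoding: (processing time t_j, deadline d_j)


def max_lateness(order: Sequence[int], jobs: List[Job]) -> int:
    """Return L = max_j l_j when jobs run back to back in `order`, starting at time 0."""
    time = 0   # current time; the next job starts at s_j = time
    worst = 0  # largest lateness seen so far
    for j in order:
        t_j, d_j = jobs[j]
        time += t_j                     # f_j = s_j + t_j
        lateness = max(0, time - d_j)   # l_j = max{0, f_j - d_j}
        worst = max(worst, lateness)
    return worst


# Made-up instance: three jobs as (t_j, d_j).
jobs = [(3, 4), (2, 3), (1, 2)]
print(max_lateness([0, 1, 2], jobs))  # → 4
print(max_lateness([2, 1, 0], jobs))  # → 2
```

The same jobs give different maximum lateness depending only on the order, which is why the choice of greedy ordering rule matters.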

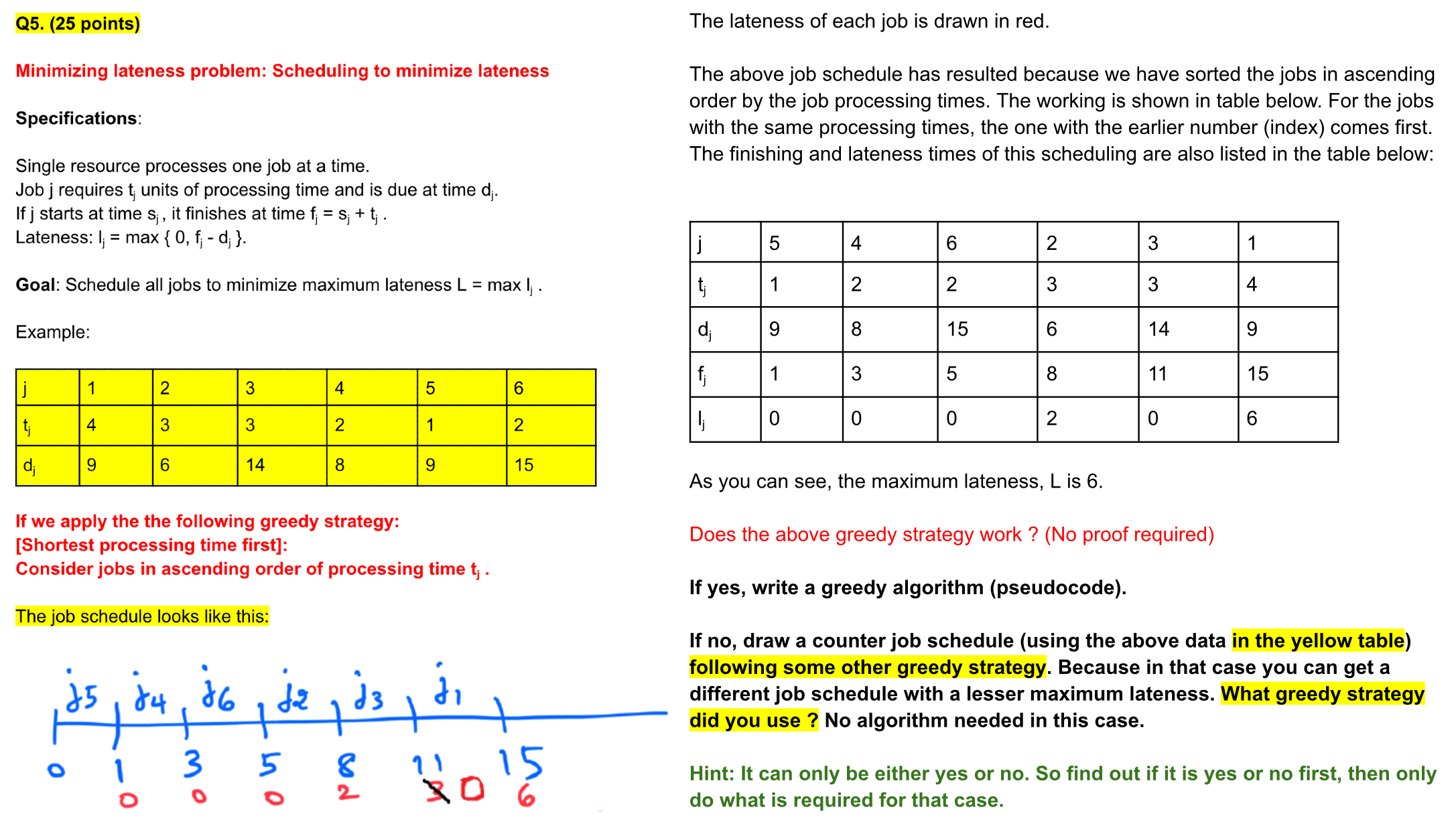

Example:

If we apply the following greedy strategy:

Shortest processing time first:

Consider jobs in ascending order of processing time

The job schedule looks like this:

The lateness of each job is drawn in red.

The above job schedule results from sorting the jobs in ascending order of processing time. The working is shown in the table below. For jobs with the same processing time, the one with the smaller index comes first.

The finish times and lateness of this schedule are also listed in the table below:
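The shortest-processing-time-first rule, with ties broken by job index, can be sketched as follows. This is a minimal Python sketch, assuming hypothetical `(t_j, d_j)` pairs and back-to-back execution from time 0; it produces one row per job (index, start, finish, lateness), mirroring the table. The instance below is made up and is not the yellow-table data.

```python
from typing import List, Tuple

Job = Tuple[int, int]            # hypothetical encoding: (processing time t_j, deadline d_j)
Row = Tuple[int, int, int, int]  # (job index, start s_j, finish f_j, lateness l_j)


def spt_schedule(jobs: List[Job]) -> List[Row]:
    """Shortest processing time first; equal t_j keeps the smaller index first."""
    # Sorting on (t_j, j) puts jobs with equal processing time in index order.
    order = sorted(range(len(jobs)), key=lambda j: (jobs[j][0], j))
    rows: List[Row] = []
    time = 0
    for j in order:
        t_j, d_j = jobs[j]
        start = time
        time += t_j  # finish time f_j = s_j + t_j
        rows.append((j, start, time, max(0, time - d_j)))
    return rows


# Made-up instance: (t_j, d_j) pairs for jobs 1, 2, 3.
jobs = [(2, 5), (1, 6), (2, 3)]
rows = spt_schedule(jobs)
for j, s, f, late in rows:
    print(f"job {j + 1}: start {s}, finish {f}, lateness {late}")
print("max lateness:", max(row[3] for row in rows))
# Prints:
# job 2: start 0, finish 1, lateness 0
# job 1: start 1, finish 3, lateness 0
# job 3: start 3, finish 5, lateness 2
# max lateness: 2
```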

As you can see, the maximum lateness, $L$, is

Does the above greedy strategy work? (No proof required.)

If yes, write the greedy algorithm in pseudocode.

If no, draw a counterexample job schedule from the data in the yellow table above, following some other greedy strategy; in that case you can obtain a different job schedule with a smaller maximum lateness. Which greedy strategy did you use? (No algorithm is needed in this case.)

Hint: The answer can only be yes or no, so determine which it is first, then do only what is required for that case.
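A quick way to test a candidate answer is to compare orderings on a tiny instance. The sketch below uses a made-up two-job instance (not the yellow-table data) in which the short job has a very loose deadline, and compares shortest processing time first (SPT) with earliest deadline first (EDF, sorting by $d_j$). EDF is the ordering known to minimize maximum lateness on a single resource; the `(t_j, d_j)` encoding and function names are assumptions for illustration.

```python
from typing import List, Sequence, Tuple

Job = Tuple[int, int]  # hypothetical encoding: (processing time t_j, deadline d_j)


def max_lateness(order: Sequence[int], jobs: List[Job]) -> int:
    """Maximum lateness when jobs run back to back from time 0 in `order`."""
    time, worst = 0, 0
    for j in order:
        t_j, d_j = jobs[j]
        time += t_j                            # f_j = s_j + t_j
        worst = max(worst, max(0, time - d_j))  # l_j = max{0, f_j - d_j}
    return worst


def spt(jobs: List[Job]) -> List[int]:
    """Shortest processing time first, ties by index."""
    return sorted(range(len(jobs)), key=lambda j: (jobs[j][0], j))


def edf(jobs: List[Job]) -> List[int]:
    """Earliest deadline first, ties by index."""
    return sorted(range(len(jobs)), key=lambda j: (jobs[j][1], j))


# Made-up instance: job 1 is short but due late, job 2 is long and due early.
jobs = [(1, 100), (10, 10)]
print("SPT:", spt(jobs), max_lateness(spt(jobs), jobs))  # → SPT: [0, 1] 1
print("EDF:", edf(jobs), max_lateness(edf(jobs), jobs))  # → EDF: [1, 0] 0
```

Because SPT ignores deadlines entirely, it can push a long, urgent job past its due time while a short, non-urgent job runs first.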
