Question:

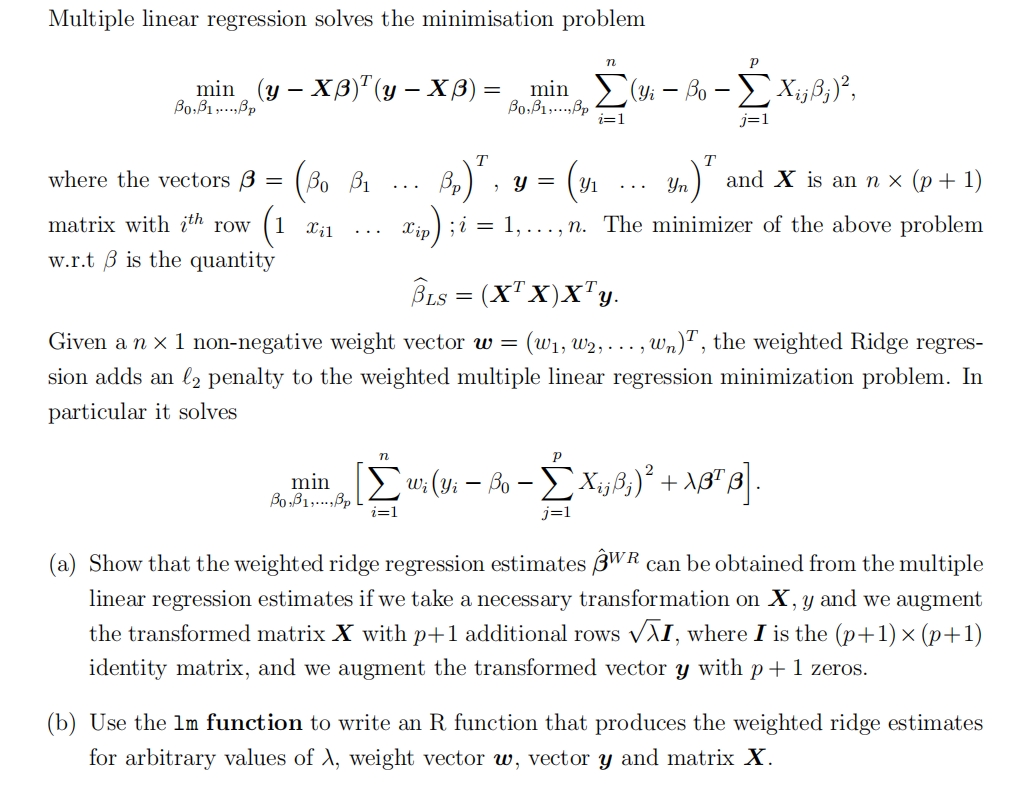

Multiple linear regression solves the minimisation problem

$$\min_{\beta_0,\beta_1,\ldots,\beta_p}\sum_{i=1}^n\Big(y_i-\beta_0-\sum_{j=1}^p x_{ij}\beta_j\Big)^2=\min_{\beta}\,(y-X\beta)^T(y-X\beta),$$

where $\beta=(\beta_0,\ldots,\beta_p)^T$, $y=(y_1,\ldots,y_n)^T$, and $X$ is an $n\times(p+1)$ matrix whose $i$th row is $(1, x_{i1},\ldots,x_{ip})$, $i=1,\ldots,n$. The minimizer of the above problem is $\hat\beta^{LS}=(X^TX)^{-1}X^Ty$.

Given an $n\times 1$ non-negative weight vector $w=(w_1,w_2,\ldots,w_n)^T$, weighted ridge regression adds an $\ell_2$ penalty to the weighted multiple linear regression minimization problem. In particular, it solves

$$\min_{\beta_0,\beta_1,\ldots,\beta_p}\sum_{i=1}^n w_i\Big(y_i-\beta_0-\sum_{j=1}^p x_{ij}\beta_j\Big)^2+\lambda\sum_{j=0}^p\beta_j^2.$$

(a) Show that the weighted ridge regression estimates $\hat\beta^{WR}$ can be obtained from the multiple linear regression estimates if we apply a suitable transformation to $X$ and $y$, augment the transformed matrix $X$ with $p+1$ additional rows $\sqrt{\lambda}\,I$, where $I$ is the $(p+1)\times(p+1)$ identity matrix, and augment the transformed vector $y$ with $p+1$ zeros.

(b) Use the `lm` function to write an R function that produces the weighted ridge estimates for arbitrary values of $\lambda$, weight vector $w$, vector $y$ and matrix $X$.
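A sketch of the argument for part (a), assuming $\lambda \ge 0$ and writing $W=\operatorname{diag}(w_1,\ldots,w_n)$ and $W^{1/2}=\operatorname{diag}(\sqrt{w_1},\ldots,\sqrt{w_n})$ (notation introduced here), with $X$ the $n\times(p+1)$ design matrix that includes the column of ones. The transformation multiplies each row of $X$ and each entry of $y$ by $\sqrt{w_i}$, and the augmentation then stacks the penalty rows underneath:

$$\tilde X = W^{1/2}X,\qquad \tilde y = W^{1/2}y,\qquad X^*=\begin{pmatrix}\tilde X\\ \sqrt{\lambda}\,I_{p+1}\end{pmatrix},\qquad y^*=\begin{pmatrix}\tilde y\\ 0_{p+1}\end{pmatrix}.$$

The least-squares objective on the augmented data splits into the weighted residual sum of squares plus the penalty:

$$(y^*-X^*\beta)^T(y^*-X^*\beta) = (y-X\beta)^TW(y-X\beta)+\lambda\beta^T\beta = \sum_{i=1}^n w_i\Big(y_i-\beta_0-\sum_{j=1}^p x_{ij}\beta_j\Big)^2+\lambda\sum_{j=0}^p\beta_j^2.$$

The two problems therefore have the same minimizer, and applying the least-squares formula to $(X^*,y^*)$ gives

$$\hat\beta^{WR} = (X^{*T}X^*)^{-1}X^{*T}y^* = (X^TWX+\lambda I_{p+1})^{-1}X^TWy.$$

For $\lambda>0$ the block $\sqrt{\lambda}\,I_{p+1}$ gives $X^*$ full column rank, so the inverse exists even when $X^TWX$ is singular.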

Step by Step Solution

To address the provided question, let's dissect each part separately. (a) Show that the weighted ridge re...
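For part (b), the question asks for an R function built on `lm`. Below is a sketch of the same augmented least-squares construction in Python with NumPy instead, a swapped-in language rather than the requested R answer. The function name `weighted_ridge` is hypothetical. `X` is assumed to exclude the column of ones, and, as in the problem statement, $\beta_0$ is penalised along with the slopes. The final `np.linalg.lstsq` call plays the role that a no-intercept fit such as `lm(y_aug ~ X_aug - 1)` would play in R, because the intercept column is already part of the augmented design.

```python
import numpy as np


def weighted_ridge(X, y, w, lam):
    """Weighted ridge estimates via augmented least squares (illustrative sketch).

    X   : (n, p) predictor matrix WITHOUT the column of ones
    y   : (n,) response vector
    w   : (n,) non-negative weights
    lam : non-negative penalty lambda
    Returns (beta_0, ..., beta_p); beta_0 is penalised, as in the problem.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    w = np.asarray(w, dtype=float)
    n, p = X.shape

    # Full design matrix: leading column of ones for the intercept beta_0.
    X1 = np.column_stack([np.ones(n), X])

    # Transformation: multiply row i of X1 and entry y_i by sqrt(w_i).
    sw = np.sqrt(w)
    X_tilde = X1 * sw[:, None]
    y_tilde = y * sw

    # Augmentation: p+1 rows sqrt(lam) * I below X_tilde, p+1 zeros below y_tilde.
    X_aug = np.vstack([X_tilde, np.sqrt(lam) * np.eye(p + 1)])
    y_aug = np.concatenate([y_tilde, np.zeros(p + 1)])

    # Ordinary least squares on the augmented data with no extra intercept
    # (the analogue of lm(y_aug ~ X_aug - 1) in R).
    beta, *_ = np.linalg.lstsq(X_aug, y_aug, rcond=None)
    return beta


# Usage: compare with the closed form (X1^T W X1 + lam I)^{-1} X1^T W y.
rng = np.random.default_rng(0)
n = 50
X = rng.normal(size=(n, 3))
y = 1.0 + X @ np.array([2.0, -1.0, 0.5]) + rng.normal(size=n)
w = rng.uniform(0.5, 2.0, size=n)
lam = 3.0

beta_aug = weighted_ridge(X, y, w, lam)
X1 = np.column_stack([np.ones(n), X])
W = np.diag(w)
beta_closed = np.linalg.solve(X1.T @ W @ X1 + lam * np.eye(4), X1.T @ W @ y)
print(np.allclose(beta_aug, beta_closed))  # True
```

Setting `lam = 0` with all weights equal to 1 reduces the function to ordinary least squares, which makes a quick sanity check.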