Mutual Information

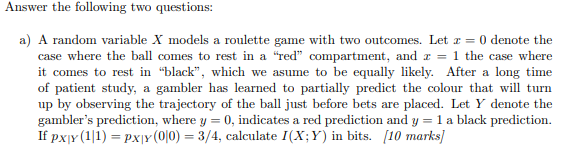

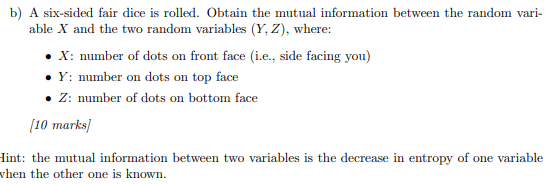

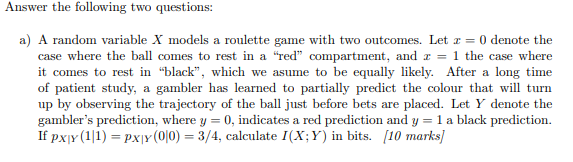

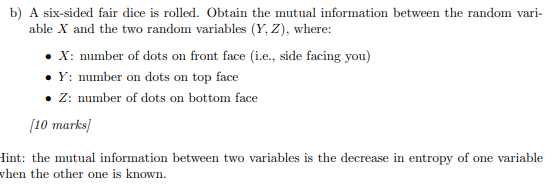

Answer the following two questions:

a) A random variable X models a roulette game with two outcomes. Let x = 0 denote the case where the ball comes to rest in a "red" compartment and x = 1 the case where it comes to rest in a "black" one; we assume the two outcomes are equally likely. After a long period of patient study, a gambler has learned to partially predict the colour that will turn up by observing the trajectory of the ball just before bets are placed. Let Y denote the gambler's prediction, where y = 0 indicates a red prediction and y = 1 a black prediction. If p_{Y|X}(1|1) = p_{Y|X}(0|0) = 3/4, calculate I(X; Y) in bits. (10 marks)

b) A fair six-sided die is rolled. Obtain the mutual information between the random variable X and the pair of random variables (Y, Z), where:

- X: number of dots on the front face (i.e., the side facing you)
- Y: number of dots on the top face
- Z: number of dots on the bottom face

(10 marks)

Hint: the mutual information between two variables is the decrease in entropy of one variable when the other one is known.
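Following the hint, both parts can be worked as a decrease in entropy. For (a), X is uniform and the prediction is correct with probability 3/4 for either colour, so Y is also uniform and I(X; Y) = H(Y) - H(Y|X) = 1 - H_b(3/4), where H_b is the binary entropy function; this comes to about 0.189 bits. For (b), assuming a standard die (opposite faces sum to 7) whose 24 possible orientations are equally likely, Z = 7 - Y carries no information beyond Y, and once the top face is known the front face is uniform over the four side faces. Hence I(X; (Y, Z)) = H(X) - H(X|Y, Z) = log2 6 - log2 4 = log2(3/2), about 0.585 bits. The sketch below checks both numbers by building each joint distribution explicitly and applying the definition of mutual information; the helper names `mutual_information`, `roulette_joint`, and `die_joint` are hypothetical, chosen for illustration only.

```python
from collections import Counter
from fractions import Fraction
from math import log2


def mutual_information(joint):
    """I(A;B) in bits from a dict {(a, b): probability}."""
    p_a = Counter()
    p_b = Counter()
    for (a, b), p in joint.items():
        p_a[a] += p  # marginal of the first variable
        p_b[b] += p  # marginal of the second variable
    return sum(
        float(p) * log2(float(p / (p_a[a] * p_b[b])))
        for (a, b), p in joint.items()
        if p > 0
    )


def roulette_joint(p_correct=Fraction(3, 4)):
    """Joint p(x, y) for part (a): X uniform, prediction correct w.p. p_correct."""
    joint = {}
    for x in (0, 1):  # 0 = red, 1 = black, each with probability 1/2
        for y in (0, 1):
            p_y_given_x = p_correct if y == x else 1 - p_correct
            joint[(x, y)] = Fraction(1, 2) * p_y_given_x
    return joint


def die_joint():
    """Joint p(x, (y, z)) for part (b).

    Assumption: a standard die (opposite faces sum to 7) with all 24
    orientations equally likely; an orientation is fixed by (top, front).
    """
    orientations = [
        (top, front)
        for top in range(1, 7)
        for front in range(1, 7)
        if front not in (top, 7 - top)  # front must be one of the 4 side faces
    ]
    joint = Counter()
    for top, front in orientations:
        bottom = 7 - top
        joint[(front, (top, bottom))] += Fraction(1, len(orientations))
    return dict(joint)


if __name__ == "__main__":
    print(f"(a) I(X;Y)     = {mutual_information(roulette_joint()):.4f} bits")
    print(f"(b) I(X;(Y,Z)) = {mutual_information(die_joint()):.4f} bits")
```

Run as a script, this should print 0.1887 bits for (a) and 0.5850 bits for (b). Exact `Fraction` arithmetic is used for the probabilities so that the marginals sum exactly; floating point enters only at the logarithm.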
