Naive Bayes Classifier

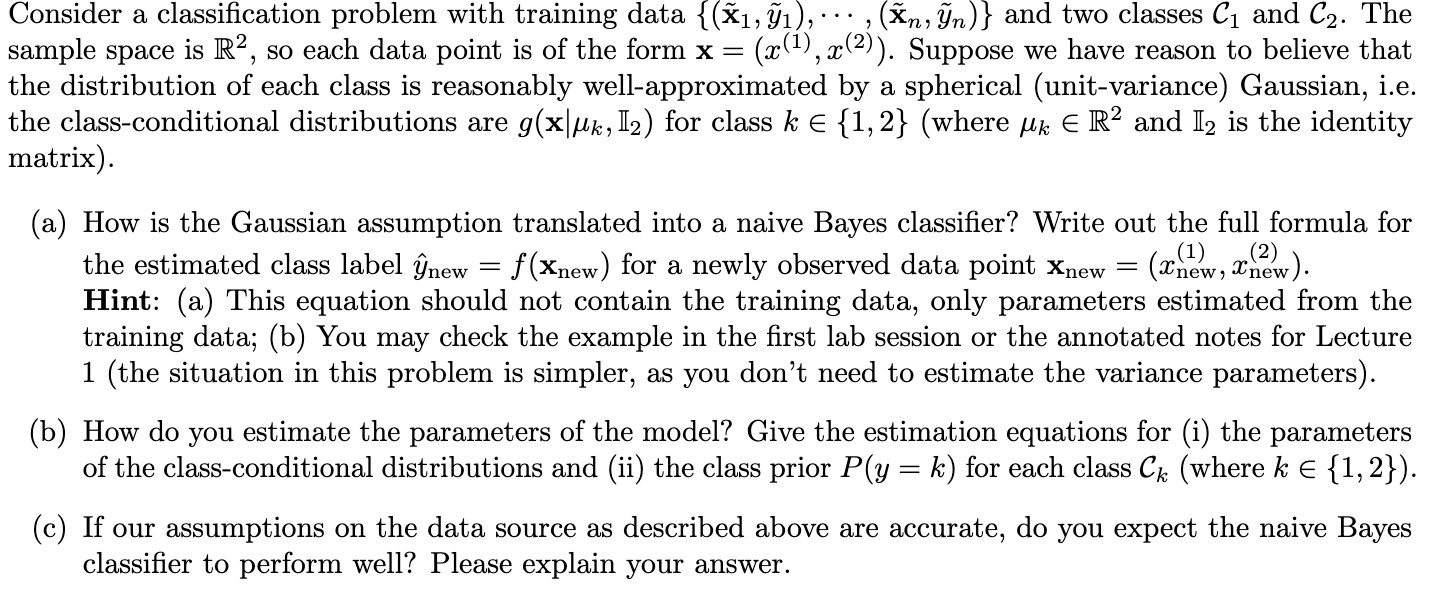

Consider a classification problem with training data $\{(x_1, y_1), \ldots, (x_n, y_n)\}$ and two classes $C_1$ and $C_2$. The sample space is $\mathbb{R}^2$, so each data point is of the form $x = (x^{(1)}, x^{(2)})$. Suppose we have reason to believe that the distribution of each class is reasonably well-approximated by a spherical (unit-variance) Gaussian, i.e. the class-conditional distributions are $g(x \mid \mu_k, I_2)$ for class $k \in \{1, 2\}$ (where $\mu_k \in \mathbb{R}^2$ and $I_2$ is the identity matrix).

(a) How is the Gaussian assumption translated into a naive Bayes classifier? Write out the full formula for the estimated class label $\hat{y}_{\text{new}} = f(x_{\text{new}})$ for a newly observed data point $x_{\text{new}} = (x_{\text{new}}^{(1)}, x_{\text{new}}^{(2)})$. Hint: (a) This equation should not contain the training data, only parameters estimated from the training data. (b) You may check the example in the first lab session or the annotated notes for Lecture 1 (the situation in this problem is simpler, as you don't need to estimate the variance parameters).

(b) How do you estimate the parameters of the model? Give the estimation equations for (i) the parameters of the class-conditional distributions and (ii) the class prior $P(y = k)$ for each class $C_k$ (where $k \in \{1, 2\}$).

(c) If our assumptions on the data source as described above are accurate, do you expect the naive Bayes classifier to perform well? Please explain your

Step by Step Solution
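
As a rough illustration of parts (a) and (b), here is a minimal Python sketch, assuming the usual maximum-likelihood estimates: each class mean $\mu_k$ is the average of the training points labelled $k$, and each prior $P(y = k)$ is the fraction of training points in class $k$. With identity covariance the Gaussian density factorises over the two coordinates, so the naive Bayes rule reduces to picking the class that maximises $\log P(y = k) - \tfrac{1}{2}\lVert x_{\text{new}} - \mu_k \rVert^2$. The function names `fit` and `predict` and the toy data are hypothetical, chosen only for this example; they do not come from the original question or its lab material.

```python
import math
from collections import defaultdict


def fit(points, labels):
    """Estimate class means and class priors from labelled 2-D points."""
    sums = defaultdict(lambda: [0.0, 0.0])  # per-class coordinate sums
    counts = defaultdict(int)               # per-class sample counts
    for (x1, x2), y in zip(points, labels):
        sums[y][0] += x1
        sums[y][1] += x2
        counts[y] += 1
    n = len(labels)
    # Class mean: average of the points in that class.
    means = {k: (sums[k][0] / counts[k], sums[k][1] / counts[k]) for k in counts}
    # Class prior: relative frequency of the class in the training data.
    priors = {k: counts[k] / n for k in counts}
    return means, priors


def predict(x_new, means, priors):
    """Return the class k maximising log P(y=k) - 0.5 * ||x_new - mu_k||^2."""
    def score(k):
        d1 = x_new[0] - means[k][0]
        d2 = x_new[1] - means[k][1]
        # The Gaussian normalising constant is identical for both classes
        # (unit variance), so it is dropped from the comparison.
        return math.log(priors[k]) - 0.5 * (d1 * d1 + d2 * d2)
    return max(means, key=score)


# Toy data, made up purely for illustration.
points = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (4.0, 4.0), (5.0, 4.0)]
labels = [1, 1, 1, 2, 2]
means, priors = fit(points, labels)
```

Calling `predict((0.5, 0.5), means, priors)` on this toy data picks class 1, since the point lies much closer to the class-1 mean. Note that the prediction uses only the estimated means and priors, not the training points themselves, which is what the hint in part (a) asks for.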
