Question: ONLY ONE OF THE OPTIONS IS CORRECT ANSWER, COMPUTER VISION, CONVOLUTIONAL NEURAL NETWORKS juppose that the input to a self-attertion layer is a sequerce {x1,x2,x3,x4},

ONLY ONE OF THE OPTIONS IS CORRECT ANSWER, COMPUTER VISION, CONVOLUTIONAL NEURAL NETWORKS

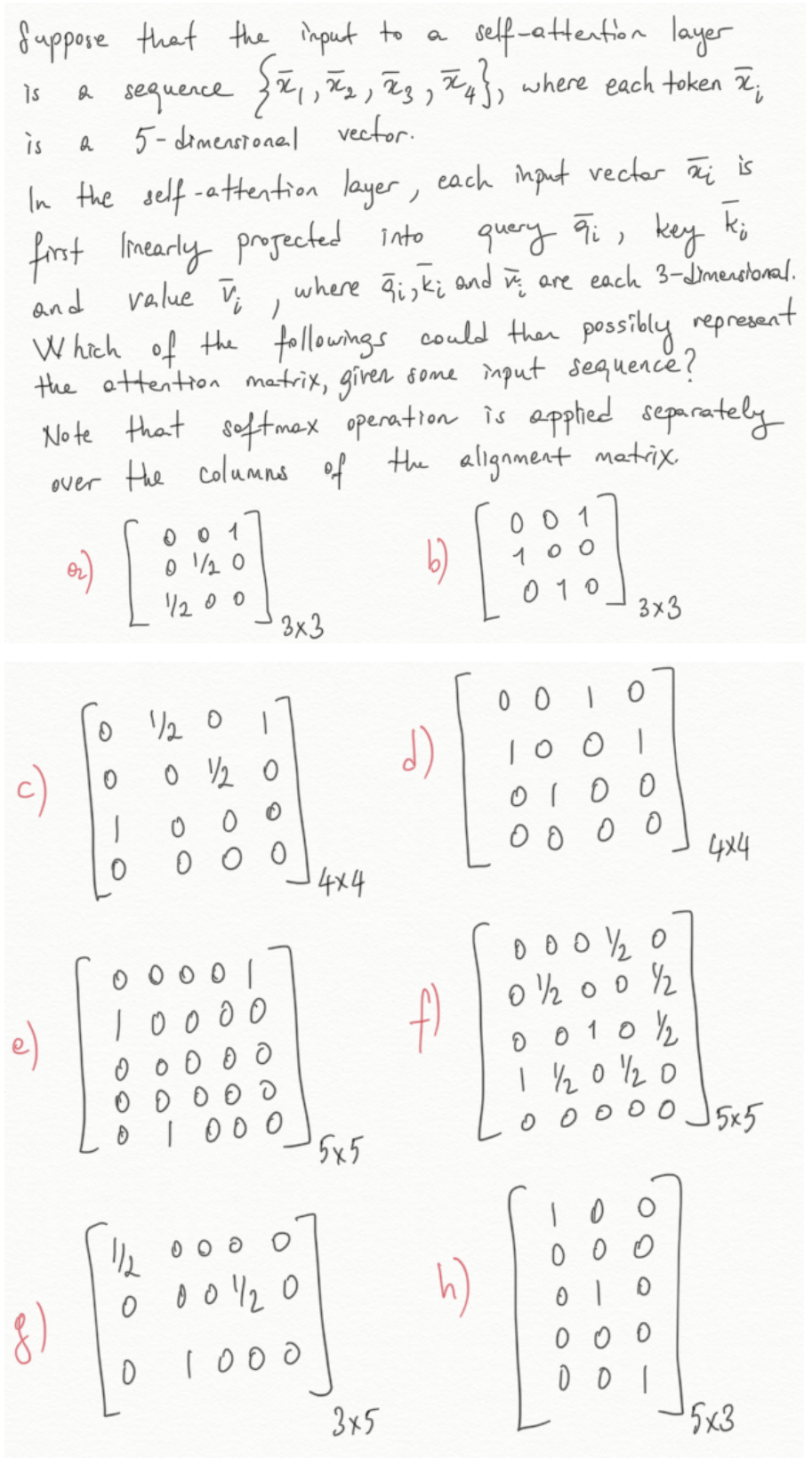

juppose that the input to a self-attertion layer is a sequerce {x1,x2,x3,x4}, where each token xi is a 5 -dimensional vector. In the self-attention layer, each input vector xi is first linearly projected into query qi, key ki and value vi, where qi,ki and vi are each 3-dimensional. Which of the followings could then possibly represent the attention matrix, given some input sequence? Note that softmax operation is apphied separately over the columns of the alignment matrix. or) 001/201/2010033 b) 01000110033 c) 00101/200001/200100044d010000101000010044 e) 010000000100000000001000055f0001001/201/20001001/2001/2001/21/20055 f)1/20000100001/20000 h) 10000001000000153

Step by Step Solution

There are 3 Steps involved in it

Get step-by-step solutions from verified subject matter experts