Question: please answer( C and D only) as soon as possible thank you Consider a small cluster with 20 machines: 19 DataNodes and 1 NameNode. Each

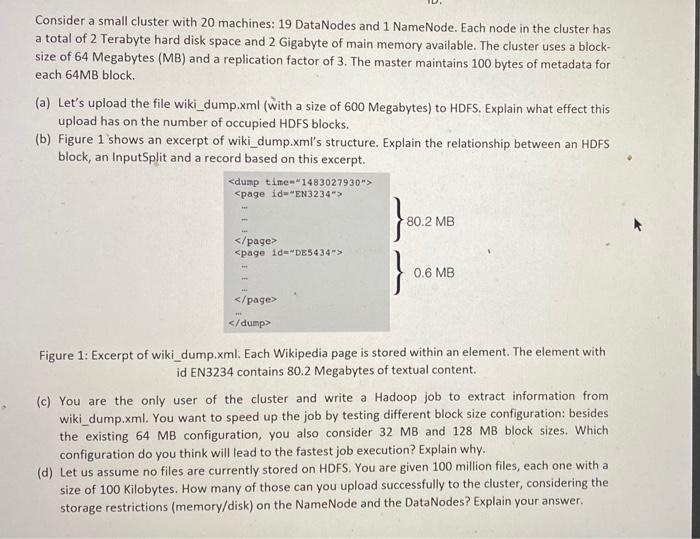

Consider a small cluster with 20 machines: 19 DataNodes and 1 NameNode. Each node in the cluster has a total of 2 Terabyte hard disk space and 2 Gigabyte of main memory available. The cluster uses a block- size of 64 Megabytes (MB) and a replication factor of 3. The master maintains 100 bytes of metadata for each 64MB block (a) Let's upload the file wiki_dump.xml (with a size of 600 Megabytes) to HDFS. Explain what effect this upload has on the number of occupied HDFS blocks. (b) Figure 1 shows an excerpt of wiki_dump.xml's structure. Explain the relationship between an HDFS block, an InputSplit and a record based on this excerpt.

Step by Step Solution

There are 3 Steps involved in it

Get step-by-step solutions from verified subject matter experts