Question: Please answer Exercise 4.13.

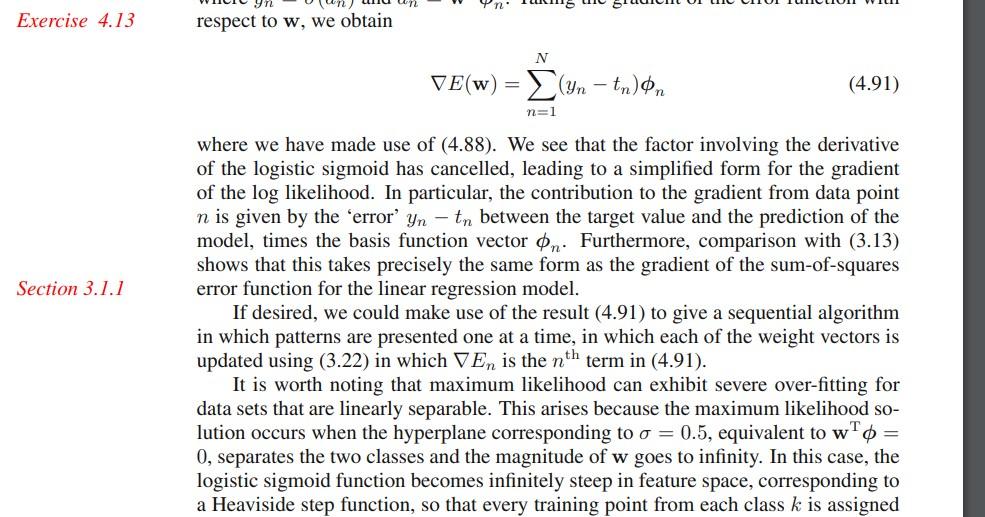

Exercise 4.13

Taking the gradient of the error function with respect to $\mathbf{w}$, we obtain

$$\nabla E(\mathbf{w}) = \sum_{n=1}^{N} (y_n - t_n)\,\boldsymbol{\phi}_n \tag{4.91}$$

where we have made use of (4.88). We see that the factor involving the derivative of the logistic sigmoid has cancelled, leading to a simplified form for the gradient of the log likelihood. In particular, the contribution to the gradient from data point $n$ is given by the 'error' $y_n - t_n$ between the target value and the prediction of the model, times the basis function vector $\boldsymbol{\phi}_n$. Furthermore, comparison with (3.13) shows that this takes precisely the same form as the gradient of the sum-of-squares error function for the linear regression model.

If desired, we could make use of the result (4.91) to give a sequential algorithm in which patterns are presented one at a time, in which each of the weight vectors is updated using (3.22) in which $\nabla E_n$ is the $n$th term in (4.91).

It is worth noting that maximum likelihood can exhibit severe over-fitting for data sets that are linearly separable. This arises because the maximum likelihood solution occurs when the hyperplane corresponding to $\sigma = 0.5$, equivalent to $\mathbf{w}^{\mathrm{T}}\boldsymbol{\phi} = 0$, separates the two classes and the magnitude of $\mathbf{w}$ goes to infinity. In this case, the logistic sigmoid function becomes infinitely steep in feature space, corresponding to a Heaviside step function, so that every training point from each class $k$ is assigned
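The gradient formula (4.91) can be sanity-checked numerically. The sketch below is illustrative, not a textbook solution. It assumes the error function is the cross-entropy (negative log likelihood) with $y_n = \sigma(\mathbf{w}^{\mathrm{T}}\boldsymbol{\phi}_n)$, and it compares the analytic gradient against central finite differences on random data. The function names, the random data, and the learning rate `eta` are all hypothetical choices made for this example.

```python
import numpy as np

def sigmoid(a):
    """Logistic sigmoid sigma(a) = 1 / (1 + exp(-a))."""
    return 1.0 / (1.0 + np.exp(-a))

def cross_entropy(w, Phi, t):
    """Assumed error function: E(w) = -sum_n [t_n ln y_n + (1 - t_n) ln(1 - y_n)]."""
    y = sigmoid(Phi @ w)
    return -np.sum(t * np.log(y) + (1.0 - t) * np.log(1.0 - y))

def gradient(w, Phi, t):
    """Analytic gradient as in (4.91): sum_n (y_n - t_n) phi_n."""
    y = sigmoid(Phi @ w)
    return Phi.T @ (y - t)

def point_gradient(w, phi_n, t_n):
    """Contribution of a single data point: (y_n - t_n) phi_n."""
    return (sigmoid(phi_n @ w) - t_n) * phi_n

def numerical_gradient(w, Phi, t, eps=1e-6):
    """Central finite-difference approximation of the gradient of E(w)."""
    grad = np.zeros_like(w)
    for i in range(w.size):
        step = np.zeros_like(w)
        step[i] = eps
        grad[i] = (cross_entropy(w + step, Phi, t)
                   - cross_entropy(w - step, Phi, t)) / (2.0 * eps)
    return grad

def sequential_step(w, phi_n, t_n, eta=0.1):
    """One sequential update w <- w - eta * grad E_n (eta is a hypothetical learning rate)."""
    return w - eta * point_gradient(w, phi_n, t_n)

# Hypothetical random data: 20 points, 3 basis functions, binary targets.
rng = np.random.default_rng(0)
Phi = rng.normal(size=(20, 3))
t = rng.integers(0, 2, size=20).astype(float)
w = rng.normal(size=3)

analytic = gradient(w, Phi, t)
numeric = numerical_gradient(w, Phi, t)
max_abs_diff = np.max(np.abs(analytic - numeric))
print("max |analytic - numeric| =", max_abs_diff)

# A single sequential update using the first data point.
w_next = sequential_step(w, Phi[0], t[0])
```

If the two gradients agree to within finite-difference error, that is consistent with the sigmoid-derivative factor cancelling as the text describes. A numerical check of this kind supports the formula but does not replace the chain-rule derivation the exercise asks for.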
