Question: Please answer question 4. (4) Answer why entropy is maximized in a uniform distribution. (25 points)


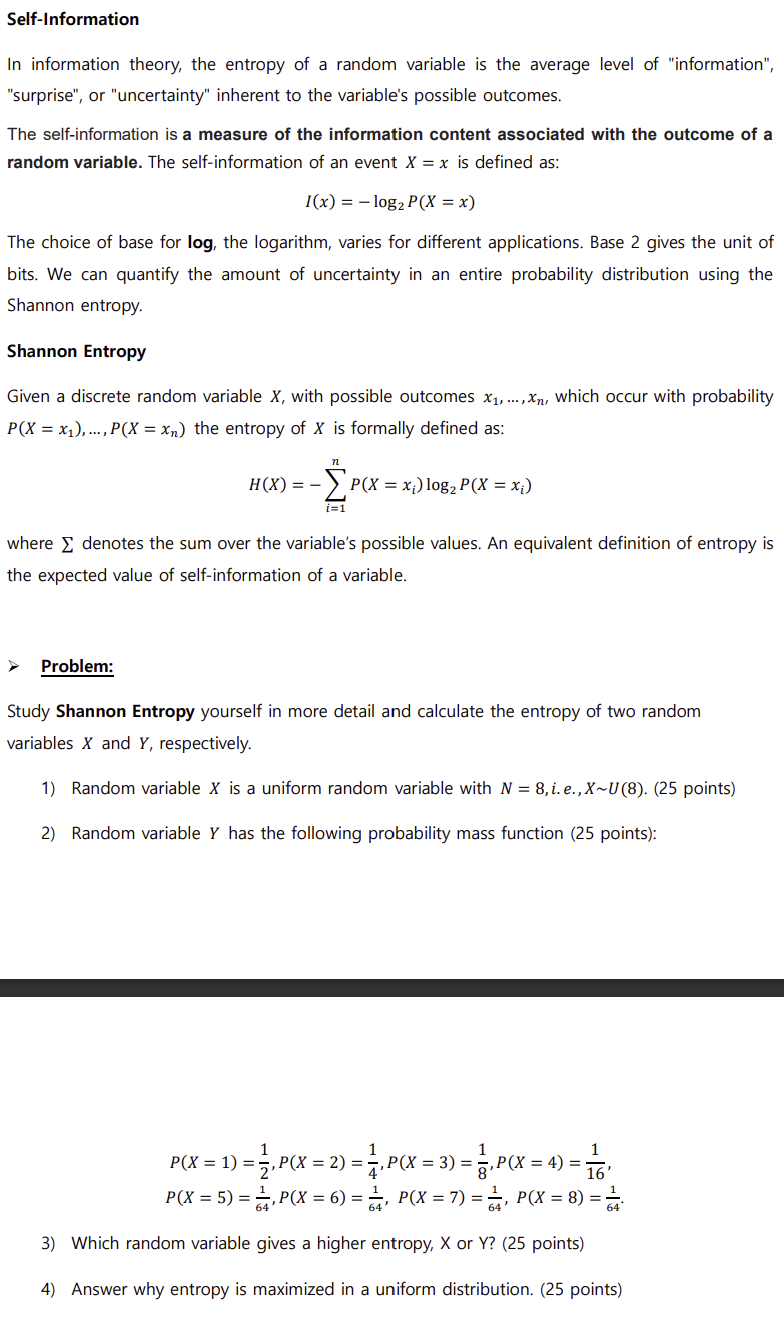

Self-Information

In information theory, the entropy of a random variable is the average level of "information", "surprise", or "uncertainty" inherent to the variable's possible outcomes.

The self-information is a measure of the information content associated with the outcome of a random variable. The self-information of an event $x$ that occurs with probability $P(x)$ is defined as:

$$I(x) = -\log P(x)$$

The choice of base for $\log$, the logarithm, varies for different applications. Base 2 gives the unit of bits. We can quantify the amount of uncertainty in an entire probability distribution using the Shannon entropy.
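As a minimal sketch of the self-information idea, assuming the standard definition $I(x) = -\log_b P(x)$ with base $b = 2$ for bits, the function below computes the information content of a single event. The name `self_information` and the example probabilities are illustrative assumptions, not part of the original problem.

```python
import math


def self_information(p, base=2.0):
    """Return -log_base(p), the self-information of an event with probability p.

    Hypothetical helper for illustration; base=2 gives the result in bits.
    """
    if not 0.0 < p <= 1.0:
        raise ValueError("p must be in the interval (0, 1]")
    return -math.log(p, base)


# Rarer events carry more information than likely ones.
fair_coin_bits = self_information(0.5)     # one fair coin flip
rare_event_bits = self_information(0.125)  # an event with probability 1/8
print(fair_coin_bits, rare_event_bits)
```

An event that is certain ($P(x) = 1$) carries no information, and the information content grows as the event becomes less likely.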

Shannon Entropy

Given a discrete random variable $X$ with possible outcomes $x_1, \dots, x_n$, which occur with probability $P(x_1), \dots, P(x_n)$, the entropy of $X$ is formally defined as:

$$H(X) = -\sum_{i=1}^{n} P(x_i) \log P(x_i)$$

where $\sum$ denotes the sum over the variable's possible values. An equivalent definition of entropy is the expected value of the self-information of a variable: $H(X) = \mathbb{E}[I(X)]$.
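The definition can be checked numerically with a short sketch, assuming the usual form $H(X) = -\sum_i P(x_i) \log_2 P(x_i)$ and the convention that outcomes with zero probability contribute nothing. The function name `shannon_entropy` and the two distributions are made up for illustration; they are not the random variables from the problem.

```python
import math


def shannon_entropy(probs, base=2.0):
    """Return the Shannon entropy of a discrete distribution (bits for base=2).

    Hypothetical helper for illustration. Terms with p == 0 are skipped,
    following the convention 0 * log(0) = 0.
    """
    if any(p < 0 for p in probs) or not math.isclose(sum(probs), 1.0):
        raise ValueError("probs must be non-negative and sum to 1")
    return -sum(p * math.log(p, base) for p in probs if p > 0)


# Illustrative distributions over four outcomes (assumed, not from the problem).
uniform = [0.25, 0.25, 0.25, 0.25]
skewed = [0.7, 0.1, 0.1, 0.1]

print(shannon_entropy(uniform))  # equals log2(4) up to rounding
print(shannon_entropy(skewed))   # strictly smaller than the uniform case
```

With four outcomes, the uniform distribution reaches the upper bound $\log_2 4 = 2$ bits, while any non-uniform distribution over the same outcomes falls below it.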

Problem:

Study Shannon entropy yourself in more detail and calculate the entropy of each of the two given random variables.

(4) Answer why entropy is maximized in a uniform distribution. (25 points)
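One common way to argue this, sketched here under the assumption of a discrete variable $X$ with $n$ outcomes and the natural logarithm (a different base only rescales the result), uses the elementary inequality $\ln t \le t - 1$, which holds with equality only at $t = 1$. Sums are taken over outcomes with $P(x_i) > 0$:

$$
\begin{aligned}
H(X) - \ln n
  &= \sum_{i} P(x_i) \ln \frac{1}{n\,P(x_i)} \\
  &\le \sum_{i} P(x_i) \left( \frac{1}{n\,P(x_i)} - 1 \right) \\
  &= \sum_{i} \frac{1}{n} - \sum_{i} P(x_i) \\
  &\le 1 - 1 = 0 .
\end{aligned}
$$

So $H(X) \le \ln n$ for every distribution over $n$ outcomes. Equality requires $n\,P(x_i) = 1$ for all $i$, that is, $P(x_i) = 1/n$, which is the uniform distribution. Intuitively, when every outcome is equally likely, no outcome can be guessed better than any other, so the average surprise is as large as it can be.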
