Question: Please answer the question at the bottom: what is the gradient descent update to $w^{[1]}_{1,2}$ with a learning rate of $\alpha$? Please write it in terms of $x^{(i)}$, $y^{(i)}$, $o^{(i)}$, and the weights. The sigmoid function is the activation function for $h_1$, $h_2$, $h_3$, and $o$.


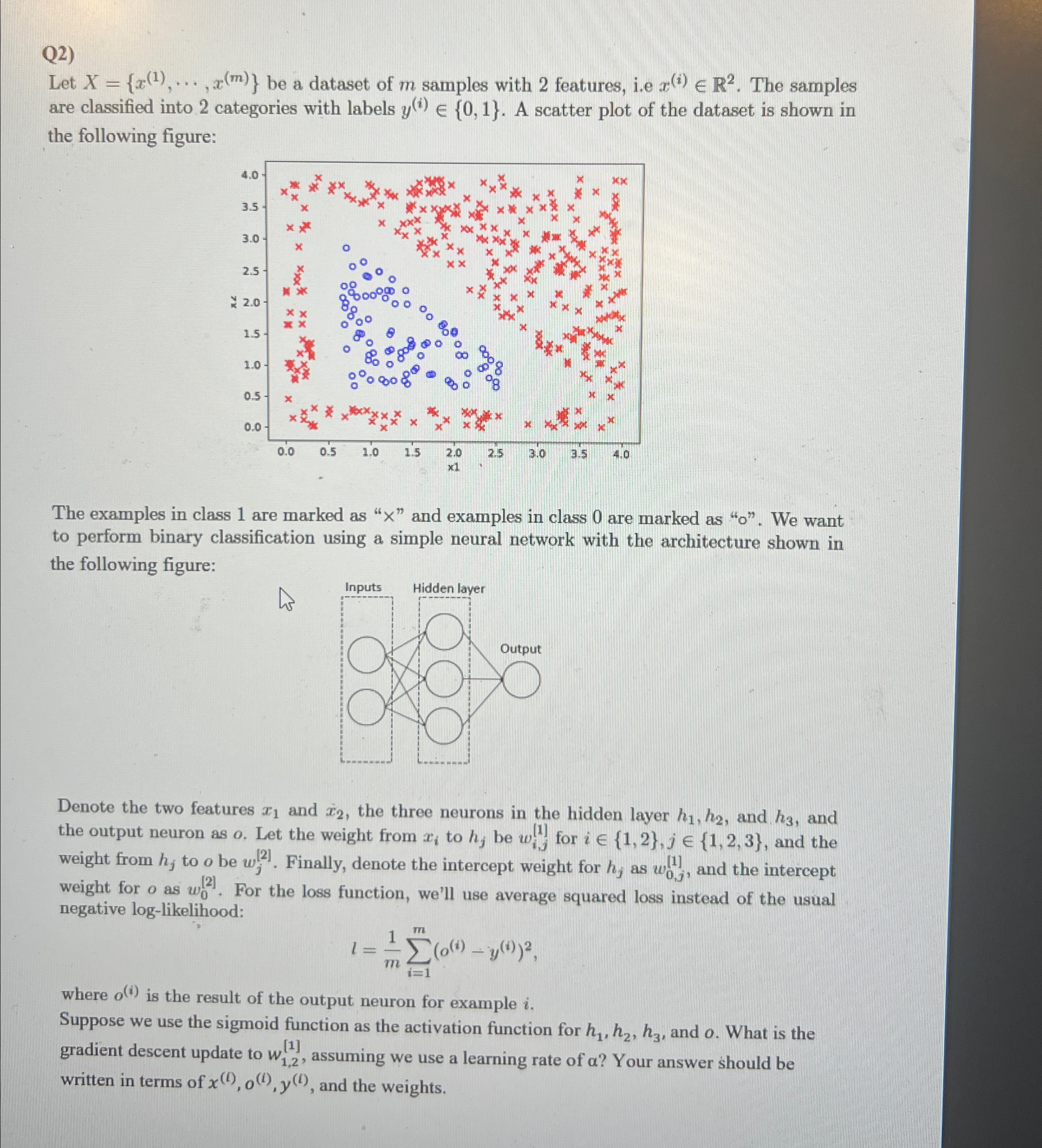

Let $\{x^{(1)}, \cdots, x^{(m)}\}$ be a dataset of $m$ samples with 2 features, i.e. $x^{(i)} \in \mathbb{R}^2$. The samples are classified into 2 categories with labels $y^{(i)} \in \{0, 1\}$. A scatter plot of the dataset is shown in the following figure:

The examples in class 1 are marked as "×" and the examples in class 0 are marked as "o". We want to perform binary classification using a simple neural network with the architecture shown in the following figure:

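Denote the two features $x_1$ and $x_2$, the three neurons in the hidden layer $h_1$, $h_2$, and $h_3$, and the output neuron as $o$. Let the weight from $x_i$ to $h_j$ be $w^{[1]}_{i,j}$ for $i \in \{1, 2\}$, $j \in \{1, 2, 3\}$, and the weight from $h_j$ to $o$ be $w^{[2]}_j$. Finally, denote the intercept weight for $h_j$ as $w^{[1]}_{0,j}$ and the intercept weight for $o$ as $w^{[2]}_0$. For the loss function, we'll use the average squared loss instead of the usual negative log-likelihood:

$$l = \frac{1}{m}\sum_{i=1}^{m}\left(o^{(i)} - y^{(i)}\right)^2$$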

where $o^{(i)}$ is the result of the output neuron for example $i$.
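The network and loss described above can be sketched in plain Python as follows. The data layout is a hypothetical choice for illustration, not part of the original problem: `W1[(k, j)]` holds $w^{[1]}_{k,j}$ (with $k = 0$ for the intercept of $h_j$), `w2[j]` holds $w^{[2]}_j$ (with `w2[0]` the intercept of $o$), and the sigmoid is used for every neuron, as the question specifies.

```python
import math


def sigmoid(z):
    """Logistic sigmoid, used as the activation for h1, h2, h3 and o."""
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, W1, w2):
    """Forward pass for a single example x = (x1, x2).

    Hypothetical layout: W1[(k, j)] is w^[1]_{k,j}, where k = 0 is the
    intercept of h_j and k = 1, 2 index the features x_k; w2[j] is w^[2]_j
    for j = 1, 2, 3, and w2[0] is the intercept of o.
    Returns (h, o) with h = [h1, h2, h3].
    """
    x1, x2 = x
    # Hidden layer: h_j = sigmoid(w^[1]_{0,j} + w^[1]_{1,j} x1 + w^[1]_{2,j} x2)
    h = [sigmoid(W1[(0, j)] + W1[(1, j)] * x1 + W1[(2, j)] * x2) for j in (1, 2, 3)]
    # Output: o = sigmoid(w^[2]_0 + sum_j w^[2]_j h_j)
    o = sigmoid(w2[0] + sum(w2[j] * h[j - 1] for j in (1, 2, 3)))
    return h, o


def average_squared_loss(X, Y, W1, w2):
    """l = (1/m) * sum_i (o^(i) - y^(i))^2 over the m examples in X, Y."""
    m = len(X)
    return sum((forward(x, W1, w2)[1] - y) ** 2 for x, y in zip(X, Y)) / m


if __name__ == "__main__":
    # All-zero weights: every neuron outputs sigmoid(0) = 0.5.
    W1 = {(k, j): 0.0 for k in (0, 1, 2) for j in (1, 2, 3)}
    w2 = [0.0, 0.0, 0.0, 0.0]
    X = [(1.0, 2.0), (-1.0, 0.5)]
    Y = [0.0, 1.0]
    print(average_squared_loss(X, Y, W1, w2))  # 0.25
```

With all weights at zero, every neuron outputs $\sigma(0) = 0.5$, so for labels 0 and 1 the loss is $(0.5^2 + 0.5^2)/2 = 0.25$.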

Suppose we use the sigmoid function as the activation function for $h_1$, $h_2$, $h_3$, and $o$. What is the gradient descent update to $w^{[1]}_{1,2}$, assuming we use a learning rate of $\alpha$? Your answer should be written in terms of $x^{(i)}$, $o^{(i)}$, $y^{(i)}$, and the weights.
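A sketch of one derivation, using the notation above and $\sigma'(z) = \sigma(z)\left(1 - \sigma(z)\right)$. Only $h_2$ depends on $w^{[1]}_{1,2}$, so the chain rule runs through $o^{(i)}$ and then $h_2^{(i)}$:

$$z_2^{(i)} = w^{[1]}_{0,2} + w^{[1]}_{1,2}\,x_1^{(i)} + w^{[1]}_{2,2}\,x_2^{(i)}, \qquad h_2^{(i)} = \sigma\left(z_2^{(i)}\right)$$

$$\frac{\partial l}{\partial w^{[1]}_{1,2}} = \frac{2}{m}\sum_{i=1}^{m}\left(o^{(i)} - y^{(i)}\right) o^{(i)}\left(1 - o^{(i)}\right) w^{[2]}_2\, h_2^{(i)}\left(1 - h_2^{(i)}\right) x_1^{(i)}$$

$$w^{[1]}_{1,2} \leftarrow w^{[1]}_{1,2} - \frac{2\alpha}{m}\sum_{i=1}^{m}\left(o^{(i)} - y^{(i)}\right) o^{(i)}\left(1 - o^{(i)}\right) w^{[2]}_2\, \sigma\left(z_2^{(i)}\right)\left(1 - \sigma\left(z_2^{(i)}\right)\right) x_1^{(i)}$$

The Python below is a hypothetical check of that formula, not an official solution: `grad_w1_12` implements the analytic gradient, `numeric_grad_w1_12` compares it with a central finite difference, and `gd_update_w1_12` applies one step. The dictionary layout `W1[(k, j)]` for $w^{[1]}_{k,j}$ and the list `w2` for $w^{[2]}_j$ are assumptions made for illustration.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, W1, w2):
    """Hypothetical layout: W1[(k, j)] = w^[1]_{k,j} (k = 0 is the intercept), w2[j] = w^[2]_j."""
    x1, x2 = x
    h = [sigmoid(W1[(0, j)] + W1[(1, j)] * x1 + W1[(2, j)] * x2) for j in (1, 2, 3)]
    o = sigmoid(w2[0] + sum(w2[j] * h[j - 1] for j in (1, 2, 3)))
    return h, o


def loss(X, Y, W1, w2):
    """Average squared loss (1/m) * sum_i (o^(i) - y^(i))^2."""
    return sum((forward(x, W1, w2)[1] - y) ** 2 for x, y in zip(X, Y)) / len(X)


def grad_w1_12(X, Y, W1, w2):
    """Analytic dl/dw^[1]_{1,2} from the chain rule through o and h2."""
    total = 0.0
    for (x1, x2), y in zip(X, Y):
        h, o = forward((x1, x2), W1, w2)
        h2 = h[1]  # h[0] is h1, so h[1] is h2
        total += (o - y) * o * (1 - o) * w2[2] * h2 * (1 - h2) * x1
    return 2.0 * total / len(X)


def gd_update_w1_12(X, Y, W1, w2, alpha):
    """Return a copy of W1 with one gradient-descent step applied to w^[1]_{1,2} only."""
    new_W1 = dict(W1)
    new_W1[(1, 2)] = W1[(1, 2)] - alpha * grad_w1_12(X, Y, W1, w2)
    return new_W1


def numeric_grad_w1_12(X, Y, W1, w2, eps=1e-6):
    """Central finite-difference estimate of dl/dw^[1]_{1,2}, used as a sanity check."""
    plus, minus = dict(W1), dict(W1)
    plus[(1, 2)] += eps
    minus[(1, 2)] -= eps
    return (loss(X, Y, plus, w2) - loss(X, Y, minus, w2)) / (2 * eps)


if __name__ == "__main__":
    # Arbitrary illustrative weights and data.
    W1 = {(k, j): 0.1 * k - 0.2 * j + 0.05 for k in (0, 1, 2) for j in (1, 2, 3)}
    w2 = [0.3, -0.5, 0.8, 0.2]
    X = [(1.0, 2.0), (-1.0, 0.5), (0.3, -1.2)]
    Y = [1.0, 0.0, 1.0]
    print("analytic:", grad_w1_12(X, Y, W1, w2))
    print("numeric: ", numeric_grad_w1_12(X, Y, W1, w2))
```

The two printed gradients should agree to several decimal places; a mismatch would point to an error in the hand-derived formula.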
