Question: Please answer using MATLAB. This is an advanced math coding problem. Code the functions for questions 2 and 3. Show all code and outputs.

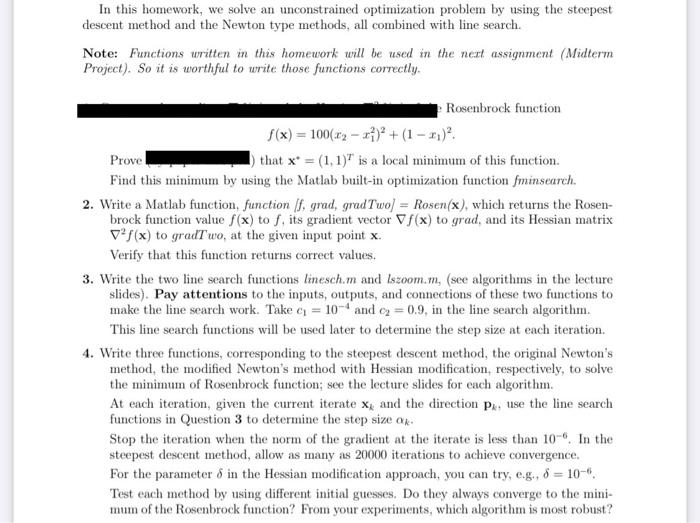

In this homework, we solve an unconstrained optimization problem using the steepest descent method and Newton-type methods, each combined with a line search. Note: the functions written in this homework will be reused in the next assignment (Midterm Project), so it is worth writing them correctly.

1. Consider the Rosenbrock function $f(x) = 100(x_2 - x_1^2)^2 + (1 - x_1)^2$. Prove that $x^* = (1, 1)^T$ is a local minimum of this function. Find this minimum by using the MATLAB built-in optimization function `fminsearch`.
2. Write a MATLAB function, `function [f, grad, gradTwo] = Rosen(x)`, which returns the Rosenbrock function value $f(x)$ in `f`, its gradient vector $\nabla f(x)$ in `grad`, and its Hessian matrix $\nabla^2 f(x)$ in `gradTwo`, at the given input point `x`. Verify that this function returns correct values.
3. Write the two line search functions `linesch.m` and `lszoom.m` (see the algorithms in the lecture slides). Pay attention to the inputs, outputs, and connections of these two functions to make the line search work. Take $c_1 = 10^{-\ldots}$ and $c_2 = 0.9$ in the line search algorithm. These line search functions will be used later to determine the step size at each iteration.
4. Write three functions, corresponding to the steepest descent method, the original Newton's method, and the modified Newton's method with Hessian modification, respectively, to find the minimum of the Rosenbrock function; see the lecture slides for each algorithm. At each iteration, given the current iterate $x_k$ and the direction $p_k$, use the line search functions from Question 3 to determine the step size $\alpha_k$. Stop the iteration when the norm of the gradient at the iterate is less than $10^{-\ldots}$. In the steepest descent method, allow as many as 20000 iterations to achieve convergence. For the parameter $\delta$ in the Hessian modification approach, you can try, e.g., $\delta = 10^{-\ldots}$. Test each method using different initial guesses. Do they always converge to the minimum of the Rosenbrock function? From your experiments, which algorithm is most robust?
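
For the proof in Question 1, one standard route is the second-order sufficient condition: if $\nabla f(x^*) = 0$ and $\nabla^2 f(x^*)$ is positive definite, then $x^*$ is a strict local minimizer. Differentiating $f(x) = 100(x_2 - x_1^2)^2 + (1 - x_1)^2$ and evaluating at $x^* = (1, 1)^T$ gives:

$$
\nabla f(x) = \begin{pmatrix} -400\,x_1(x_2 - x_1^2) - 2(1 - x_1) \\ 200\,(x_2 - x_1^2) \end{pmatrix},
\qquad
\nabla^2 f(x) = \begin{pmatrix} 1200\,x_1^2 - 400\,x_2 + 2 & -400\,x_1 \\ -400\,x_1 & 200 \end{pmatrix}
$$

$$
\nabla f(x^*) = \begin{pmatrix} 0 \\ 0 \end{pmatrix},
\qquad
\nabla^2 f(x^*) = \begin{pmatrix} 802 & -400 \\ -400 & 200 \end{pmatrix},
\qquad
802 > 0,\quad \det \nabla^2 f(x^*) = 802 \cdot 200 - 400^2 = 400 > 0
$$

Both leading principal minors are positive, so the Hessian at $x^*$ is positive definite (Sylvester's criterion) and $x^*$ is a strict local minimizer. Since $f \ge 0$ everywhere and $f(x^*) = 0$, it is in fact a global minimizer.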
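
For the numerical part of Question 1, a minimal `fminsearch` call might look like the following. `fminsearch` is a derivative-free Nelder-Mead simplex search, so only the function value is needed. The starting point $(-1.2, 1)^T$ and the tightened `optimset` tolerances are illustrative assumptions, not values given in the assignment.

```matlab
% Question 1: locate the Rosenbrock minimum with MATLAB's fminsearch.
rosen = @(x) 100*(x(2) - x(1)^2)^2 + (1 - x(1))^2;   % Rosenbrock function

x0 = [-1.2; 1];      % starting point (an assumption; try others too)
opts = optimset('TolX', 1e-10, 'TolFun', 1e-10, ...
                'MaxFunEvals', 1e4, 'MaxIter', 1e4);  % tolerances are assumptions

[xmin, fmin, exitflag] = fminsearch(rosen, x0, opts);
fprintf('xmin = (%.6f, %.6f), f(xmin) = %.3e, exitflag = %d\n', ...
        xmin(1), xmin(2), fmin, exitflag);
```

Trying several values of `x0` is a cheap way to see that the simplex search reaches the same point $(1, 1)^T$ from different starts.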

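
For Question 2, a minimal sketch of `Rosen.m`. The gradient and Hessian come from differentiating $f(x) = 100(x_2 - x_1^2)^2 + (1 - x_1)^2$ directly. The `nargout` checks, which skip the derivative work when only `f` is requested, are a design choice rather than something the assignment requires.

```matlab
function [f, grad, gradTwo] = Rosen(x)
%ROSEN  Rosenbrock function f(x) = 100*(x2 - x1^2)^2 + (1 - x1)^2.
%   [f, grad, gradTwo] = Rosen(x) returns the function value f, the
%   gradient grad (2x1 column vector) and the Hessian gradTwo (2x2)
%   at the point x.
x1 = x(1);
x2 = x(2);
r = x2 - x1^2;                          % inner residual x2 - x1^2
f = 100*r^2 + (1 - x1)^2;

if nargout > 1                          % gradient only when requested
    grad = [-400*x1*r - 2*(1 - x1);
             200*r];
end

if nargout > 2                          % Hessian only when requested
    gradTwo = [1200*x1^2 - 400*x2 + 2, -400*x1;
               -400*x1,                 200];
end
end
```

To verify it, `[f, g, H] = Rosen([1; 1])` should return `f = 0`, `g = [0; 0]` and `H = [802 -400; -400 200]`, and `Rosen([-1.2; 1])` should return `24.2`. Comparing `grad` with central differences of `f` at a few random points is another quick check.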
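
For Question 3, the lecture-slide algorithms are not reproduced here, so the sketch below assumes they are the standard strong-Wolfe line search and its zoom phase (Nocedal and Wright, *Numerical Optimization*, Algorithms 3.5 and 3.6). The argument lists, the handle `fun` returning `[f, g]`, the step-doubling expansion, the bisection inside the zoom, and the iteration caps are all assumptions to adapt to the slides. The main connection between the two functions is that `linesch` brackets an acceptable step and hands the bracket (`alphaLo`, `alphaHi`) to `lszoom`, which shrinks it.

```matlab
function alpha = linesch(fun, x, p, alphaMax, c1, c2)
%LINESCH  Strong-Wolfe line search (sketch of Nocedal & Wright, Alg. 3.5).
%   fun  : handle returning [f, g] at a point (interface is an assumption)
%   x, p : current iterate and descent direction (column vectors)
%   Returns a step alpha intended to satisfy the strong Wolfe conditions.
[f0, g0] = fun(x);
dphi0 = g0' * p;                   % phi'(0); negative for a descent direction
alphaPrev = 0;
phiPrev = f0;
alpha = min(1, alphaMax);          % unit initial step, natural for Newton methods
for i = 1:50                       % iteration cap is an assumption
    [phi, g] = fun(x + alpha*p);
    if phi > f0 + c1*alpha*dphi0 || (i > 1 && phi >= phiPrev)
        alpha = lszoom(fun, x, p, alphaPrev, alpha, phiPrev, f0, dphi0, c1, c2);
        return
    end
    dphi = g' * p;
    if abs(dphi) <= -c2*dphi0
        return                     % strong Wolfe conditions hold at alpha
    end
    if dphi >= 0
        alpha = lszoom(fun, x, p, alpha, alphaPrev, phi, f0, dphi0, c1, c2);
        return
    end
    if alpha >= alphaMax
        return                     % cannot expand further; sufficient decrease holds
    end
    alphaPrev = alpha;
    phiPrev = phi;
    alpha = min(2*alpha, alphaMax);  % expand the trial step (doubling is an assumption)
end
end

function alpha = lszoom(fun, x, p, alphaLo, alphaHi, phiLo, phi0, dphi0, c1, c2)
%LSZOOM  Zoom phase (sketch of Nocedal & Wright, Alg. 3.6) using bisection.
%   alphaLo: best step so far satisfying sufficient decrease (value phiLo);
%   the interval between alphaLo and alphaHi brackets acceptable steps.
for j = 1:100                      % iteration cap is an assumption
    alpha = 0.5*(alphaLo + alphaHi);   % bisection in place of interpolation
    [phi, g] = fun(x + alpha*p);
    if phi > phi0 + c1*alpha*dphi0 || phi >= phiLo
        alphaHi = alpha;           % step too long: shrink the bracket from above
    else
        dphi = g' * p;
        if abs(dphi) <= -c2*dphi0
            return                 % strong Wolfe conditions hold at alpha
        end
        if dphi*(alphaHi - alphaLo) >= 0
            alphaHi = alphaLo;     % keep the bracket oriented correctly
        end
        alphaLo = alpha;
        phiLo = phi;
    end
end
alpha = alphaLo;                   % fallback: best sufficient-decrease step found
end
```

The assignment asks for two files, so `lszoom` would go in its own `lszoom.m`; kept together in `linesch.m` it also works as a local function. With `Rosen.m` on the path, a call such as `alpha = linesch(@Rosen, xk, pk, 10, c1, 0.9)` returns $\alpha_k$ for iterate `xk` and direction `pk` (the cap `alphaMax = 10` is arbitrary).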

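
Question 4's modified Newton method needs a positive definite stand-in for $\nabla^2 f(x_k)$ wherever the Hessian is not positive definite, so that $p_k$ is a descent direction for the line search. The slides' exact scheme is not shown here; this sketch assumes one common variant, eigenvalue modification, in which every eigenvalue below $\delta$ is replaced by $\delta$. The helper name `modNewtonDir` is hypothetical.

```matlab
function p = modNewtonDir(H, g, delta)
%MODNEWTONDIR  Modified Newton direction (hypothetical helper name).
%   Eigenvalue modification (an assumed variant): eigenvalues of the
%   symmetric Hessian H below delta are replaced by delta, so the modified
%   matrix B = V*diag(lam)*V' is positive definite and p = -B\g is a
%   descent direction whenever g is nonzero.
[V, D] = eig((H + H')/2);          % symmetrize to guard against round-off
lam = max(diag(D), delta);         % modified eigenvalues, all >= delta > 0
p = -V * ((V' * g) ./ lam);        % p = -B^{-1} g computed in the eigenbasis
end
```

Inside a modified Newton loop, `p = modNewtonDir(H, g, delta)` would replace the pure Newton direction `p = -H\g`; when all eigenvalues of $\nabla^2 f(x_k)$ are at least $\delta$, the two coincide. For instance, at $x = (0, 1)^T$ the Rosenbrock Hessian is $\begin{pmatrix} -398 & 0 \\ 0 & 200 \end{pmatrix}$, which is indefinite, so that is the kind of point where the modification takes effect.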
