Question: PLEASE DO NOT JUST COPY ANSWER FROM THE INTERNET.

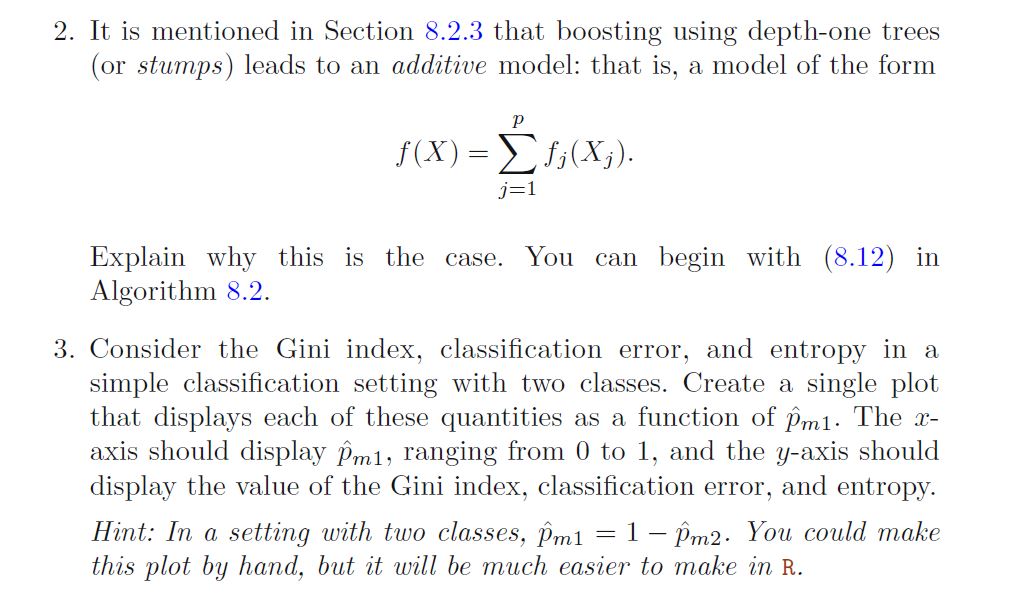

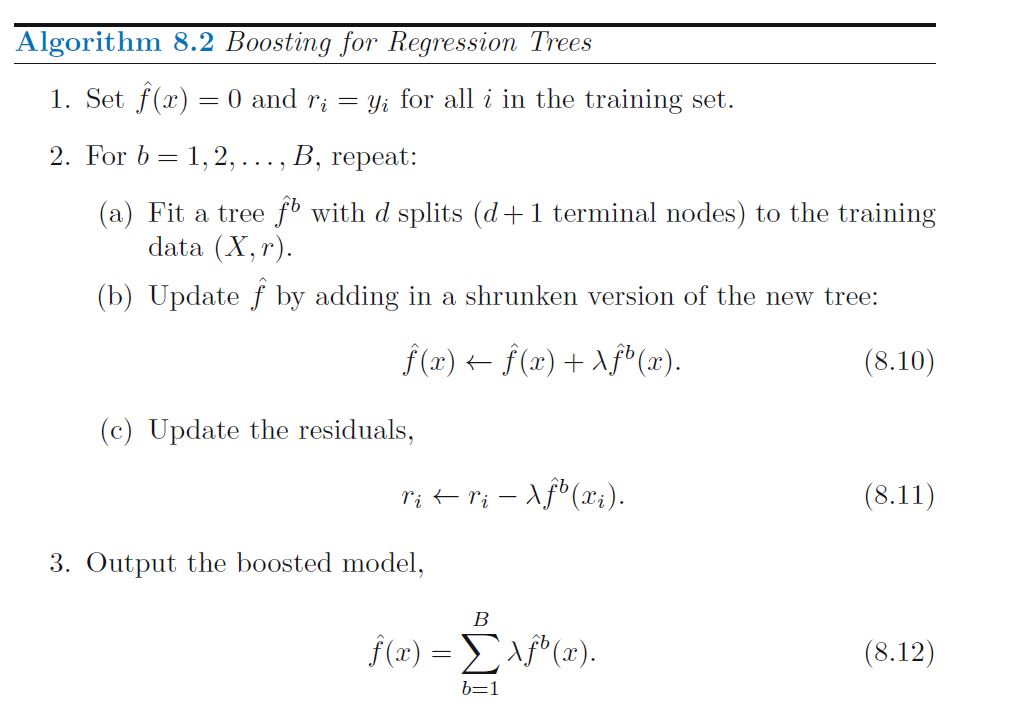

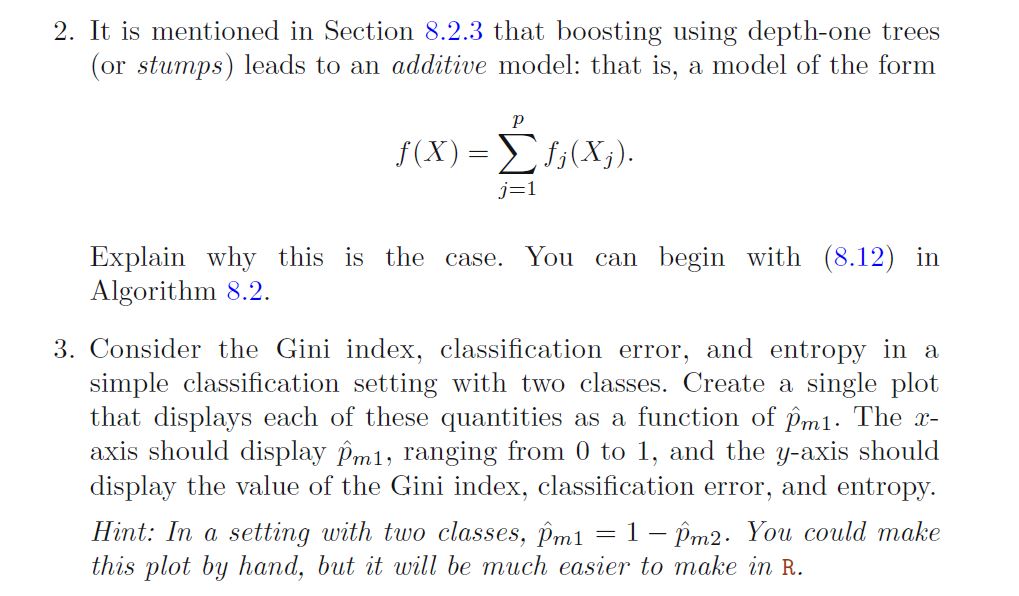

Algorithm 8.2 Boosting for Regression Trees

1. Set $\hat{f}(x) = 0$ and $r_i = y_i$ for all $i$ in the training set.
2. For $b = 1, 2, \ldots, B$, repeat:
   - (a) Fit a tree $\hat{f}^b$ with $d$ splits ($d + 1$ terminal nodes) to the training data $(X, r)$.
   - (b) Update $\hat{f}$ by adding in a shrunken version of the new tree:
     $$\hat{f}(x) \leftarrow \hat{f}(x) + \lambda \hat{f}^b(x). \qquad (8.10)$$
   - (c) Update the residuals,
     $$r_i \leftarrow r_i - \lambda \hat{f}^b(x_i). \qquad (8.11)$$
3. Output the boosted model,
   $$\hat{f}(x) = \sum_{b=1}^{B} \lambda \hat{f}^b(x). \qquad (8.12)$$

Exercise 2. It is mentioned in Section 8.2.3 that boosting using depth-one trees (or *stumps*) leads to an *additive* model: that is, a model of the form

$$f(X) = \sum_{j=1}^{p} f_j(X_j).$$

Explain why this is the case. You can begin with (8.12) in Algorithm 8.2.

Exercise 3. Consider the Gini index, classification error, and entropy in a simple classification setting with two classes. Create a single plot that displays each of these quantities as a function of $\hat{p}_{m1}$. The $x$-axis should display $\hat{p}_{m1}$, ranging from 0 to 1, and the $y$-axis should display the value of the Gini index, classification error, and entropy.

Hint: In a setting with two classes, $\hat{p}_{m1} = 1 - \hat{p}_{m2}$. You could make this plot by hand, but it will be much easier to make in R.
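For Exercise 2, one way to see the additivity is to regroup (8.12). A stump makes a single split, so each $\hat{f}^b$ depends on $x$ only through one coordinate; write $j(b)$ for that coordinate (notation introduced here, not in the source). Grouping the $B$ terms by $j(b)$ gives

$$\hat{f}(x) = \sum_{b=1}^{B} \lambda \hat{f}^b(x_{j(b)}) = \sum_{j=1}^{p} \Big( \sum_{b:\, j(b) = j} \lambda \hat{f}^b(x_j) \Big) = \sum_{j=1}^{p} f_j(x_j),$$

where $f_j$ collects the shrunken stumps that split on variable $j$ (and is identically zero if none do). The Python sketch below is a minimal, hypothetical illustration of Algorithm 8.2 with $d = 1$: the helper names (`fit_stump`, `boost_stumps`, `component`), the least-squares split search, the values of `B` and `lam`, and the toy data are all assumptions, not part of the exercise. It measures how far the fitted model is from the sum of its per-variable components.

```python
# Sketch of Algorithm 8.2 restricted to stumps (d = 1), pure Python.
# Assumptions (not from the source): stumps are fit by least squares over
# midpoints between sorted feature values; B, lam and the toy data are
# illustrative only.
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class Stump:
    feature: int      # the single variable j this stump splits on
    threshold: float  # split point s
    left: float       # prediction when x[j] <= s
    right: float      # prediction when x[j] > s

    def predict(self, x: Sequence[float]) -> float:
        return self.left if x[self.feature] <= self.threshold else self.right


def mean(v: Sequence[float]) -> float:
    return sum(v) / len(v)


def fit_stump(X: List[List[float]], r: List[float]) -> Stump:
    """Step 2(a) with d = 1: one least-squares split on the residuals r."""
    best = None
    for j in range(len(X[0])):
        values = sorted({row[j] for row in X})
        for lo, hi in zip(values, values[1:]):
            s = (lo + hi) / 2
            left = [ri for row, ri in zip(X, r) if row[j] <= s]
            right = [ri for row, ri in zip(X, r) if row[j] > s]
            ml, mr = mean(left), mean(right)
            rss = sum((v - ml) ** 2 for v in left) + sum((v - mr) ** 2 for v in right)
            if best is None or rss < best[0]:
                best = (rss, Stump(j, s, ml, mr))
    if best is None:  # every feature is constant: fall back to a constant fit
        m = mean(r)
        return Stump(0, float("inf"), m, m)
    return best[1]


def boost_stumps(X: List[List[float]], y: List[float],
                 B: int = 200, lam: float = 0.1) -> List[Stump]:
    r = list(y)                                  # step 1: f_hat = 0, r_i = y_i
    model = []
    for _ in range(B):                           # step 2
        t = fit_stump(X, r)                      # (a) fit a stump to (X, r)
        shrunk = Stump(t.feature, t.threshold, lam * t.left, lam * t.right)
        model.append(shrunk)                     # (b) f_hat += lam * f_b, eq. (8.10)
        r = [ri - shrunk.predict(xi) for xi, ri in zip(X, r)]  # (c) eq. (8.11)
    return model


def predict(model: List[Stump], x: Sequence[float]) -> float:
    """Eq. (8.12): f_hat(x) is the sum of the shrunken stumps."""
    return sum(t.predict(x) for t in model)


def component(model: List[Stump], j: int, xj: float) -> float:
    """f_j(x_j): the sum of the shrunken stumps that split on variable j."""
    return sum(t.left if xj <= t.threshold else t.right
               for t in model if t.feature == j)


# Toy data: y depends on x0 linearly and on x1 through a step.
X = [[float(a), float(b)] for a in range(5) for b in range(5)]
y = [2.0 * a + (1.0 if b > 2 else 0.0) for a, b in X]
model = boost_stumps(X, y)

# Additivity: f_hat(x) should equal f_0(x_0) + f_1(x_1) up to rounding.
gap = max(abs(predict(model, x) - (component(model, 0, x[0]) + component(model, 1, x[1])))
          for x in X)
print(f"largest gap between f_hat(x) and f_0(x_0) + f_1(x_1): {gap:.2e}")
```

With $d \ge 2$ a single tree can split on $X_1$ and then on $X_2$ along one path, so its output depends on both variables jointly and the regrouping no longer produces functions of one variable each; that is why the additive form is specific to stumps.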

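For Exercise 3, write $p = \hat{p}_{m1}$ and use the hint $\hat{p}_{m2} = 1 - p$. The Gini index $\sum_k \hat{p}_{mk}(1 - \hat{p}_{mk})$ then becomes $2p(1 - p)$, the classification error $1 - \max_k \hat{p}_{mk}$ becomes $1 - \max(p, 1 - p)$, and the entropy becomes $-p \log p - (1 - p)\log(1 - p)$. The sketch below tabulates the three curves in Python rather than R (a substitution on our part); the function names are hypothetical, and the natural log is an assumption, since the exercise does not fix the base.

```python
# Two-class node impurity measures as functions of p = p_hat_m1.
# Assumption: entropy uses the natural log; the exercise does not fix the base.
import math
from typing import List, Tuple


def gini(p: float) -> float:
    # G = sum_k p_k (1 - p_k) with p_m2 = 1 - p, which simplifies to 2p(1 - p)
    return 2.0 * p * (1.0 - p)


def entropy(p: float) -> float:
    # D = -sum_k p_k log p_k, with 0 * log 0 taken as 0
    return sum(-q * math.log(q) for q in (p, 1.0 - p) if q > 0.0)


def class_error(p: float) -> float:
    # E = 1 - max_k p_k
    return 1.0 - max(p, 1.0 - p)


def impurity_table(n: int = 101) -> List[Tuple[float, float, float, float]]:
    """Rows (p, Gini, classification error, entropy) on an even grid over [0, 1]."""
    rows = []
    for i in range(n):
        p = i / (n - 1)
        rows.append((p, gini(p), class_error(p), entropy(p)))
    return rows


for p, g, e, d in impurity_table(5):
    print(f"p={p:.2f}  gini={g:.3f}  error={e:.3f}  entropy={d:.3f}")
```

All three curves are 0 at $p = 0$ and $p = 1$ and peak at $p = 0.5$. To draw the single requested plot, each column of `impurity_table()` can be passed to a plotting library such as matplotlib (one `matplotlib.pyplot.plot` call per curve); in R, `curve()` or `plot()` followed by `lines()` does the same job.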