Question: Please explain in detail; I'm having trouble understanding this problem. Thank you.

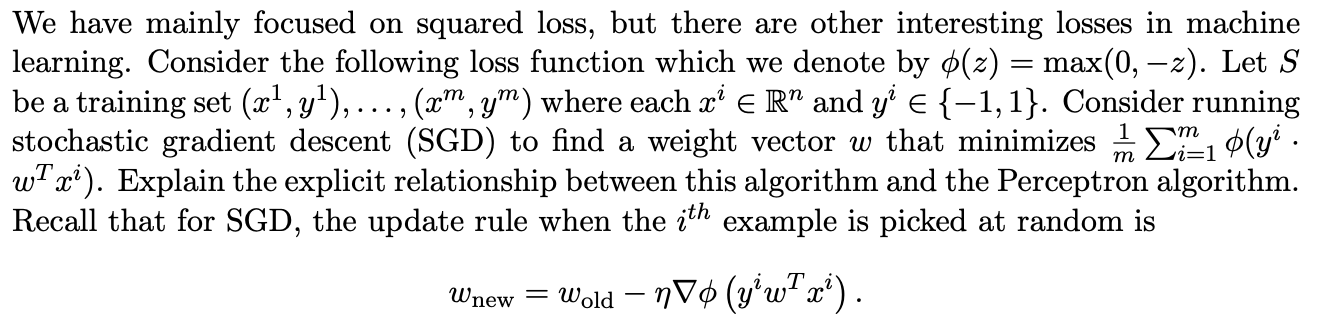

We have mainly focused on squared loss, but there are other interesting losses in machine learning. Consider the following loss function, which we denote by $\phi(z) = \max(0, -z)$. Let $S$ be a training set $(x^1, y^1), \dots, (x^m, y^m)$ where each $x^i \in \mathbb{R}^n$ and $y^i \in \{-1, 1\}$. Consider running stochastic gradient descent (SGD) to find a weight vector $w$ that minimizes

$$\frac{1}{m} \sum_{i=1}^{m} \phi\left(y^i \, w^T x^i\right).$$

Explain the explicit relationship between this algorithm and the Perceptron algorithm. Recall that for SGD, the update rule when the $i$th example is picked at random is

$$w_{\text{new}} = w_{\text{old}} - \eta \, \nabla_w \phi\left(y^i \, w^T x^i\right).$$
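One way to see the relationship is to write the SGD update out in code and compare it with the Perceptron update. The sketch below assumes the loss is $\phi(z) = \max(0, -z)$ (the form under which the comparison with the Perceptron makes sense), so a subgradient of $\phi(y^i w^T x^i)$ with respect to $w$ is $-y^i x^i$ when $y^i w^T x^i < 0$ and $0$ when $y^i w^T x^i > 0$. The function names, the synthetic data, and the choice to treat the kink at $z = 0$ as a mistake are illustrative assumptions, not part of the original problem.

```python
import numpy as np

def phi_subgradient(w, x, y):
    """A subgradient w.r.t. w of phi(y * w.x), where phi(z) = max(0, -z)."""
    z = y * np.dot(w, x)
    # phi has slope -1 for z < 0 and slope 0 for z > 0.
    # Assumption: at the kink z = 0 we pick the subgradient with slope -1,
    # so that a tie is treated like a misclassification.
    if z <= 0:
        return -y * x
    return np.zeros_like(w)

def sgd_step(w, x, y, eta=1.0):
    """One SGD step: w_new = w_old - eta * subgradient."""
    return w - eta * phi_subgradient(w, x, y)

def perceptron_step(w, x, y):
    """Classic Perceptron step: add y * x only when the example is misclassified."""
    if y * np.dot(w, x) <= 0:
        return w + y * x
    return w

# Hypothetical, linearly separable toy data for the comparison.
rng = np.random.default_rng(0)
X = rng.normal(size=(20, 3))
w_true = np.array([1.0, -2.0, 0.5])
Y = np.where(X @ w_true >= 0, 1.0, -1.0)

w_sgd = np.zeros(3)
w_per = np.zeros(3)
# Feed both algorithms the same randomly picked examples.
for i in rng.integers(0, len(X), size=200):
    w_sgd = sgd_step(w_sgd, X[i], Y[i], eta=1.0)
    w_per = perceptron_step(w_per, X[i], Y[i])

same = np.allclose(w_sgd, w_per)
print(same)
```

Under these assumptions, SGD with step size $\eta = 1$ makes exactly the Perceptron update: it does nothing on a correctly classified example and adds $y^i x^i$ to $w$ on a mistake. For a general $\eta > 0$ and a zero initial weight vector, the SGD iterate is just $\eta$ times the Perceptron iterate, so the predictions $\text{sign}(w^T x)$ are unchanged. The remaining difference is the order of the examples: SGD picks them at random, while the Perceptron is usually described as cycling through the training set.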
