Question: PLEASE HELP IN PYTHON Bagging and boosting are considered ensemble methods of machine learning. Ensemble is a concept in which multiple models, also called decision

PLEASE HELP IN PYTHON

Bagging and boosting are considered ensemble methods of machine learning. Ensemble is a concept in which multiple models, also called decision trees, are trained using the same learning algorithm to produce a better predictive performance than using a single decision tree. The ensembles take part in a bigger group of methods, called multiclassifiers, where a set of hundreds or thousands of learners with a common objective are fused together to solve the problem.

For this given code, you implement either a bagging or a boosting version to solve the problem of a pharmaceutical drug analysis. Fill out the missing code sections titled "Write Your Code Here". One section has an error stating: "DataFrame.drop takes from to positional arguments but were given". There is an excel file provided for this code. Also, try to explain each section of the code on how it works if you can. Thank you.

import pandas as pd

import seaborn as sns #for plotting

from sklearn.ensemble import RandomForestClassifier #for the model

from sklearn.tree import DecisionTreeClassifier

from sklearn.tree import exportgraphviz #plot tree

from sklearn.modelselection import traintestsplit #for data splitting

nprandom.seed #ensure reproducibility

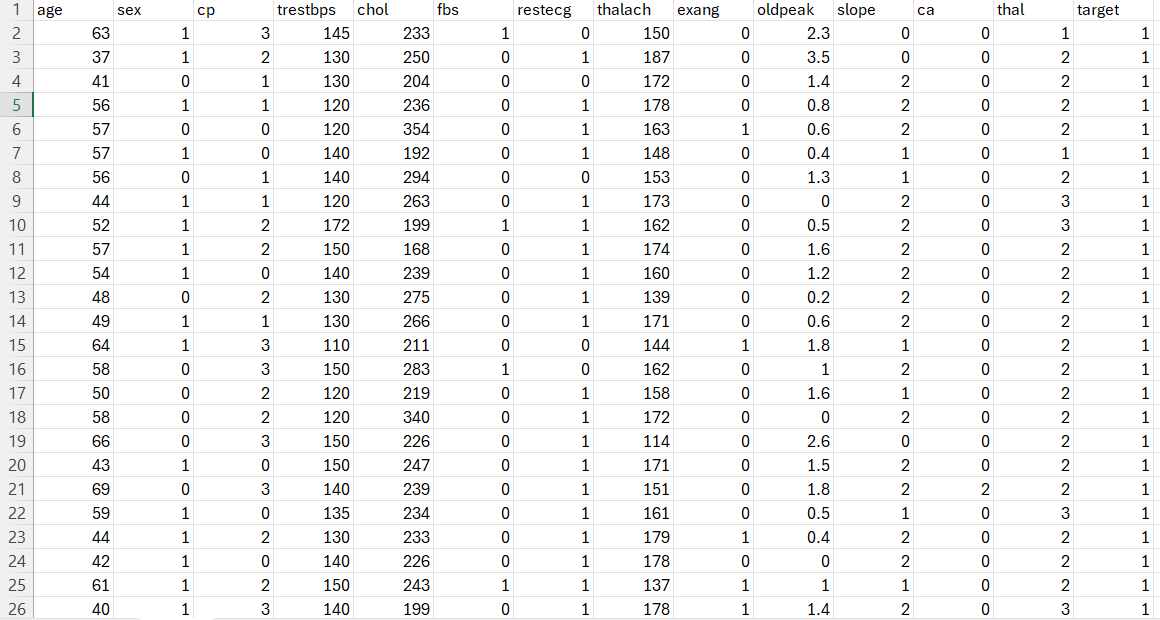

#Let's load the data. This is clinical data to predict wether a patient is likely to suufer a heart attack or not For each patient in total variables have been taken into account for the prediction. We will use all these data to train a randon forest as a predictor for heart attacks:

df pdreadcsvheartcsv

printdfshape

dfhead

#Let's have a better look at the data visualization:

Xnparraydfdfcolumns:

fa pltsubplots

fsetfigheight

fsetfigwidth

a aravel

for idx,ax in enumeratea:

if idx:

axhistlistX:idxcolorr

axsettitledfcolumnsidx

plttightlayout

#Now, let's train our random forest. Not all available data will be used for training, some will be used for testing :

Xtrain, Xtest, ytrain, ytest traintestsplitdfdroptarget dftarget testsize randomstate

model RandomForestClassifiermaxdepth

model.fitXtrain, ytrain

#There is a type error on this sections stating: DataFrame.drop takes from to positional arguments but were given

#Now, let's predict the class likely to have an attak or not for our testing data of patients, using our trained random forest:

ypredict model.predictXtest

#These are the predicted labels by the random forest:

ypredict

#and these are the actual labels of the testing data:

ytestnparraylistytest

ytest

#Let's see the difference between the predicted and the actual labels. See that, out of predictions, the random forest mistook :

sumabsypredict ytest

#Now, for boosting, let's train five random forests:

#Write your code here

let's see the predictions for each random forest:

#Write your code here

#Now, our final prediction will be an average of the predictions of the five random forests. If more than two random forests say that the label must be then, accordingly, the final prediction will be and otherwise:

#Write your code here

#See that our final averaged predictions have only mistakes, this is the boosting does improve indeed the predictions of our initial single model mistakes:

sumabsypredict nparrayfinal

Step by Step Solution

There are 3 Steps involved in it

1 Expert Approved Answer

Step: 1 Unlock

Question Has Been Solved by an Expert!

Get step-by-step solutions from verified subject matter experts

Step: 2 Unlock

Step: 3 Unlock