Please help me with these 2 multiple-choice questions :D

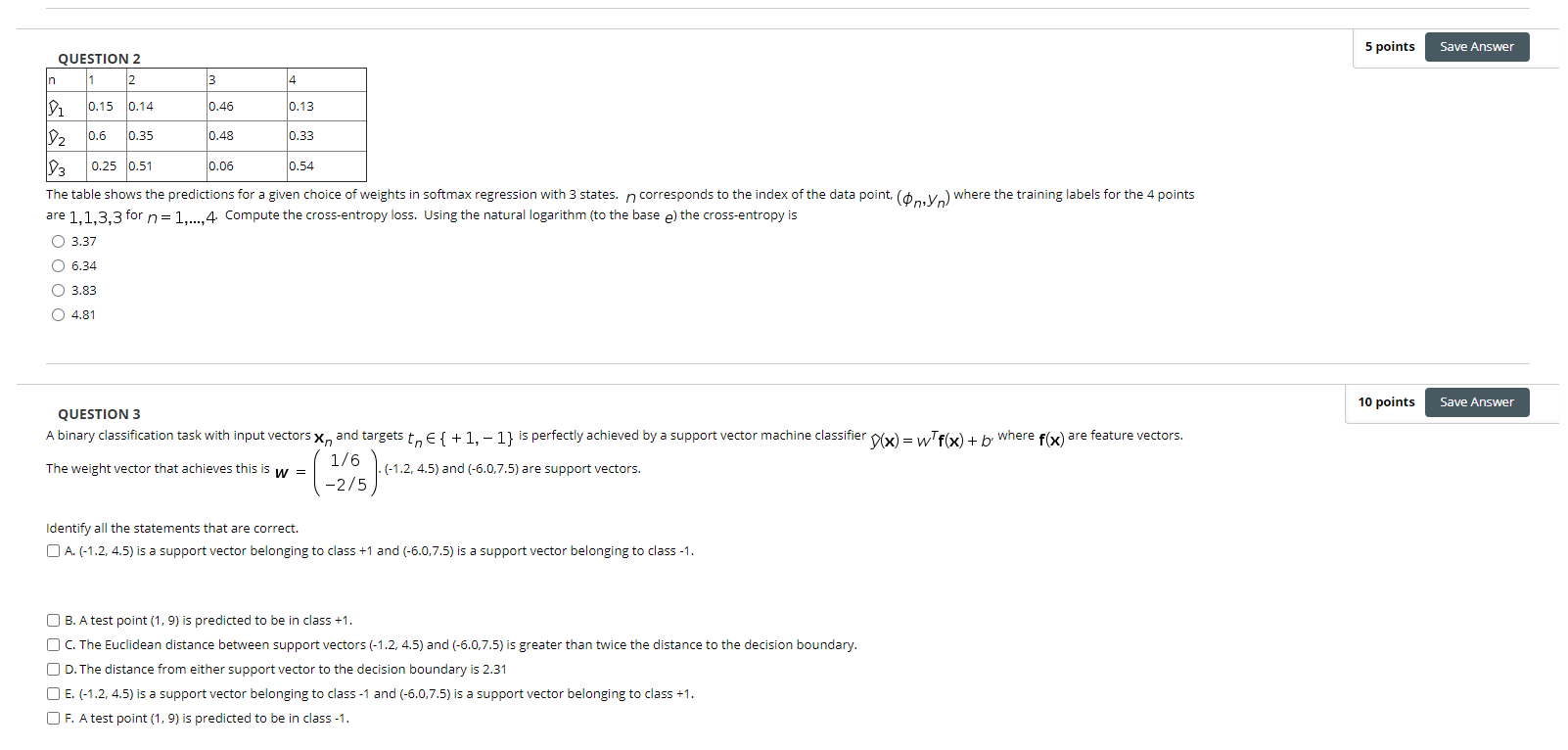

QUESTION 2 (5 points)

| | n = 1 | n = 2 | n = 3 | n = 4 |
|---|---|---|---|---|
| D1 | 0.14 | 0.15 | 0.46 | 0.13 |
| D2 | 0.6 | 0.35 | 0.48 | 0.33 |
| D3 | 0.25 | 0.51 | 0.06 | 0.54 |

The table shows the predictions for a given choice of weights in softmax regression with 3 states. n is the index of the data point (on $y_n$), and the training labels for the 4 points are 1, 1, 3, 3 for n = 1, ..., 4. Compute the cross-entropy loss. Using the natural logarithm (base e), the cross-entropy is:

- 3.37
- 6.34
- 3.83
- 4.81

QUESTION 3 (10 points)

A binary classification task with input vectors $\mathbf{x}_n$ and targets $t_n \in \{+1, -1\}$ is solved perfectly by a support vector machine classifier $D(\mathbf{x}) = \mathbf{w}^\top f(\mathbf{x}) + b$, where $f(\mathbf{x})$ are feature vectors. The weight vector that achieves this is $\mathbf{w} = (1/6, -2/5)^\top$, and (-1.2, 4.5) and (-6.0, 7.5) are support vectors.

Identify all the statements that are correct.

- A. (-1.2, 4.5) is a support vector belonging to class +1 and (-6.0, 7.5) is a support vector belonging to class -1.
- B. A test point (1, 9) is predicted to be in class +1.
- C. The Euclidean distance between the support vectors (-1.2, 4.5) and (-6.0, 7.5) is greater than twice the distance to the decision boundary.
- D. The distance from either support vector to the decision boundary is 2.31.
- E. (-1.2, 4.5) is a support vector belonging to class -1 and (-6.0, 7.5) is a support vector belonging to class +1.
- F. A test point (1, 9) is predicted to be in class -1.
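
For Question 2, with one-hot targets the cross-entropy reduces to $E = -\sum_n \ln y_{n,t_n}$: minus the log of the probability predicted for each point's true class. The sketch below assumes that row Dk of the flattened table holds the predicted probability of class k (so D1 = 0.14, 0.15, 0.46, 0.13), that labels are 1-based class indices, and that the loss is summed rather than averaged over the 4 points. The names `probs`, `labels` and `cross_entropy` are illustrative only.

```python
import math

# Predicted class probabilities as read from the table (assumed reading):
# probs[k][n] is the probability of class k+1 for data point n+1.
probs = [
    [0.14, 0.15, 0.46, 0.13],  # D1
    [0.6, 0.35, 0.48, 0.33],   # D2
    [0.25, 0.51, 0.06, 0.54],  # D3
]
labels = [1, 1, 3, 3]  # training labels for n = 1..4 (1-based class indices)


def cross_entropy(probs, labels):
    """Summed cross-entropy: sum over points of -ln(prob of the true class)."""
    loss = 0.0
    for n, label in enumerate(labels):
        # With one-hot targets, only the true-class term -t_nk * ln(y_nk) is non-zero.
        loss -= math.log(probs[label - 1][n])
    return loss


loss = cross_entropy(probs, labels)
print(f"cross-entropy = {loss:.4f}")
```

Under this reading the loss is $-(\ln 0.14 + \ln 0.15 + \ln 0.06 + \ln 0.54) \approx 7.29$, which is not one of the listed options, so the table values are worth double-checking against the original image before picking an answer.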

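For Question 3, each statement can be checked numerically. This sketch assumes the weight vector is $\mathbf{w} = (1/6, -2/5)^\top$ (reconstructed from the stray fractions 1/6 and -2/5 in the question), that the second support vector is (-6.0, 7.5), and that $f(\mathbf{x}) = \mathbf{x}$, so the support vectors and test point are used directly as feature vectors. The bias $b$ is not given; it is derived from the fact that support vectors of opposite classes lie on $D = +1$ and $D = -1$.

```python
import math

# Assumed values reconstructed from the question text.
w = (1 / 6, -2 / 5)
sv_a = (-1.2, 4.5)
sv_b = (-6.0, 7.5)


def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))


# Opposite-class support vectors satisfy w.x + b = +1 and w.x + b = -1;
# adding the two equations gives b = -(w.sv_a + w.sv_b) / 2.
b = -(dot(w, sv_a) + dot(w, sv_b)) / 2


def decision(x):
    """Linear SVM decision function D(x) = w.x + b (identity feature map assumed)."""
    return dot(w, x) + b


norm_w = math.sqrt(dot(w, w))
margin = 1 / norm_w  # distance from either support vector to the boundary D(x) = 0
sv_distance = math.dist(sv_a, sv_b)  # Euclidean distance between the support vectors

print(f"b = {b:.4f}")
print(f"D(sv_a) = {decision(sv_a):+.4f}, D(sv_b) = {decision(sv_b):+.4f}")
print(f"D((1, 9)) = {decision((1, 9)):+.4f}")
print(f"distance to boundary = {margin:.4f}")
print(f"|sv_a - sv_b| = {sv_distance:.4f} vs 2 * margin = {2 * margin:.4f}")
```

Under these assumptions b = 3, (-1.2, 4.5) lies on D = +1 and (-6.0, 7.5) on D = -1, D((1, 9)) is about -0.43 (class -1), the margin is $1/\lVert\mathbf{w}\rVert = 30/13 \approx 2.31$, and the support vectors are about 5.66 apart, more than $2 \times 2.31 \approx 4.62$. That would support statements A, C, D and F.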