Question: Please help with question 3.

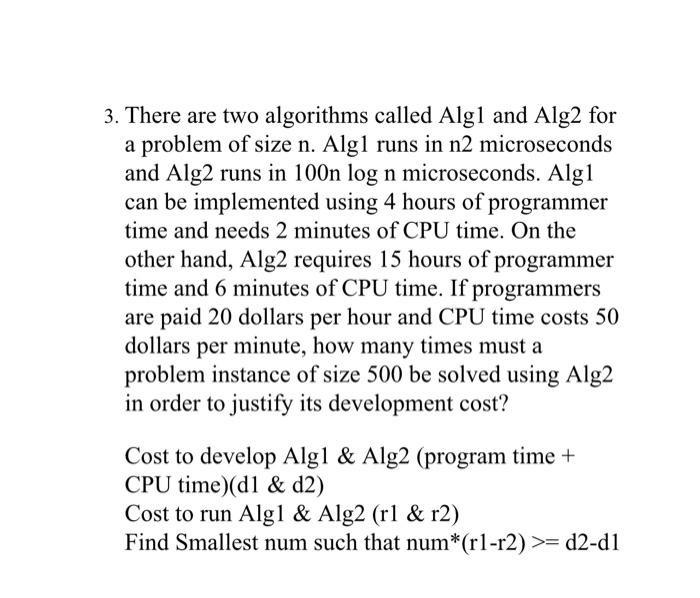

3. There are two algorithms, Alg1 and Alg2, for a problem of size $n$. Alg1 runs in $n^2$ microseconds and Alg2 runs in $100 n \log n$ microseconds. Alg1 can be implemented using 4 hours of programmer time and needs 2 minutes of CPU time. On the other hand, Alg2 requires 15 hours of programmer time and 6 minutes of CPU time. If programmers are paid 20 dollars per hour and CPU time costs 50 dollars per minute, how many times must a problem instance of size 500 be solved using Alg2 in order to justify its development cost?

Hint: Let $d_1$ and $d_2$ be the costs to develop Alg1 and Alg2 (programmer time + CPU time), and let $r_1$ and $r_2$ be the costs to run Alg1 and Alg2 once. Find the smallest $num$ such that $num \cdot (r_1 - r_2) \ge d_2 - d_1$.
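Below is a minimal Python sketch of the hint's recipe. It assumes that CPU time is the only running cost, so one run costs its runtime converted to minutes and billed at 50 dollars per minute. The problem writes "log n" without a base, so the base is left as a parameter. With a base-10 logarithm, $d_1 = 180$, $d_2 = 600$, $r_1 \approx 0.2083$ and $r_2 \approx 0.1125$ dollars, so Alg2 pays off after 4381 runs. With a base-2 logarithm, Alg2 takes about 0.448 s per run at $n = 500$ versus 0.25 s for Alg1, so it never recovers the extra 420 dollars. The names `dev_cost`, `run_cost`, and `break_even_runs` are illustrative only.

```python
import math

# Prices given in the problem statement.
PROGRAMMER_RATE = 20.0  # dollars per hour of programmer time
CPU_RATE = 50.0         # dollars per minute of CPU time


def dev_cost(programmer_hours, cpu_minutes):
    """Development cost in dollars: programmer time plus CPU time."""
    return programmer_hours * PROGRAMMER_RATE + cpu_minutes * CPU_RATE


def run_cost(microseconds):
    """Dollar cost of one run, assuming only CPU time is billed."""
    minutes = microseconds / 1e6 / 60
    return minutes * CPU_RATE


def break_even_runs(n, log=math.log10):
    """Smallest num with num * (r1 - r2) >= d2 - d1, or None if Alg2 is not cheaper per run.

    The `log` argument is an assumption: the problem does not state the base.
    """
    d1 = dev_cost(4, 2)    # Alg1: 4 h programmer time, 2 min CPU time
    d2 = dev_cost(15, 6)   # Alg2: 15 h programmer time, 6 min CPU time
    r1 = run_cost(n ** 2)             # Alg1 runs in n^2 microseconds
    r2 = run_cost(100 * n * log(n))   # Alg2 runs in 100 n log n microseconds
    saving = r1 - r2
    if saving <= 0:
        return None  # Alg2 is not faster at this n, so it can never pay off
    return math.ceil((d2 - d1) / saving)


print(break_even_runs(500, math.log10))  # base-10 log: prints 4381
print(break_even_runs(500, math.log2))   # base-2 log: prints None
```

If your course uses base-2 logarithms (common in algorithm analysis), the answer under this cost model is that no number of runs justifies Alg2 at $n = 500$.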
