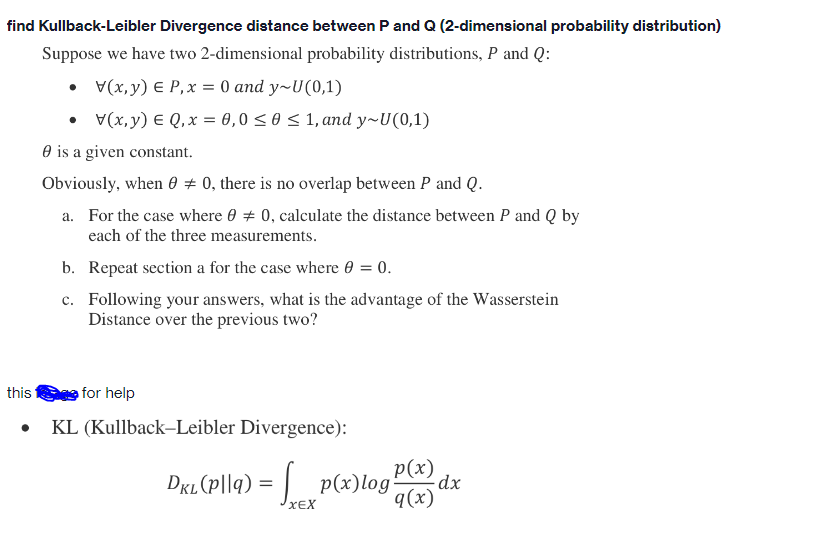

Question: Find the Kullback-Leibler divergence between P and Q (2-dimensional probability distributions)

Suppose we have two 2-dimensional probability distributions, P and Q:

$\forall (x, y) \in P$: $x = 0$ and $y \sim U(0, 1)$

$\forall (x, y) \in Q$: $x = \theta$, $0 \le \theta \le 1$, and $y \sim U(0, 1)$

$\theta$ is a given constant. Obviously, when $\theta \neq 0$, there is no overlap between P and Q.

a. For the case where $\theta \neq 0$, calculate the distance between P and Q by each of the three measurements.

b. Repeat section a for the case where $\theta = 0$.

c. Following your answers, what is the advantage of the Wasserstein Distance over the previous two?

KL (Kullback-Leibler Divergence):

$$D_{KL}(p \,\|\, q) = \int_{x \in X} p(x) \log \frac{p(x)}{q(x)} \, dx$$
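To get a feel for how the KL formula behaves in the two cases, here is a minimal sketch using a discretized analogue of the setup: P puts its mass on the line x = 0 and Q on the line x = θ, with y split into equal-probability cells. The helper names `kl_divergence` and `line_distribution`, the bin count of 10, and the sample value θ = 0.5 are illustrative assumptions, not part of the question, and the sum here stands in for the integral in the definition.

```python
import math


def kl_divergence(p, q):
    """Discrete KL divergence sum_x p(x) * log(p(x) / q(x)), in nats.

    p and q are dicts mapping outcomes to probabilities.
    Outcomes with p(x) == 0 contribute nothing; if p(x) > 0 where
    q(x) == 0, the divergence is +infinity.
    """
    total = 0.0
    for outcome, px in p.items():
        if px == 0.0:
            continue
        qx = q.get(outcome, 0.0)
        if qx == 0.0:
            return math.inf
        total += px * math.log(px / qx)
    return total


def line_distribution(x_value, n_bins):
    """Hypothetical discretization: x is fixed, y is uniform over n_bins cells."""
    return {(x_value, j): 1.0 / n_bins for j in range(n_bins)}


P = line_distribution(0.0, 10)
Q_shifted = line_distribution(0.5, 10)  # assumed example value theta = 0.5
Q_same = line_distribution(0.0, 10)     # theta = 0, so Q coincides with P

kl_shifted = kl_divergence(P, Q_shifted)
kl_same = kl_divergence(P, Q_same)
print(kl_shifted, kl_same)
```

This prints `inf 0.0`. With disjoint supports, every outcome that P can produce has zero probability under Q, so the divergence is infinite no matter how small the shift is. When the two distributions coincide, every log term is log 1 = 0.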
