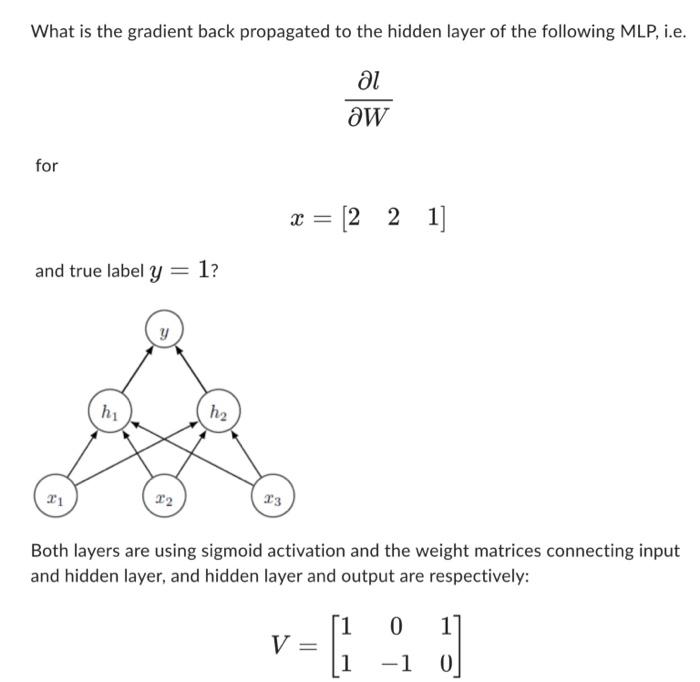

Question: What is the gradient backpropagated to the hidden layer of the following MLP, i.e. $\partial l / \partial W$, for x = [2 2 1] and true label y = 1?

![Figure for the question](https://dsd5zvtm8ll6.cloudfront.net/si.experts.images/questions/2024/09/66f4539cb223c_22066f4539c4eefd.jpg)

What is the gradient backpropagated to the hidden layer of the following MLP, i.e. $\partial l / \partial W$, for x = [2 2 1] and true label y = 1? Both layers use sigmoid activation, and the weight matrices connecting the input to the hidden layer and the hidden layer to the output are, respectively, V = [1 1 0 1 1 0] and W = [0 1] (see the figure for the row and column layout). The loss function is defined as $l(y, \hat{y}) = \frac{1}{2}(y - \hat{y})^2$, where $\hat{y}$ denotes the output of the model.

Answer options: 0.0937, 0.0286, 0.0845, 0.0443, 0.0322, 0.0294, 0.0442, 0.0397
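The chain rule for this kind of network can be sketched in a few lines of plain Python. This is a minimal sketch under stated assumptions, not a verified solution: it assumes x = [2, 2, 1], that V is a 2×3 matrix with one row per hidden unit, that W = [0, 1], that there are no bias terms, and that all entries are non-negative as written. The names `forward`, `loss`, and `backprop` are illustrative. With output pre-activation z and hidden activations h, the output error is $\delta = (\hat{y} - y)\,\hat{y}(1 - \hat{y})$, so $\partial l / \partial W = \delta\, h$ and $\partial l / \partial h = \delta\, W$.

```python
import math

def sigmoid(z):
    """Logistic sigmoid."""
    return 1.0 / (1.0 + math.exp(-z))

def forward(x, V, W):
    """Return hidden activations h and model output y_hat (no bias terms)."""
    h = [sigmoid(sum(v * xj for v, xj in zip(row, x))) for row in V]
    y_hat = sigmoid(sum(w * hi for w, hi in zip(W, h)))
    return h, y_hat

def loss(x, V, W, y):
    """Squared-error loss l = 1/2 * (y - y_hat)^2."""
    _, y_hat = forward(x, V, W)
    return 0.5 * (y - y_hat) ** 2

def backprop(x, V, W, y):
    """Gradients of the loss for a one-hidden-layer sigmoid MLP."""
    h, y_hat = forward(x, V, W)
    # dl/dz at the output: derivative of the loss times the sigmoid derivative
    delta_out = (y_hat - y) * y_hat * (1.0 - y_hat)
    # dl/dW: output error scaled by each hidden activation
    grad_W = [delta_out * hi for hi in h]
    # dl/dh: output error sent back through the output weights
    grad_h = [delta_out * w for w in W]
    # dl/d(hidden pre-activation): multiply by the hidden sigmoid derivative
    delta_hidden = [g * hi * (1.0 - hi) for g, hi in zip(grad_h, h)]
    # dl/dV: hidden error times the inputs, one row per hidden unit
    grad_V = [[d * xj for xj in x] for d in delta_hidden]
    return {"grad_W": grad_W, "grad_h": grad_h, "grad_V": grad_V}

# ASSUMED values: the matrix layout and signs are a guess, see the note above.
x = [2.0, 2.0, 1.0]
V = [[1.0, 1.0, 0.0], [1.0, 1.0, 0.0]]
W = [0.0, 1.0]
y = 1.0

grads = backprop(x, V, W, y)
print("dl/dW:", grads["grad_W"])
print("dl/dh:", grads["grad_h"])
```

If the figure uses a different layout or different signs for V, W, or x, only the assumed values at the bottom need to change. The gradient functions themselves do not depend on them.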
