Question: Positional encoding. Consider the sentence "I love Machine Learning". Here, we treat each word as a token, where "I" has an index value of 1

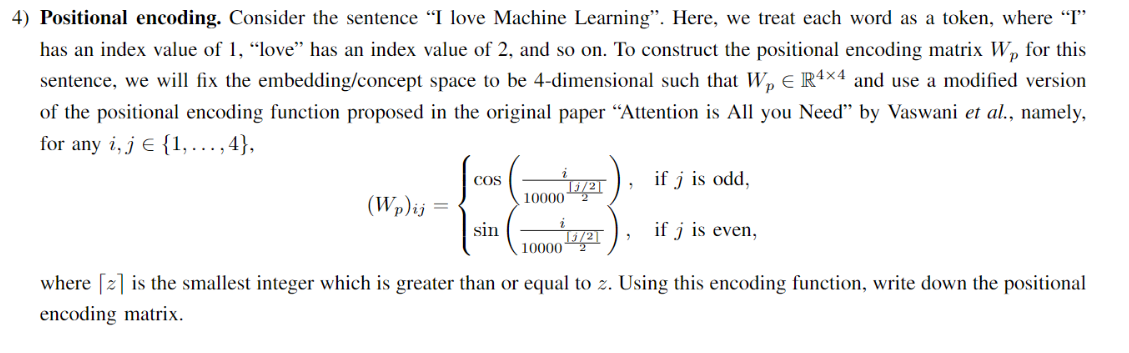

Positional encoding. Consider the sentence "I love Machine Learning". Here, we treat each word as a token, where "I" has an index value of 1, "love" has an index value of 2, and so on. To construct the positional encoding matrix for this sentence, we will fix the embedding/concept space to be […]-dimensional such that […], and use a modified version of the positional encoding function proposed in the original paper "Attention Is All You Need" by Vaswani et al., namely,

[…]

for any $j \in \{\dots\}$, where $\lceil x \rceil$ is the smallest integer greater than or equal to $x$. Using this encoding function, write down the positional encoding matrix.
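
The modified encoding function and the embedding dimension do not survive in the question text, so the sketch below does not attempt the question's version. As a point of comparison only, it builds the matrix for the standard sinusoidal encoding from the cited paper, PE(pos, 2i) = sin(pos / 10000^(2i/d)) and PE(pos, 2i+1) = cos(pos / 10000^(2i/d)). The function name `sinusoidal_positional_encoding`, the dimension `d_model=4`, and the `first_index` parameter are assumptions made for illustration. The only detail taken from the question is that token positions start at 1.

```python
import math


def sinusoidal_positional_encoding(num_positions, d_model, first_index=0, base=10000.0):
    """Standard (unmodified) sinusoidal encoding from Vaswani et al.

    Even columns hold sin(pos / base**(2i/d_model)); odd columns hold the
    cosine of the same angle, where i = column // 2.
    """
    matrix = []
    for pos in range(first_index, first_index + num_positions):
        row = []
        for j in range(d_model):
            i = j // 2  # index of the sin/cos pair this column belongs to
            angle = pos / base ** (2 * i / d_model)
            row.append(math.sin(angle) if j % 2 == 0 else math.cos(angle))
        matrix.append(row)
    return matrix


tokens = ["I", "love", "Machine", "Learning"]

# d_model=4 is an assumed value, not the one from the question.
# first_index=1 follows the question's convention that "I" has index 1.
pe = sinusoidal_positional_encoding(len(tokens), d_model=4, first_index=1)

for token, row in zip(tokens, pe):
    print(f"{token:>8}: " + "  ".join(f"{value:+.4f}" for value in row))
```

Each row of `pe` is the encoding of one token, so the matrix has one row per word and one column per embedding dimension. Adapting the sketch to the question's modified function would mean replacing the `angle` computation with that function, including its ceiling term.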
