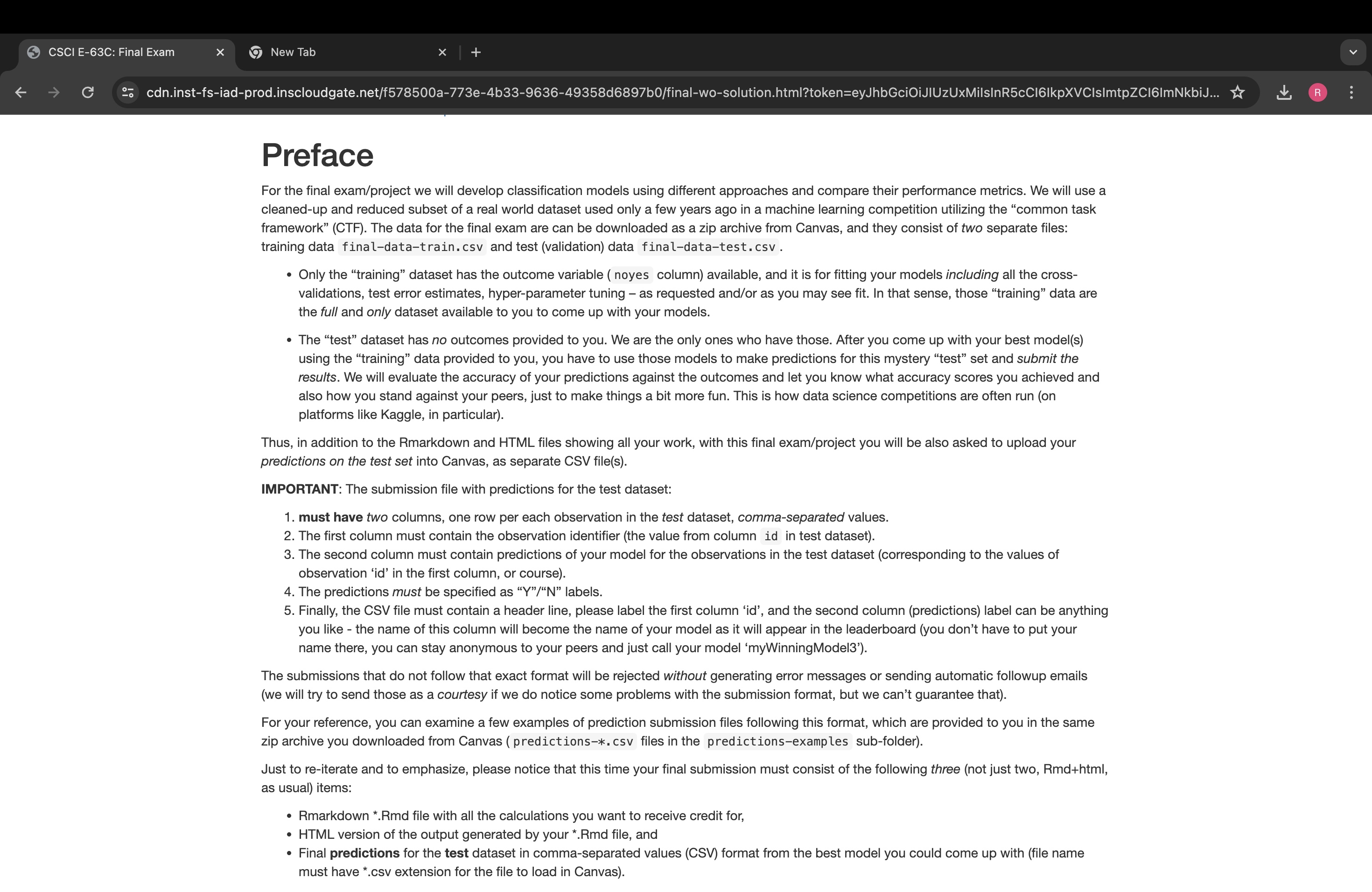

Preface

For the final exam/project we will develop classification models using different approaches and compare their performance metrics. We will use a cleaned-up and reduced subset of a real-world dataset used only a few years ago in a machine learning competition utilizing the "common task framework" (CTF). The data for the final exam can be downloaded as a zip archive from Canvas, and they consist of two separate files: training data (finaldatatrain.csv) and test/validation data (finaldatatest.csv).

Only the "training" dataset has the outcome variable (the no/yes column) available, and it is for fitting your models, including all the cross-validations, test error estimates, and hyperparameter tuning, as requested and/or as you see fit. In that sense, the "training" data are the full and only dataset available to you for coming up with your models.
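The assignment itself is done in Rmarkdown, so the following is only a language-neutral sketch (Python, standard library only, with hypothetical function names) of what estimating test error by k-fold cross-validation on the training data involves: shuffle the rows into k folds, fit on k-1 folds, score accuracy on the held-out fold, and average the k scores. `fit_majority` is a stand-in baseline for illustration, not a model from the assignment.

```python
import random
from collections import Counter
from typing import Callable, List, Sequence


def kfold_indices(n: int, k: int, seed: int = 0) -> List[List[int]]:
    """Shuffle row indices 0..n-1 and deal them into k roughly equal folds."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]


def accuracy(truth: Sequence[str], pred: Sequence[str]) -> float:
    """Fraction of predicted labels that equal the true labels."""
    return sum(t == p for t, p in zip(truth, pred)) / len(truth)


def cv_accuracy(X, y, fit: Callable, k: int = 5, seed: int = 0) -> float:
    """Mean held-out accuracy over k folds.

    fit(X_train, y_train) must return a function that maps a list of rows to labels.
    """
    scores = []
    for held_out in kfold_indices(len(y), k, seed):
        held = set(held_out)
        train = [i for i in range(len(y)) if i not in held]
        model = fit([X[i] for i in train], [y[i] for i in train])
        preds = model([X[i] for i in held_out])
        scores.append(accuracy([y[i] for i in held_out], preds))
    return sum(scores) / len(scores)


def fit_majority(X_train, y_train):
    """Hypothetical baseline: always predict the most common training label."""
    label = Counter(y_train).most_common(1)[0][0]
    return lambda rows: [label] * len(rows)


if __name__ == "__main__":
    X = [[i] for i in range(10)]      # toy feature rows
    y = ["N"] * 7 + ["Y"] * 3         # toy Y/N outcomes
    print(cv_accuracy(X, y, fit_majority, k=5))  # 0.7
```

On the toy labels (7 N, 3 Y) the baseline always predicts N and scores 0.7, so any model worth submitting ought to beat that figure in cross-validation.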

The "test" dataset has no outcomes provided to you; we are the only ones who have those. After you come up with your best models using the "training" data, you have to use those models to make predictions for this mystery "test" set and submit the results. We will evaluate the accuracy of your predictions against the outcomes and let you know what accuracy scores you achieved and how you stand against your peers, just to make things a bit more fun. This is how data science competitions are often run, on platforms like Kaggle in particular.

Thus, in addition to the Rmarkdown and HTML files showing all your work, for this final exam/project you will also be asked to upload your predictions on the test set into Canvas as separate CSV files.

IMPORTANT: The submission file with predictions for the test dataset:

must have two columns, one row per observation in the test dataset, as comma-separated values.

The first column must contain the observation identifier (the value from column id in the test dataset).

The second column must contain your model's predictions for the observations in the test dataset, corresponding, of course, to the observation ids in the first column.

The predictions must be specified as Y/N labels.

Finally, the CSV file must contain a header line. Please label the first column id; the label of the second column (predictions) can be anything you like. The name of this column will become the name of your model as it will appear in the leaderboard. You don't have to put your name there; you can stay anonymous to your peers and just call your model 'myWinningModel'.
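A minimal sketch of producing a file in the format described above, in Python with only the standard library; `write_submission`, the example ids, and the default column name are hypothetical. It refuses to write anything that is not exactly one Y/N label per id.

```python
import csv
from typing import Sequence


def write_submission(path: str, ids: Sequence, labels: Sequence[str],
                     model_name: str = "myWinningModel") -> None:
    """Write header 'id,<model_name>' followed by one 'id,label' row per test observation."""
    if len(ids) != len(labels):
        raise ValueError("need exactly one prediction per test observation")
    bad = {lab for lab in labels if lab not in ("Y", "N")}
    if bad:
        raise ValueError(f"predictions must be Y/N labels, got {sorted(map(str, bad))}")
    # newline="" lets the csv module control line endings; "\n" gives plain Unix lines.
    with open(path, "w", newline="") as f:
        writer = csv.writer(f, lineterminator="\n")
        writer.writerow(["id", model_name])
        writer.writerows(zip(ids, labels))


if __name__ == "__main__":
    # Hypothetical ids; in practice use the id column of finaldatatest.csv.
    write_submission("predictions.csv", [101, 102, 103], ["N", "Y", "N"])
    # predictions.csv now contains:
    # id,myWinningModel
    # 101,N
    # 102,Y
    # 103,N
```

In R the equivalent is usually a `write.csv()` call with `row.names = FALSE`, which stops R from adding an unnamed row-number column that would break the two-column requirement.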

Submissions that do not follow that exact format will be rejected without generating error messages or sending automatic follow-up emails (we will try to send those as a courtesy if we do notice problems with the submission format, but we can't guarantee that).
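Since malformed files may be rejected without notice, a quick self-check before uploading is cheap insurance. The sketch below (Python, hypothetical helper name `check_submission`) re-reads a finished file and reports violations of the rules listed above: a header whose first column is id, exactly two columns per row, Y/N labels, and ids that match the test set one-to-one. It assumes you pass in the id values read from finaldatatest.csv and compares them as strings.

```python
import csv
from typing import List, Sequence


def check_submission(path: str, test_ids: Sequence) -> List[str]:
    """Return a list of format problems; an empty list means the file looks OK."""
    problems = []
    with open(path, newline="") as f:
        rows = list(csv.reader(f))
    if not rows:
        return ["file is empty"]
    header, body = rows[0], rows[1:]
    if len(header) != 2 or header[0] != "id":
        problems.append(f"header must be 'id,<model name>', got {header}")
    # Row 1 is the header, so data rows are numbered from 2.
    for n, row in enumerate(body, start=2):
        if len(row) != 2:
            problems.append(f"row {n}: expected 2 columns, got {len(row)}")
        elif row[1] not in ("Y", "N"):
            problems.append(f"row {n}: prediction {row[1]!r} is not Y or N")
    # Sorted comparison catches missing, extra, and duplicated ids.
    ids = [row[0] for row in body if row]
    if sorted(ids) != sorted(str(i) for i in test_ids):
        problems.append("ids do not match the test dataset one-to-one")
    return problems
```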

For your reference, you can examine a few examples of prediction submission files following this format, which are provided to you in the same zip archive you downloaded from Canvas (prediction CSV files in the predictionsexamples subfolder).

Just to reiterate and to emphasize, please notice that this time your final submission must consist of the following three items (not just two, Rmd/HTML, as usual):

Rmarkdown (Rmd) file with all the calculations you want to receive credit for,

HTML version of the output generated by your Rmd file, and

Final predictions for the test dataset in comma-separated values (CSV) format from the best model you could come up with (the file name must have a .csv extension for the file to load in Canvas).
