Question:

Previous code:

```python
import numpy as np

# Some settings.
learning_rate = 0.0001
iterations = 10000
losses = []

# Gradient descent algorithm for linear SVM classifier.
# Step 1. Initialize the parameters W, b.
W = np.zeros(X_train.shape[1])  # one weight per input feature
b = 0
C = ...  # SVM regularization hyperparameter

for i in range(iterations):
    # Step 2. Compute the partial derivatives.
    grad_W, grad_b = grad_L_W_b(X_train, Y_train, W, b, C)

    # Step 3. Update the parameters.
    W = W - learning_rate * grad_W
    b = b - learning_rate * grad_b

    # Track the training losses.
    losses.append(L_W_b(X_train, Y_train, W, b, C))
```
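The loop above calls `L_W_b` and `grad_L_W_b`, which the notebook defines in an earlier cell that is not part of this excerpt. As a minimal sketch, assuming the standard soft-margin objective `L(W, b) = 0.5 * ||W||^2 + C * sum_i max(0, 1 - y_i * (W·x_i + b))` with labels `y_i` in {-1, +1}, hypothetical stand-ins could look like this. The notebook's own versions may scale the terms differently (for example, averaging the hinge losses instead of summing them).

```python
import numpy as np


def L_W_b(X, Y, W, b, C):
    """Hypothetical soft-margin linear SVM objective.

    X: (n, d) features, Y: (n,) labels in {-1, +1}, W: (d,) weights, b: bias.
    """
    margins = Y * (X @ W + b)               # y_i * (W·x_i + b) for every sample
    hinge = np.maximum(0.0, 1.0 - margins)  # per-sample hinge loss
    return 0.5 * np.dot(W, W) + C * np.sum(hinge)


def grad_L_W_b(X, Y, W, b, C):
    """Hypothetical subgradient of L_W_b with respect to W and b."""
    margins = Y * (X @ W + b)
    active = margins < 1                    # samples inside the margin or misclassified
    grad_W = W - C * (Y[active] @ X[active])
    grad_b = -C * np.sum(Y[active])
    return grad_W, grad_b
```

Because the hinge term is not differentiable where a margin equals exactly 1, `grad_L_W_b` returns a subgradient; treating those boundary samples as inactive (`margins < 1`) is one common convention.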


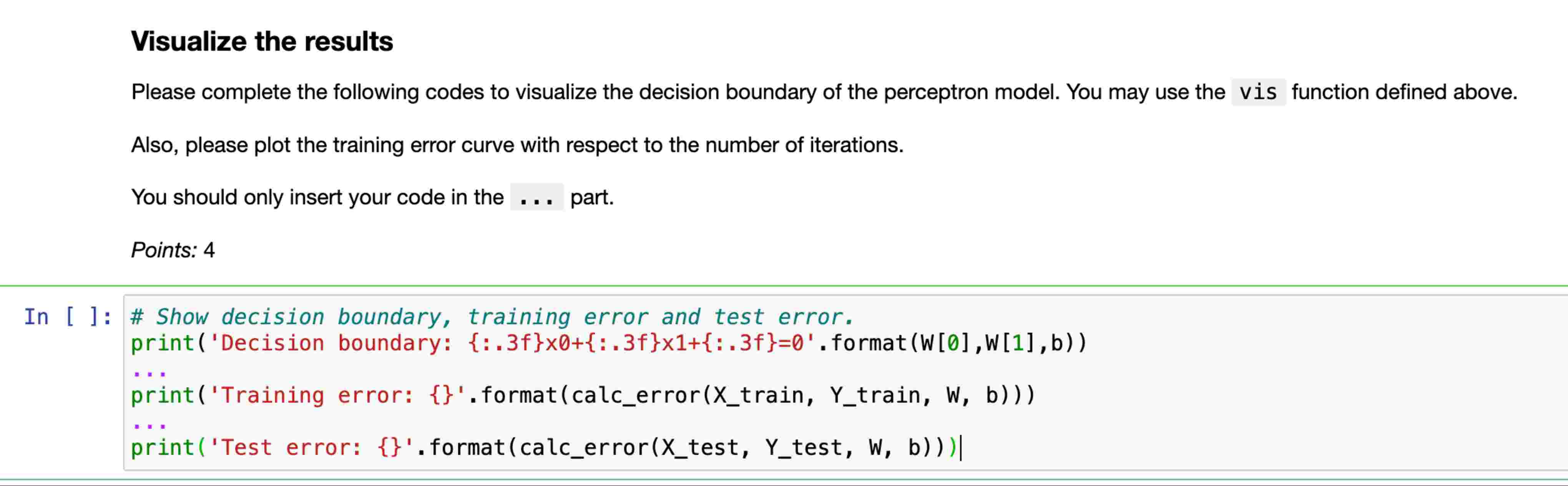

Visualize the results

Please complete the following code to visualize the decision boundary of the trained linear SVM model. You may use the `vis` function defined above.

Also, please plot the training error curve with respect to the number of iterations.

You should only insert your code in the designated part of the cell.
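The signature of `vis` is not shown in this excerpt, so the sketch below plots directly with matplotlib instead. `boundary_points` and `plot_results` are hypothetical helpers, and the sketch assumes two input features and labels in {-1, +1}. The decision boundary is the line `W[0]*x0 + W[1]*x1 + b = 0`, solved for `x1`. Note that the training loop records the loss `L_W_b`, not the classification error, so plotting `losses` gives a training-loss curve; a per-iteration training-error curve would additionally require appending `calc_error(X_train, Y_train, W, b)` inside the loop.

```python
import numpy as np


def boundary_points(W, b, x0_min, x0_max, num=100):
    """Sample the line W[0]*x0 + W[1]*x1 + b = 0 over [x0_min, x0_max].

    Assumes two features and W[1] != 0 (a non-vertical boundary).
    """
    x0 = np.linspace(x0_min, x0_max, num)
    x1 = -(W[0] * x0 + b) / W[1]  # solve the boundary equation for x1
    return x0, x1


def plot_results(X, Y, W, b, losses):
    """Hypothetical helper: data plus decision boundary (left), loss curve (right).

    Assumes X has shape (n, 2) and Y is a 1-D array of labels in {-1, +1}.
    """
    import matplotlib.pyplot as plt  # local import so boundary_points needs only NumPy

    fig, (ax_b, ax_l) = plt.subplots(1, 2, figsize=(10, 4))

    # Left panel: training points grouped by label, plus the learned boundary.
    for label, marker in [(-1, 'o'), (1, 'x')]:
        mask = Y == label
        ax_b.scatter(X[mask, 0], X[mask, 1], marker=marker, label=f'y = {label}')
    x0, x1 = boundary_points(W, b, X[:, 0].min(), X[:, 0].max())
    ax_b.plot(x0, x1, 'k-', label='decision boundary')
    ax_b.set_xlabel('x0')
    ax_b.set_ylabel('x1')
    ax_b.legend()

    # Right panel: one loss value per gradient-descent iteration.
    ax_l.plot(np.arange(1, len(losses) + 1), losses)
    ax_l.set_xlabel('iteration')
    ax_l.set_ylabel('training loss')

    fig.tight_layout()
    plt.show()
```

After the training loop has run, `plot_results(X_train, Y_train, W, b, losses)` would draw both panels.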

Points:

```python
# Show decision boundary, training error and test error.
print('Decision boundary: {:.3f}*x0 + {:.3f}*x1 + {:.3f} = 0'.format(W[0], W[1], b))
print('Training error: {}'.format(calc_error(X_train, Y_train, W, b)))
print('Test error: {}'.format(calc_error(X_test, Y_test, W, b)))
```
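The error lines above call `calc_error`, which is also defined in an earlier notebook cell not shown here. A minimal hypothetical version, assuming labels in {-1, +1} and the prediction rule `sign(W·x + b)`, returns the fraction of misclassified samples:

```python
import numpy as np


def calc_error(X, Y, W, b):
    """Hypothetical: fraction of samples whose predicted label differs from Y.

    Assumes Y holds labels in {-1, +1}; points exactly on the boundary are predicted +1.
    """
    predictions = np.where(X @ W + b >= 0, 1, -1)
    return np.mean(predictions != Y)
```

Whether boundary points count as +1 or -1 is a convention; the notebook's version may differ.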
