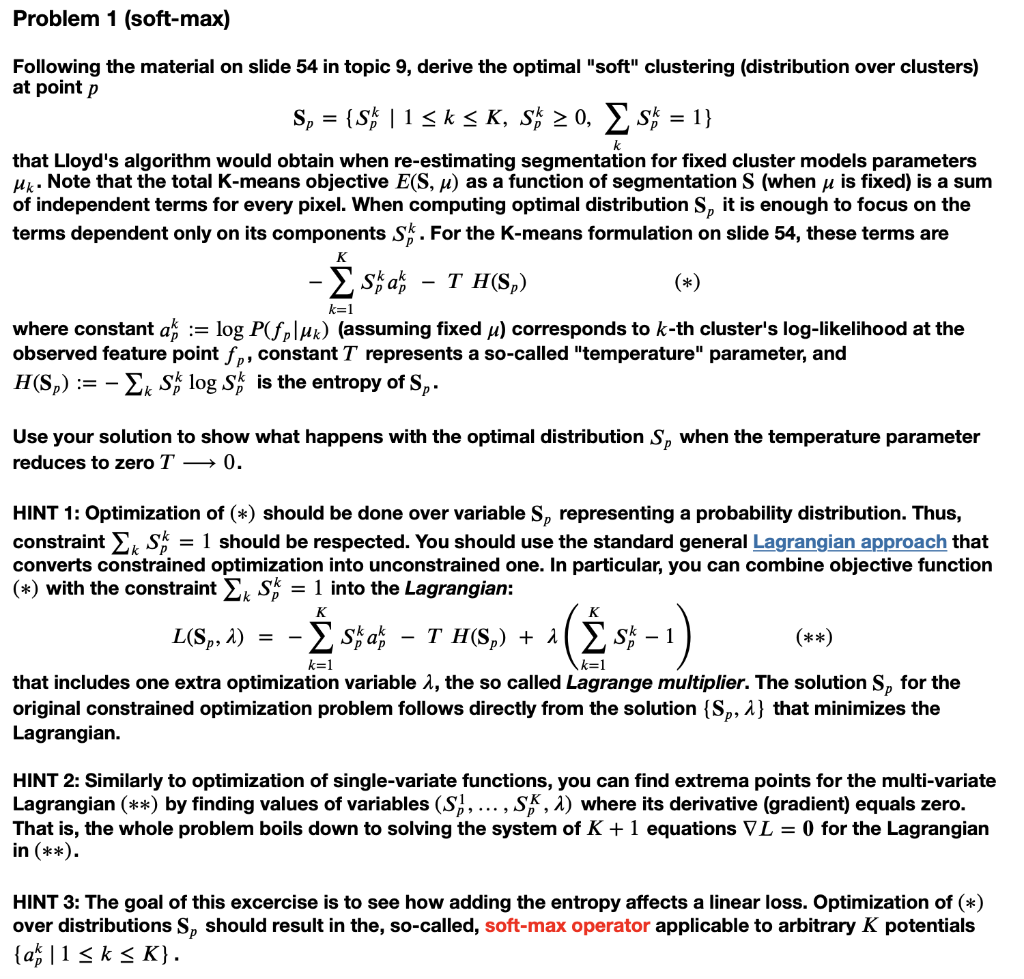

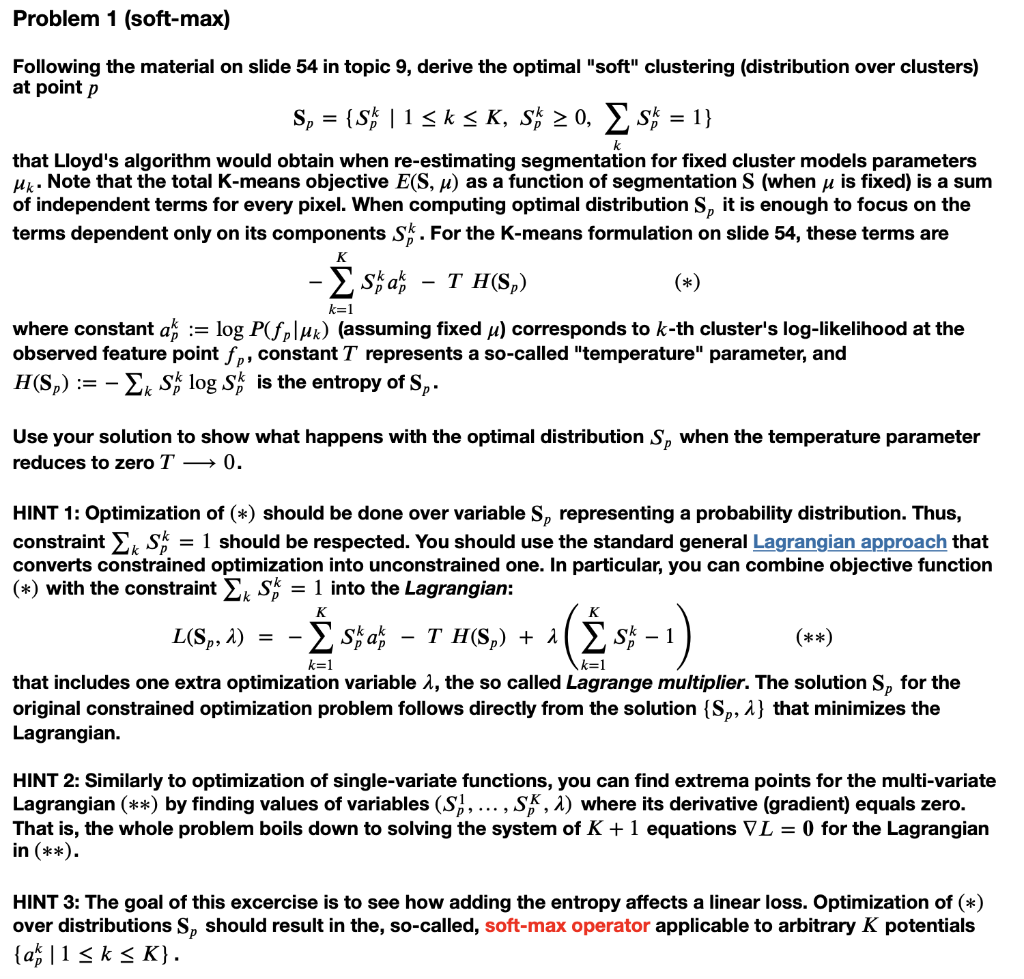

Problem 1 (soft-max). Following the material on slide 54 in topic 9, derive the optimal "soft" clustering (distribution over clusters) at point $p$,

$$S_p = \{\, S_p^k \mid 1 \le k \le K,\; S_p^k \ge 0,\; \textstyle\sum_k S_p^k = 1 \,\},$$

that Lloyd's algorithm would obtain when re-estimating the segmentation for fixed cluster model parameters $\mu_k$. Note that the total K-means objective $E(S, \mu)$, as a function of the segmentation $S$ (with $\mu$ fixed), is a sum of independent terms, one for every pixel. When computing the optimal distribution $S_p$, it is therefore enough to focus on the terms that depend only on its components $S_p^k$. For the K-means formulation on slide 54, these terms are

$$-\sum_{k=1}^{K} a_k S_p^k \;-\; T\,H(S_p) \qquad (*)$$

where the constant $a_k := \log P(f_p \mid \mu_k)$ (assuming fixed $\mu$) is the $k$-th cluster's log-likelihood at the observed feature point $f_p$, the constant $T$ is a so-called "temperature" parameter, and $H(S_p) := -\sum_k S_p^k \log S_p^k$ is the entropy of $S_p$.

Use your solution to show what happens to the optimal distribution $S_p$ as the temperature parameter goes to zero, $T \to 0$.

HINT 1: Optimization of (*) should be done over the variable $S_p$, which represents a probability distribution. Thus, the constraint $\sum_k S_p^k = 1$ must be respected. You should use the standard general Lagrangian approach, which converts a constrained optimization problem into an unconstrained one. In particular, you can combine the objective function (*) with the constraint $\sum_k S_p^k = 1$ into the Lagrangian

$$L(S_p, \lambda) = -\sum_{k=1}^{K} a_k S_p^k \;-\; T\,H(S_p) \;+\; \lambda\Big(\sum_{k=1}^{K} S_p^k - 1\Big) \qquad (**)$$

which includes one extra optimization variable $\lambda$, the so-called Lagrange multiplier. The solution $S_p$ of the original constrained optimization problem follows directly from the solution $\{S_p, \lambda\}$ that minimizes the Lagrangian.

HINT 2: Similarly to the optimization of single-variable functions, you can find extremum points of the multivariate Lagrangian (**) by finding the values of the variables $(S_p^1, \dots, S_p^K, \lambda)$ at which its derivative (gradient) equals zero. That is, the whole problem boils down to solving the system of $K+1$ equations $\nabla L = 0$ for the Lagrangian in (**).
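To make HINT 1 and HINT 2 concrete, here is a minimal NumPy sketch that evaluates the objective (*) and the Lagrangian (**) and writes out the $K+1$ components of $\nabla L$, then checks them against central finite differences. The potentials $a_k$ are hypothetical numbers chosen only for illustration, and the function names `objective`, `lagrangian`, and `lagrangian_grad` are our own, not part of the course material.

```python
import numpy as np

def entropy(s):
    """H(S_p) = -sum_k S_p^k log S_p^k, using the convention 0 log 0 = 0."""
    s = np.asarray(s, dtype=float)
    nz = s > 0
    return -np.sum(s[nz] * np.log(s[nz]))

def objective(s, a, T):
    """Objective (*): -sum_k a_k S_p^k - T H(S_p)."""
    return -np.dot(a, s) - T * entropy(s)

def lagrangian(s, lam, a, T):
    """Lagrangian (**): objective (*) plus lam * (sum_k S_p^k - 1)."""
    return objective(s, a, T) + lam * (np.sum(s) - 1.0)

def lagrangian_grad(s, lam, a, T):
    """The K+1 components of grad L (valid for strictly positive s).

    dL/dS_p^k = -a_k + T (log S_p^k + 1) + lam,   k = 1..K
    dL/dlam   = sum_k S_p^k - 1
    """
    s = np.asarray(s, dtype=float)
    d_s = -np.asarray(a) + T * (np.log(s) + 1.0) + lam
    d_lam = np.sum(s) - 1.0
    return np.append(d_s, d_lam)

# Hypothetical potentials a_k = log P(f_p | mu_k) for K = 3 clusters.
a = np.log(np.array([0.5, 0.3, 0.2]))
T = 0.7
s = np.array([0.2, 0.5, 0.3])  # any strictly positive point works here
lam = 0.4

# Central finite differences over the K+1 variables (S_p^1, ..., S_p^K, lam).
x = np.append(s, lam)
eps = 1e-6
numeric = np.zeros_like(x)
for i in range(x.size):
    e = np.zeros_like(x)
    e[i] = eps
    plus, minus = x + e, x - e
    numeric[i] = (lagrangian(plus[:-1], plus[-1], a, T)
                  - lagrangian(minus[:-1], minus[-1], a, T)) / (2 * eps)

print(np.allclose(numeric, lagrangian_grad(s, lam, a, T), atol=1e-6))
```

Setting every entry returned by `lagrangian_grad` to zero gives exactly the system $\nabla L = 0$ of $K+1$ equations from HINT 2; the last entry simply restates the constraint $\sum_k S_p^k = 1$.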
HINT 3: The goal of this exercise is to see how adding the entropy affects a linear loss. Optimization of (*) over distributions $S_p$ should result in the so-called soft-max operator, applicable to arbitrary $K$ potentials $\{a_k \mid 1 \le k \le K\}$.
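A minimal sketch of where HINT 3 leads, assuming the optimum takes the standard temperature soft-max form $S_p^k = e^{a_k/T} / \sum_j e^{a_j/T}$ (the form obtained by solving $\nabla L = 0$). The code checks numerically that no random distribution on the simplex achieves a lower value of (*) at $T = 1$, and then prints the soft-max for decreasing $T$. The potentials are hypothetical, and `softmax_T` and `objective` are illustrative names, not from the course material.

```python
import numpy as np

def softmax_T(a, T):
    """Temperature soft-max: S_p^k = exp(a_k / T) / sum_j exp(a_j / T).

    Subtracting max(a) before exponentiating avoids overflow for small T
    and does not change the result.
    """
    z = (np.asarray(a, dtype=float) - np.max(a)) / T
    w = np.exp(z)
    return w / w.sum()

def objective(s, a, T):
    """Objective (*): -sum_k a_k S_p^k - T H(S_p), with 0 log 0 = 0."""
    nz = s > 0
    return -np.dot(a, s) + T * np.sum(s[nz] * np.log(s[nz]))

# Hypothetical potentials a_k = log P(f_p | mu_k) for K = 3 clusters.
a = np.log(np.array([0.5, 0.3, 0.2]))

# Check: no random point on the simplex does better than the soft-max at T = 1.
rng = np.random.default_rng(0)
best = objective(softmax_T(a, 1.0), a, 1.0)
samples = rng.dirichlet(np.ones(3), size=10_000)
print(all(objective(s, a, 1.0) >= best - 1e-12 for s in samples))

# As T decreases, the distribution concentrates on argmax_k a_k.
for T in [10.0, 1.0, 0.1, 0.01]:
    print(T, np.round(softmax_T(a, T), 4))
```

With these potentials, $T = 1$ returns the cluster probabilities themselves (because $a_k = \log P$), large $T$ pushes $S_p$ toward the uniform distribution, and as $T \to 0$ all mass moves onto $k = 1$, the cluster with the largest $a_k$. In other words, the soft assignment degenerates into the hard K-means assignment $\arg\max_k a_k$ when the maximum is unique (with ties, the mass is split evenly among the maximizers).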