Question:

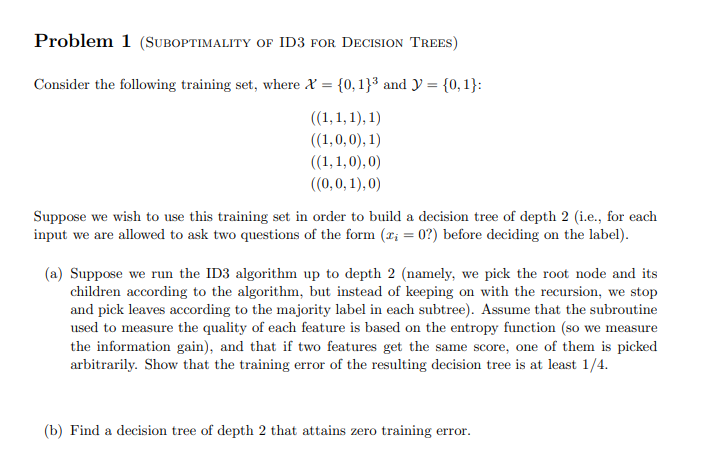

Problem 1 (SUBOPTIMALITY OF ID3 FOR DECISION TREES) Consider the following training set, where X = {0,1}^3 and Y = {0,1}:

((1,1,1), 1)
((1,0,0), 1)
((1,1,0), 0)
((0,0,1), 0)

Suppose we wish to use this training set in order to build a decision tree of depth 2 (i.e., for each input we are allowed to ask two questions of the form (x_i = 0?) before deciding on the label).

(a) Suppose we run the ID3 algorithm up to depth 2 (namely, we pick the root node and its children according to the algorithm, but instead of continuing the recursion, we stop and pick leaves according to the majority label in each subtree). Assume that the subroutine used to measure the quality of each feature is based on the entropy function (so we measure the information gain), and that if two features get the same score, one of them is picked arbitrarily. Show that the training error of the resulting decision tree is at least 1/4.

(b) Find a decision tree of depth 2 that attains zero training error.
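The quantities the problem asks about can be checked numerically. The following is a minimal Python sketch, not the textbook's solution: it computes the information gain of each feature at the root, counts the training mistakes that remain once the root is fixed, and tests one candidate depth-2 tree for part (b). The helper names (`entropy`, `information_gain`, `majority_errors`, `best_depth2_errors`, `candidate_tree`) are made up for illustration, features are indexed 0, 1, 2 (first, second, third coordinate), and the entropy is taken in base 2, which is an assumption that does not affect which feature scores highest.

```python
from collections import Counter
from math import log2

# Training set from the problem: (feature vector, label).
DATA = [((1, 1, 1), 1), ((1, 0, 0), 1), ((1, 1, 0), 0), ((0, 0, 1), 0)]


def entropy(labels):
    """Empirical entropy (base 2) of a list of labels."""
    n = len(labels)
    if n == 0:
        return 0.0
    return -sum((c / n) * log2(c / n) for c in Counter(labels).values())


def information_gain(examples, i):
    """Entropy of the labels minus the expected entropy after splitting on feature i."""
    gain = entropy([y for _, y in examples])
    for v in (0, 1):
        subset = [y for x, y in examples if x[i] == v]
        gain -= len(subset) / len(examples) * entropy(subset)
    return gain


def majority_errors(examples, i):
    """Mistakes made when splitting on feature i and labelling each leaf by majority."""
    errors = 0
    for v in (0, 1):
        labels = [y for x, y in examples if x[i] == v]
        if labels:
            errors += len(labels) - max(Counter(labels).values())
    return errors


def best_depth2_errors(examples, root):
    """Fewest mistakes of any depth-2 tree with the given root feature.

    Taking the minimum over the second-level feature gives a lower bound
    that holds no matter how ties are broken below the root.
    """
    total = 0
    for v in (0, 1):
        subset = [(x, y) for x, y in examples if x[root] == v]
        others = [j for j in range(3) if j != root]
        total += min(majority_errors(subset, j) for j in others)
    return total


def candidate_tree(x):
    """Candidate for part (b): ask the second feature, then the third."""
    return 1 if x[1] == x[2] else 0


root_gains = [information_gain(DATA, i) for i in range(3)]
id3_root = max(range(3), key=lambda i: root_gains[i])

print("information gain per feature:", root_gains)
print("feature chosen at the root:", id3_root)
print("mistakes with that root:", best_depth2_errors(DATA, id3_root), "of", len(DATA))
print("candidate tree mistakes:", sum(candidate_tree(x) != y for x, y in DATA))
```

Under these assumptions, only the first feature has positive gain at the root, so ID3 has no tie to break there. Below that root, the three examples whose first coordinate is 1 cannot be separated by a single further question, which leaves at least one mistake out of four. Splitting on the second feature first and then on the third classifies all four examples correctly.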
