Question: Problem 2 (25 Points): Suppose you are given the following data: he likes to sleep he likes to eat he likes to eat and sleep

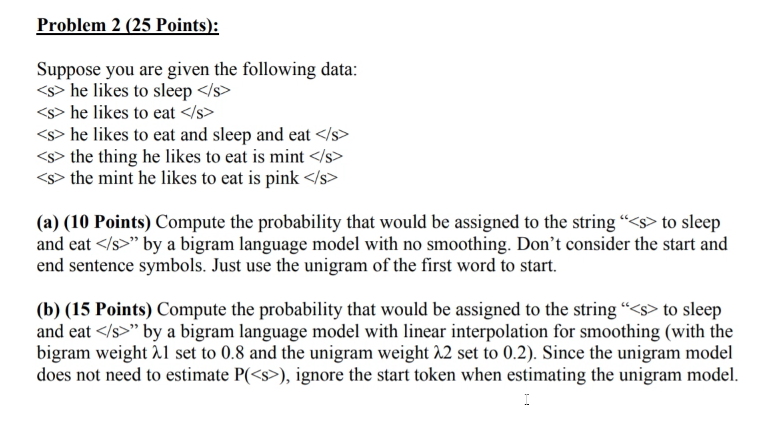

Problem 2 (25 Points): Suppose you are given the following data: he likes to sleep he likes to eat he likes to eat and sleep and eat the thing he likes to eat is mint the mint he likes to eat is pink (a) (10 Points) Compute the probability that would be assigned to the string " to sleep and eat )" by a bigram language model with no smoothing. Don't consider the start and end sentence symbols. Just use the unigram of the first word to start. (b) (15 Points) Compute the probability that would be assigned to the string" to sleep and eat " by a bigram language model with linear interpolation for smoothing (with the bigram weight l set to 0.8 and the unigram weight 22 set to 0.2). Since the unigram model does not need to estimate P(>), ignore the start token when estimating the unigram model. Problem 2 (25 Points): Suppose you are given the following data: he likes to sleep he likes to eat he likes to eat and sleep and eat the thing he likes to eat is mint the mint he likes to eat is pink (a) (10 Points) Compute the probability that would be assigned to the string " to sleep and eat )" by a bigram language model with no smoothing. Don't consider the start and end sentence symbols. Just use the unigram of the first word to start. (b) (15 Points) Compute the probability that would be assigned to the string" to sleep and eat " by a bigram language model with linear interpolation for smoothing (with the bigram weight l set to 0.8 and the unigram weight 22 set to 0.2). Since the unigram model does not need to estimate P(>), ignore the start token when estimating the unigram model

Step by Step Solution

There are 3 Steps involved in it

Get step-by-step solutions from verified subject matter experts