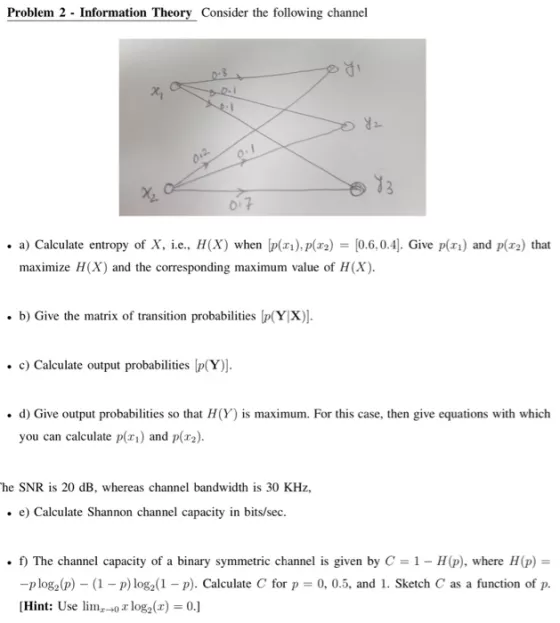

Problem 2 - Information Theory

Consider the following channel (see the figure above).

a) Calculate the entropy of X, i.e., $H(X)$, when $[p(x_1), p(x_2)] = [0.6, 0.4]$. Give the $p(x_1)$ and $p(x_2)$ that maximize $H(X)$ and the corresponding maximum value of $H(X)$.

b) Give the matrix of transition probabilities $[p(Y|X)]$.

c) Calculate the output probabilities $[p(Y)]$.

d) Give the output probabilities for which $H(Y)$ is maximum. For this case, give the equations with which you can calculate $p(x_1)$ and $p(x_2)$.

e) The SNR is 20 dB and the channel bandwidth is 30 kHz. Calculate the Shannon channel capacity in bits/sec.

f) The channel capacity of a binary symmetric channel is given by $C = 1 - H(p)$, where $H(p) = -p \log_2(p) - (1 - p) \log_2(1 - p)$. Calculate $C$ for $p = 0$, $0.5$, and $1$. Sketch $C$ as a function of $p$. (Hint: use $\lim_{x \to 0} x \log_2(x) = 0$.)
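Parts e) and f) do not depend on the channel diagram, so they can be checked numerically. The sketch below assumes that part e) refers to the Shannon-Hartley formula $C = B \log_2(1 + \mathrm{SNR})$ with the SNR converted from dB to a linear ratio. The function names `shannon_capacity`, `binary_entropy`, and `bsc_capacity` are illustrative and not part of the problem.

```python
import math

def shannon_capacity(bandwidth_hz, snr_db):
    """Shannon-Hartley capacity in bits/sec (assumed formula for part e)."""
    snr_linear = 10 ** (snr_db / 10)  # 20 dB corresponds to a ratio of 100
    return bandwidth_hz * math.log2(1 + snr_linear)

def binary_entropy(p):
    """H(p) = -p log2(p) - (1 - p) log2(1 - p), using the hint x log2(x) -> 0."""
    if p in (0.0, 1.0):
        return 0.0  # both terms vanish in the limit
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)

def bsc_capacity(p):
    """Capacity of a binary symmetric channel, C = 1 - H(p), in bits per use."""
    return 1 - binary_entropy(p)

# Part e): B = 30 kHz, SNR = 20 dB, roughly 199.7 kbit/s
print(f"C = {shannon_capacity(30e3, 20):.0f} bits/sec")

# Part f): C at the three requested crossover probabilities
for p in (0, 0.5, 1):
    print(f"p = {p}: C = {bsc_capacity(p)}")
```

The three values in part f) show the shape of the sketch: $C$ is 1 at $p = 0$ and $p = 1$, falls to 0 at $p = 0.5$, and is symmetric about $p = 0.5$.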

Step by Step Solution

There are 3 Steps involved in it

Entropy: $H(X) = -\sum_i p(x_i) \log_2 p(x_i)$ bits/symbol. Given $[p(x_1), p(x_2)] = [0.6, 0.4]$, i.e. $p(x_1) = 0.6$ and $p(x_2) = 0.4$, $H(X)$ ...
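The entropy calculation for part a) can be checked with a few lines of Python. This is a minimal sketch using the standard definition of entropy in bits. The helper name `entropy` is illustrative. The maximizing distribution is the uniform one, $p(x_1) = p(x_2) = 0.5$, which is a standard result for a binary source.

```python
import math

def entropy(probs):
    """Shannon entropy in bits/symbol; zero-probability terms contribute 0."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

h_given = entropy([0.6, 0.4])  # distribution given in part a)
h_max = entropy([0.5, 0.5])    # uniform distribution maximizes H(X)

print(f"H(X) for [0.6, 0.4] = {h_given:.4f} bits/symbol")
print(f"max H(X) at [0.5, 0.5] = {h_max:.4f} bits/symbol")
```

For the given distribution this comes to about 0.971 bits/symbol, against a maximum of 1 bit/symbol.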
