Question: Problem 3 (20 points)

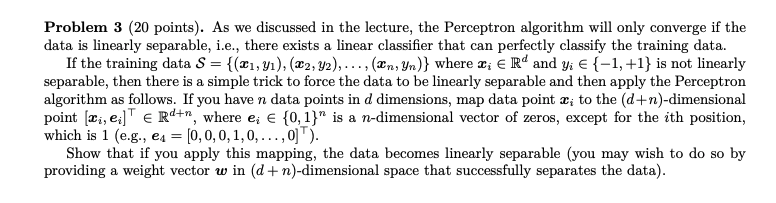

Problem 3 (20 points). As we discussed in the lecture, the Perceptron algorithm will only converge if the data is linearly separable, i.e., there exists a linear classifier that can perfectly classify the training data. If the training data $S = \{(x_1, y_1), (x_2, y_2), \ldots, (x_n, y_n)\}$, where $x_i \in \mathbb{R}^d$ and $y_i \in \{-1, +1\}$, is not linearly separable, then there is a simple trick to force the data to be linearly separable and then apply the Perceptron algorithm as follows. If you have $n$ data points in $d$ dimensions, map data point $x_i$ to the $(d+n)$-dimensional point $[x_i, e_i]^\top \in \mathbb{R}^{d+n}$, where $e_i \in \{0,1\}^n$ is an $n$-dimensional vector of zeros, except for the $i$th position, which is 1 (e.g., $e_4 = [0,0,0,1,0,\ldots,0]^\top$). Show that if you apply this mapping, the data becomes linearly separable (you may wish to do so by providing a weight vector $w$ in $(d+n)$-dimensional space that successfully separates the data).
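The hint about providing a weight vector can be checked numerically. The sketch below is not the course's official solution; it assumes one natural candidate, $w = [0, \ldots, 0, y_1, \ldots, y_n]^\top$ (zeros on the original $d$ coordinates, the labels on the $n$ added coordinates), for which $w^\top [x_i, e_i]^\top = y_i$ and therefore $y_i \, w^\top [x_i, e_i]^\top = y_i^2 = 1 > 0$ for every $i$. The function names `augment`, `separating_weights`, and `dot`, and the XOR-style toy data, are illustrative assumptions that do not appear in the problem statement.

```python
def augment(points):
    """Map each x_i in R^d to the concatenation [x_i, e_i] in R^(d+n)."""
    n = len(points)
    augmented = []
    for i, x in enumerate(points):
        e_i = [0.0] * n      # n-dimensional vector of zeros ...
        e_i[i] = 1.0         # ... except for the i-th position
        augmented.append(list(x) + e_i)
    return augmented


def separating_weights(labels, d):
    """Assumed candidate w: zeros on the d original coordinates, then the labels."""
    return [0.0] * d + [float(y) for y in labels]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


# Toy XOR-style data (hypothetical): not linearly separable in R^2.
points = [(0.0, 0.0), (1.0, 1.0), (0.0, 1.0), (1.0, 0.0)]
labels = [+1, +1, -1, -1]

z = augment(points)                       # each z_i lives in R^(2+4)
w = separating_weights(labels, d=2)       # [0, 0, 1, 1, -1, -1]

# A point is correctly classified when y_i * (w . z_i) > 0.
margins = [y * dot(w, z_i) for y, z_i in zip(labels, z)]
print(margins)  # [1.0, 1.0, 1.0, 1.0]
```

Every margin equals 1 regardless of the original coordinates, because the candidate $w$ ignores $x_i$ entirely and reads each label off its own indicator coordinate. The same argument holds for any $n$ and $d$, which is why the mapped data is always linearly separable.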
