Question: Problem 3 (25%). Robust Linear Regression

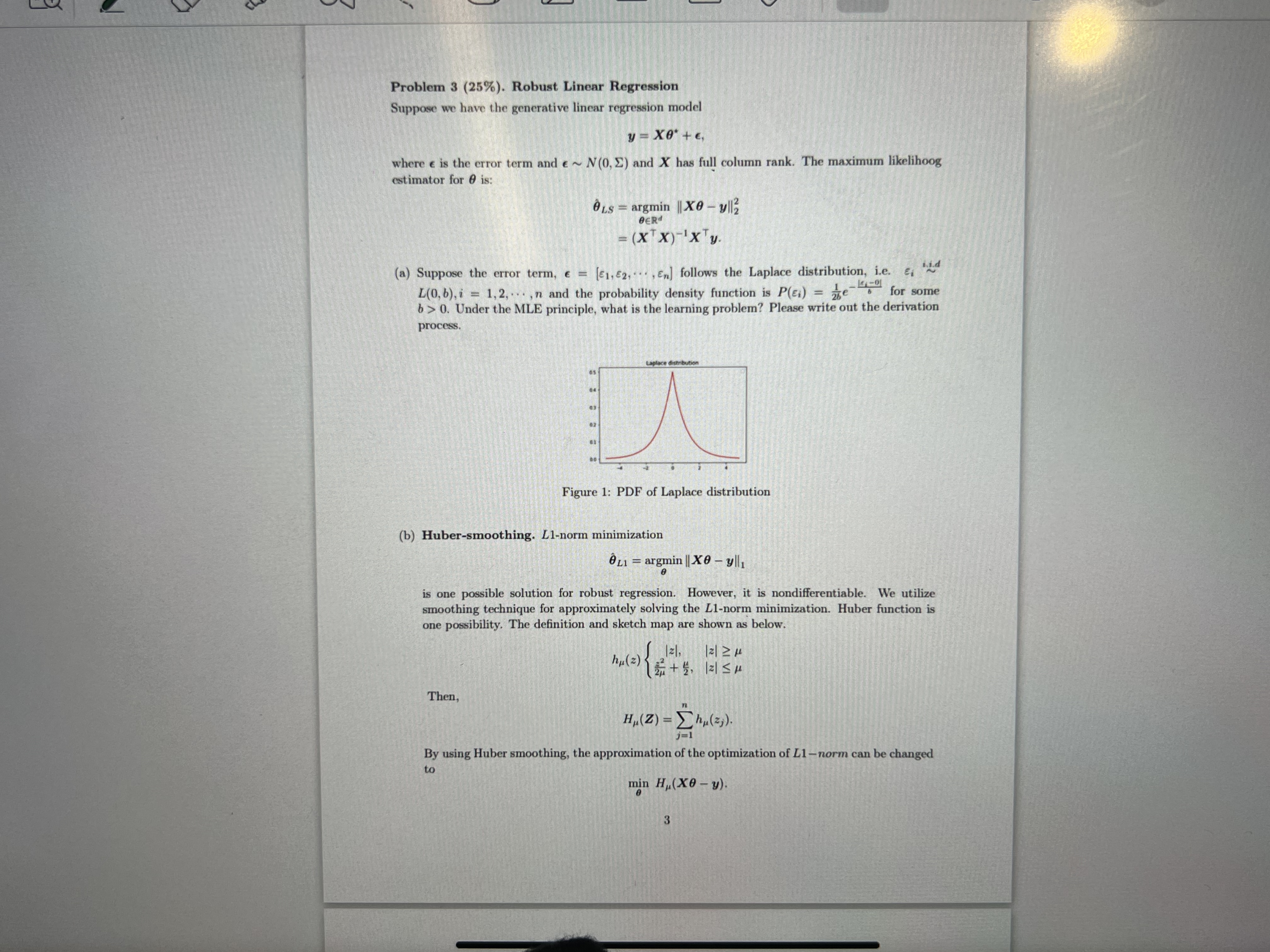

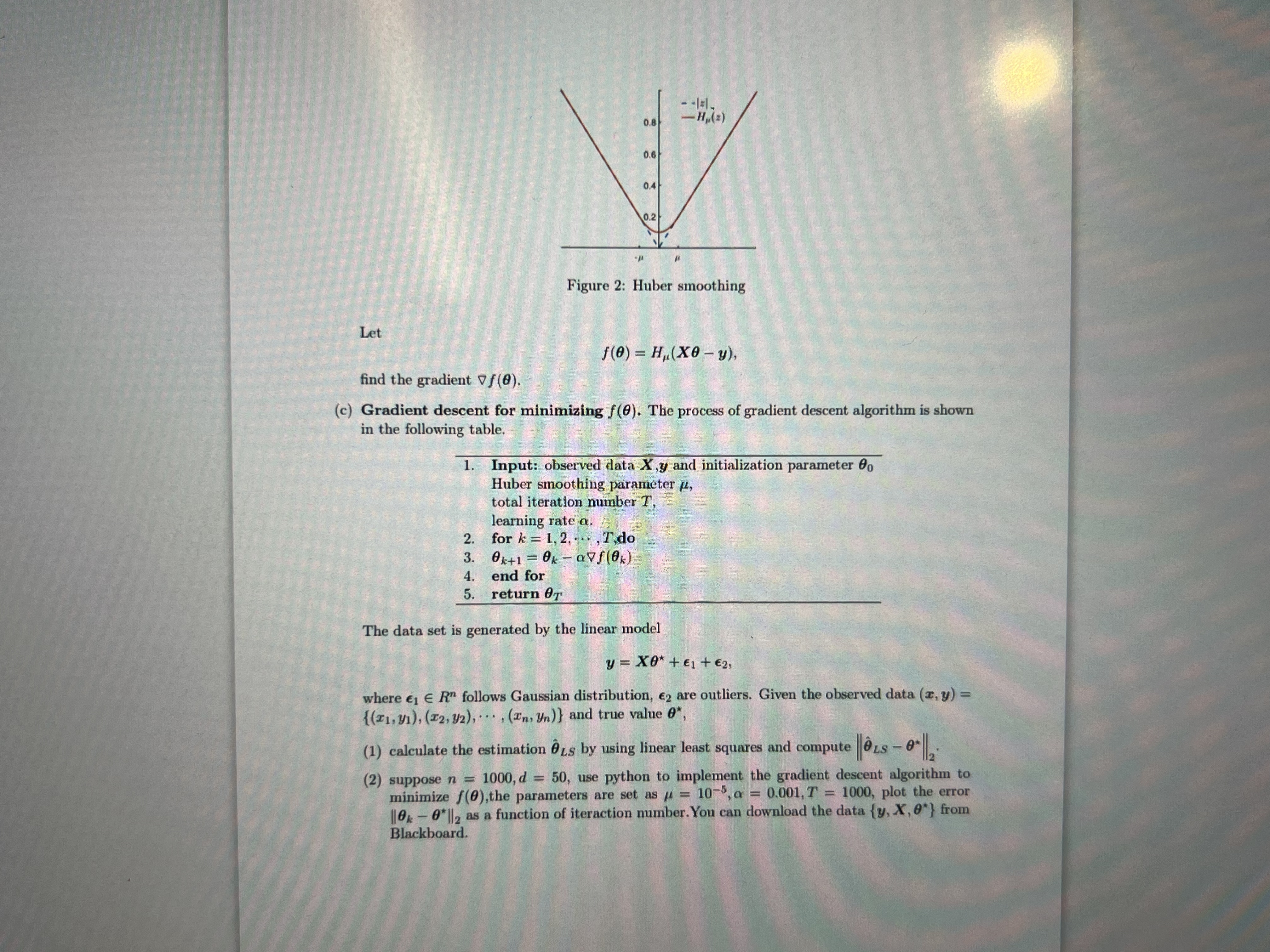

Problem 3 (25%). Robust Linear Regression

Suppose we have the generative linear regression model $y = X\theta^* + \epsilon$, where $\epsilon$ is the error term, $\epsilon \sim N(0, \Sigma)$, and $X$ has full column rank. The maximum likelihood estimator for $\theta$ is:

$$\theta_{OLS} = \arg\min_{\theta \in \mathbb{R}^d} \|X\theta - y\|_2^2 = (X^\top X)^{-1} X^\top y$$

(a) Suppose the error term $\epsilon = [\epsilon_1, \epsilon_2, \dots, \epsilon_n]$ follows the Laplace distribution, i.e. $\epsilon_i \overset{iid}{\sim} L(0, b)$, $i = 1, 2, \dots, n$, and the probability density function is

$$P(\epsilon_i) = \frac{1}{2b} e^{-\frac{|\epsilon_i|}{b}}$$

for some $b > 0$. Under the MLE principle, what is the learning problem? Please write out the derivation process.

Figure 1: PDF of Laplace distribution

(b) Huber smoothing. L1-norm minimization

$$\theta_{L1} = \arg\min_{\theta} \|X\theta - y\|_1$$

is one possible solution for robust regression. However, the objective is nondifferentiable. We use a smoothing technique to solve the L1-norm minimization approximately. The Huber function is one possibility. Its definition and a sketch are shown below.

$$h_\mu(z) = \begin{cases} |z|, & |z| \ge \mu \\ \dfrac{z^2}{2\mu} + \dfrac{\mu}{2}, & |z| \le \mu \end{cases}$$

Then, $H_\mu(z) = \sum_{j=1}^{n} h_\mu(z_j)$.

By using Huber smoothing, the L1-norm optimization can be approximated by

$$\min_{\theta} H_\mu(X\theta - y).$$
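The two objectives in this problem can be checked numerically. The sketch below is illustrative only and is not the official solution. It assumes the standard Laplace density $\frac{1}{2b} e^{-|\epsilon_i|/b}$, under which the negative log-likelihood is $n \log(2b) + \frac{1}{b}\|X\theta - y\|_1$, so the MLE coincides with L1-norm minimization. It also assumes the piecewise Huber function $|z|$ for $|z| \ge \mu$ and $\frac{z^2}{2\mu} + \frac{\mu}{2}$ otherwise. All function names (`laplace_nll`, `huber`, `fit_huber`), the synthetic data, and the choice of plain gradient descent with step size $\mu / \|X\|_2^2$ are hypothetical choices made for this illustration.

```python
import numpy as np


def laplace_nll(theta, X, y, b):
    """Negative log-likelihood of y = X theta + eps with eps_i iid Laplace(0, b)."""
    r = X @ theta - y
    # -sum_i log( exp(-|r_i| / b) / (2b) ) = n log(2b) + ||r||_1 / b
    return len(y) * np.log(2.0 * b) + np.abs(r).sum() / b


def huber(z, mu):
    """Elementwise h_mu(z): |z| if |z| >= mu, else z^2 / (2 mu) + mu / 2."""
    z = np.asarray(z, dtype=float)
    return np.where(np.abs(z) >= mu, np.abs(z), z**2 / (2.0 * mu) + mu / 2.0)


def huber_objective(theta, X, y, mu):
    """H_mu(X theta - y) = sum_j h_mu((X theta - y)_j)."""
    return huber(X @ theta - y, mu).sum()


def huber_gradient(theta, X, y, mu):
    """Gradient of H_mu(X theta - y) with respect to theta."""
    r = X @ theta - y
    # h_mu'(z) is sign(z) for |z| >= mu and z / mu otherwise,
    # which is the same as clipping z / mu to [-1, 1].
    return X.T @ np.clip(r / mu, -1.0, 1.0)


def fit_huber(X, y, mu=0.1, n_iter=2000):
    """Gradient descent on the Huber-smoothed objective (illustrative solver)."""
    # The gradient is Lipschitz with constant at most ||X||_2^2 / mu,
    # so a step of 1 / lipschitz never increases the objective.
    lipschitz = np.linalg.norm(X, 2) ** 2 / mu
    theta = np.linalg.lstsq(X, y, rcond=None)[0]  # start from the OLS solution
    for _ in range(n_iter):
        theta = theta - huber_gradient(theta, X, y, mu) / lipschitz
    return theta


# Synthetic data (assumed for illustration): Laplace noise plus a few gross outliers.
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 3))
theta_true = np.array([1.0, -2.0, 0.5])
y = X @ theta_true + rng.laplace(scale=0.5, size=200)
y[:10] += 30.0  # outliers that pull the least-squares fit

theta_ols = np.linalg.lstsq(X, y, rcond=None)[0]
theta_huber = fit_huber(X, y, mu=0.1)
print("OLS estimate:  ", theta_ols)
print("Huber estimate:", theta_huber)
```

Because $b$ only enters the Laplace negative log-likelihood as an additive constant and a positive scale factor, minimizing `laplace_nll` over `theta` is the same as minimizing the L1 norm of the residual. Smaller values of `mu` make `huber_objective` a closer approximation to that L1 norm, at the cost of a smaller admissible step size for gradient descent.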
