Question: Problem 3: Cache Overview (25 points) A. In the following questions please choose the proper number of underlined options in order to best complete the

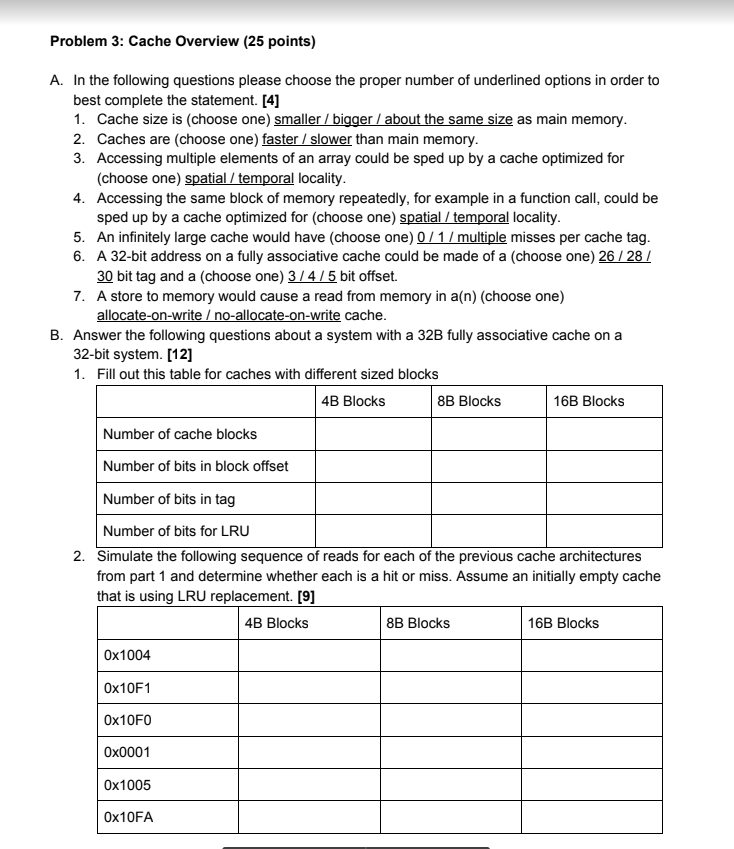

Problem 3: Cache Overview (25 points) A. In the following questions please choose the proper number of underlined options in order to best complete the statement. [4] 1. Cache size is (choose one) smaller / bigger / about the same size as main memory. 2. Caches are (choose one) faster / slower than main memory. 3. Accessing multiple elements of an array could be sped up by a cache optimized for (choose one) spatial / temporal locality. 4. Accessing the same block of memory repeatedly, for example in a function call, could be sped up by a cache optimized for (choose one) spatial / temporal locality. 5. An infinitely large cache would have (choose one) 0 / 1 / multiple misses per cache tag. 6. A 32-bit address on a fully associative cache could be made of a (choose one) 26 / 28 / 30 bit tag and a (choose one) 3 / 4 / 5 bit offset. 7. A store to memory would cause a read from memory in a(n) (choose one) allocate-on-write / no-allocate-on-write cache. B. Answer the following questions about a system with a 32B fully associative cache on a 32-bit system. [12] 1. Fill out this table for caches with different sized blocks

4B Blocks 8B Blocks 16B Blocks

Number of cache blocks Number of bits in block offset Number of bits in tag Number of bits for LRU 2. Simulate the following sequence of reads for each of the previous cache architectures from part 1 and determine whether each is a hit or miss. Assume an initially empty cache that is using LRU replacement. [9]

4B Blocks 8B Blocks 16B Blocks

0x1004 0x10F1 0x10F0 0x0001 0x1005 0x10FA

Problem 3: Cache Overview (25 points) A. In the following questions please choose the proper number of underlined options in order to best complete the statement. [4] 1. Cache size is (choose one) smaller /bigger /about the same size as main memory 2. Caches are (choose one) faster/ slower than main memory 3. Accessing multiple elements of an array could be sped up by a cache optimized for (choose one) spatial/temporal locality Accessing the same block of memory repeatedly, for example in a function call, could be sped up by a cache optimized for (choose one) spatial/temporal locality 4. 5. An infinitely large cache would have (choose one) 0/1/multiple misses per cache tag 6. A 32-bit address on a fully associative cache could be made of a (choose one) 26/28 30 bit tag and a (choose one) 3/4/5 bit offset. A store to memory would cause a read from memory in a(n) (choose one) 7. ocate-on-Wri on-write cache B. Answer the following questions about a system with a 32B fully associative cache on a 32-bit system. [12] 1. Fill out this table for caches with different sized blocks 4B Blocks 8B Blocks 16B Blocks Number of cache blocks Number of bits in block offset Number of bits in tag Number of bits for LRU 2. Simulate the following sequence of reads for each of the previous cache architectures from part 1 and determine whether each is a hit or miss. Assume an initially empty cache that is using LRU replacement. [9] 4B Blocks 8B Blocks 16B Blocks 0x1004 0x10F1 0x10F0 0x0001 0x1005 0x10FA

Step by Step Solution

There are 3 Steps involved in it

Get step-by-step solutions from verified subject matter experts