
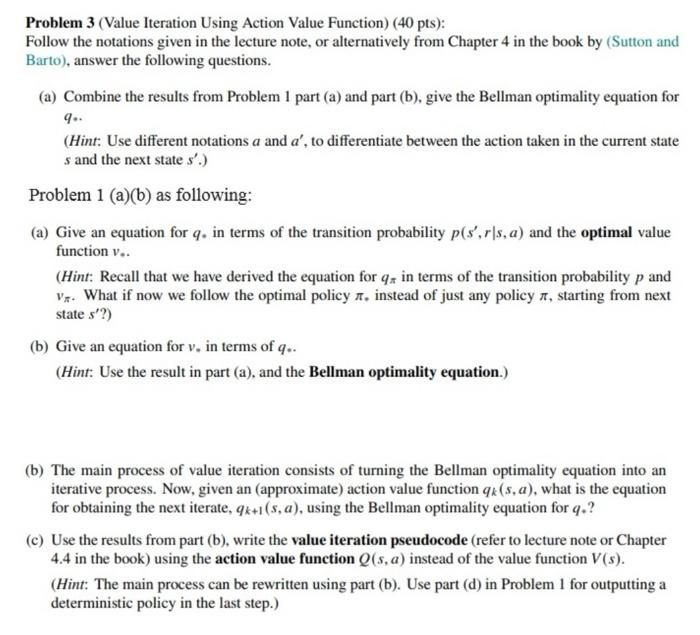

Problem 3 (Value Iteration Using Action Value Function) (40 pts): Following the notation in the lecture notes, or alternatively in Chapter 4 of the book by Sutton and Barto, answer the following questions.

(a) Combine the results from Problem 1 parts (a) and (b) to give the Bellman optimality equation for $q_*$. (Hint: Use different notations $a$ and $a'$ to differentiate between the action taken in the current state $s$ and the action taken in the next state $s'$.)

Problem 1 parts (a) and (b) are as follows:

(a) Give an equation for $q_*$ in terms of the transition probability $p(s', r \mid s, a)$ and the optimal value function $v_*$. (Hint: Recall that we have derived the equation for $q_\pi$ in terms of the transition probability $p$ and $v_\pi$. What if we now follow the optimal policy $\pi_*$, instead of just any policy $\pi$, starting from the next state $s'$?)

(b) Give an equation for $v_*$ in terms of $q_*$. (Hint: Use the result in part (a) and the Bellman optimality equation.)

(b) The main process of value iteration consists of turning the Bellman optimality equation into an iterative process. Given an (approximate) action value function $q_k(s, a)$, what is the equation for obtaining the next iterate, $q_{k+1}(s, a)$, using the Bellman optimality equation for $q_*$?

(c) Using the result from part (b), write the value iteration pseudocode (refer to the lecture notes or Section 4.4 of the book) using the action value function $Q(s, a)$ instead of the value function $V(s)$. (Hint: The main process can be rewritten using part (b). Use part (d) of Problem 1 to output a deterministic policy in the last step.)

Step by Step Solution
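As a hedged sketch of what the three parts are asking for, and not the course's official solution: in Sutton and Barto's notation the Bellman optimality equation for the action value function is $q_*(s, a) = \sum_{s', r} p(s', r \mid s, a)\,[\,r + \gamma \max_{a'} q_*(s', a')\,]$, and turning it into an update gives $q_{k+1}(s, a) = \sum_{s', r} p(s', r \mid s, a)\,[\,r + \gamma \max_{a'} q_k(s', a')\,]$. The Python below illustrates part (c) under several assumptions that are not in the problem statement: the model is a hypothetical nested dict `P[s][a]` holding `(probability, next_state, reward)` triples, a state with no actions is treated as terminal with value 0, updates are done in place (as in the book's value iteration pseudocode) rather than from a separate copy of $q_k$, and the tiny three-state MDP is made up for the example.

```python
def q_value_iteration(P, gamma=0.9, theta=1e-8):
    """Value iteration on Q(s, a) for a finite MDP.

    P[s][a] is a list of (prob, next_state, reward) triples (assumed layout).
    A state whose action dict is empty is treated as terminal.
    """
    # Initialize Q(s, a) arbitrarily; zeros are used here.
    Q = {s: {a: 0.0 for a in P[s]} for s in P}

    while True:
        delta = 0.0
        for s in P:
            for a in P[s]:
                old_q = Q[s][a]
                # Bellman optimality backup for q: expectation over (s', r)
                # of r + gamma * max_{a'} Q(s', a'); terminal s' contributes 0.
                new_q = sum(
                    prob * (r + gamma * max(Q[s2].values(), default=0.0))
                    for prob, s2, r in P[s][a]
                )
                Q[s][a] = new_q  # in-place update
                delta = max(delta, abs(new_q - old_q))
        if delta < theta:
            break

    # Deterministic greedy policy: pi(s) = argmax_a Q(s, a) for non-terminal s.
    policy = {s: max(Q[s], key=Q[s].get) for s in P if Q[s]}
    return Q, policy


# Made-up example MDP: A -> B -> T (terminal), all transitions deterministic.
P_example = {
    "A": {"stay": [(1.0, "A", 0.0)], "go": [(1.0, "B", 1.0)]},
    "B": {"stay": [(1.0, "B", 0.0)], "exit": [(1.0, "T", 10.0)]},
    "T": {},
}

Q, policy = q_value_iteration(P_example, gamma=0.9)
print(policy)
```

In this toy example the greedy policy picks `go` in `A` and `exit` in `B`. A synchronous variant that matches the $q_k \to q_{k+1}$ equation literally would compute every `new_q` from a frozen copy of `Q` before overwriting it; both variants converge to the same fixed point.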
