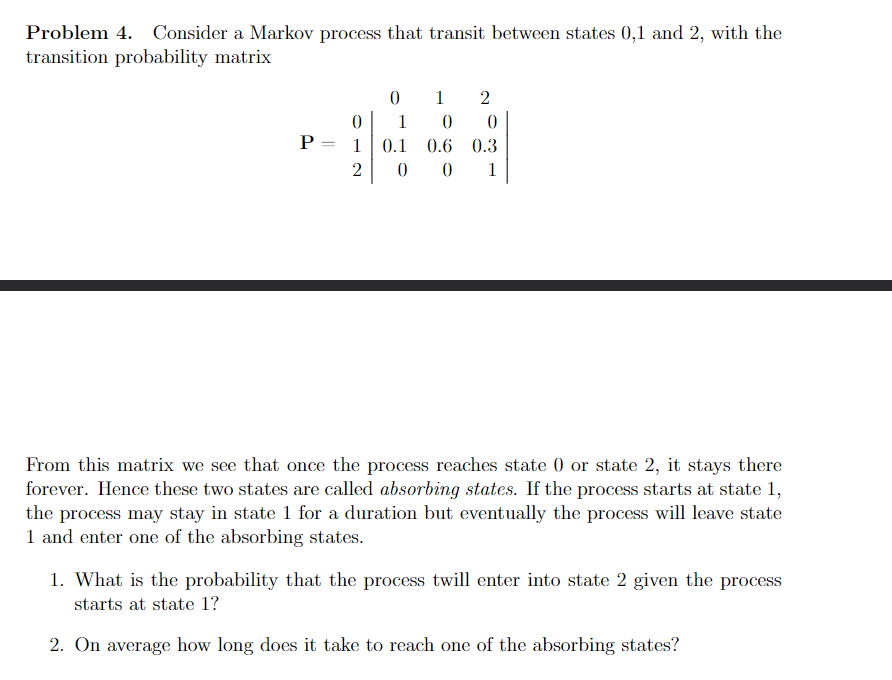

Question: Problem 4. Consider a Markov process that transits between states 0, 1 and 2, with transition probability matrix P.

From this matrix we see that once the process reaches either of two particular states, it stays there forever. Hence these two states are called absorbing states. If the process starts in the remaining state, it may stay there for a while, but eventually it will leave that state and enter one of the absorbing states.
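A short derivation of why the process must eventually leave, given as a sketch under an assumption: the matrix is not reproduced in the text, so suppose (hypothetically) that states 0 and 2 are the absorbing ones, i.e. $P_{00} = P_{22} = 1$, and that state 1 is the non-absorbing state, with self-transition probability $P_{11} < 1$. By the Markov property, each step spent in state 1 stays there with probability $P_{11}$ regardless of the past, so the number of steps $T$ until the process leaves state 1 (and is therefore absorbed) is geometric:

$$
P(T = k \mid X_0 = 1) = P_{11}^{\,k-1}\,(1 - P_{11}), \qquad k = 1, 2, \dots
$$

$$
P(T > k \mid X_0 = 1) = P_{11}^{\,k} \longrightarrow 0 \quad \text{as } k \to \infty,
\qquad
E[T \mid X_0 = 1] = \sum_{k=1}^{\infty} k\,P_{11}^{\,k-1}(1 - P_{11}) = \frac{1}{1 - P_{11}} .
$$

The vanishing tail is the precise sense in which the process "eventually" leaves: the chance of still being in state 1 after $k$ steps goes to zero.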

What is the probability that the process will eventually enter a particular absorbing state, given that it starts in the non-absorbing state?

On average, how long does it take to reach one of the absorbing states?
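Both questions can be answered with the standard fundamental-matrix method for absorbing Markov chains. The sketch below is a minimal illustration, not the posted solution: the matrix in the problem is not available in the text, so the numbers in the hypothetical `P_EXAMPLE` are placeholders, and the layout (states 0 and 2 absorbing, state 1 not) is an assumption. With $Q$ the block of $P$ among non-absorbing states and $R$ the block from non-absorbing to absorbing states, solving $(I - Q)X = [R \mid \mathbf{1}]$ gives the absorption probabilities $B = (I-Q)^{-1}R$ and the expected steps to absorption $t = (I-Q)^{-1}\mathbf{1}$. Exact fractions are used so the answers come out in the form a hand calculation would give.

```python
from fractions import Fraction


def solve(A, rhs):
    """Solve A X = rhs by Gauss-Jordan elimination with exact fractions.

    A is an n x n list of lists, rhs is an n x m list of lists; returns X (n x m).
    Raises ValueError if A is singular.
    """
    n = len(A)
    M = [list(A[i]) + list(rhs[i]) for i in range(n)]  # augmented [A | rhs]
    for col in range(n):
        pivot = next((r for r in range(col, n) if M[r][col] != 0), None)
        if pivot is None:
            raise ValueError("matrix is singular")
        M[col], M[pivot] = M[pivot], M[col]
        p = M[col][col]
        M[col] = [x / p for x in M[col]]  # make the pivot equal to 1
        for r in range(n):  # clear this column from every other row
            if r != col and M[r][col] != 0:
                f = M[r][col]
                M[r] = [a - f * b for a, b in zip(M[r], M[col])]
    return [row[n:] for row in M]


def absorbing_chain_summary(P):
    """Return (absorbing, transient, B, t) for an absorbing Markov chain.

    B[(s, a)] = P(absorbed in state a | start in non-absorbing state s);
    t[s] = expected number of steps to absorption starting from s.
    """
    P = [[Fraction(x) for x in row] for row in P]
    if any(sum(row) != 1 for row in P):
        raise ValueError("each row of P must sum to 1")
    n = len(P)
    # A state is absorbing exactly when it returns to itself with probability 1.
    absorbing = [i for i in range(n) if P[i][i] == 1]
    transient = [i for i in range(n) if i not in absorbing]
    I_minus_Q = [[Fraction(int(i == j)) - P[i][j] for j in transient] for i in transient]
    # Right-hand side [R | 1]: one column per absorbing state plus a column of ones.
    rhs = [[P[i][a] for a in absorbing] + [Fraction(1)] for i in transient]
    X = solve(I_minus_Q, rhs)
    B = {(s, a): X[k][j] for k, s in enumerate(transient) for j, a in enumerate(absorbing)}
    t = {s: X[k][-1] for k, s in enumerate(transient)}
    return absorbing, transient, B, t


# Hypothetical matrix (rows = "from", columns = "to"); NOT the numbers of Problem 4.
F = Fraction
P_EXAMPLE = [
    [F(1), F(0), F(0)],
    [F(3, 10), F(1, 2), F(1, 5)],
    [F(0), F(0), F(1)],
]

absorbing, transient, B, t = absorbing_chain_summary(P_EXAMPLE)
for (s, a), prob in B.items():
    print(f"P(absorbed in state {a} | start in state {s}) = {prob}")
for s, steps in t.items():
    print(f"E[steps to absorption | start in state {s}] = {steps}")
```

With a single non-absorbing state, as assumed here, this reduces to $P_{12}/(1 - P_{11})$ for absorption in state 2 and $1/(1 - P_{11})$ for the mean time, giving 2/5 and 2 for the placeholder numbers. If the non-absorbing states contained a closed class of their own, $I - Q$ would be singular and `solve` raises `ValueError`.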
