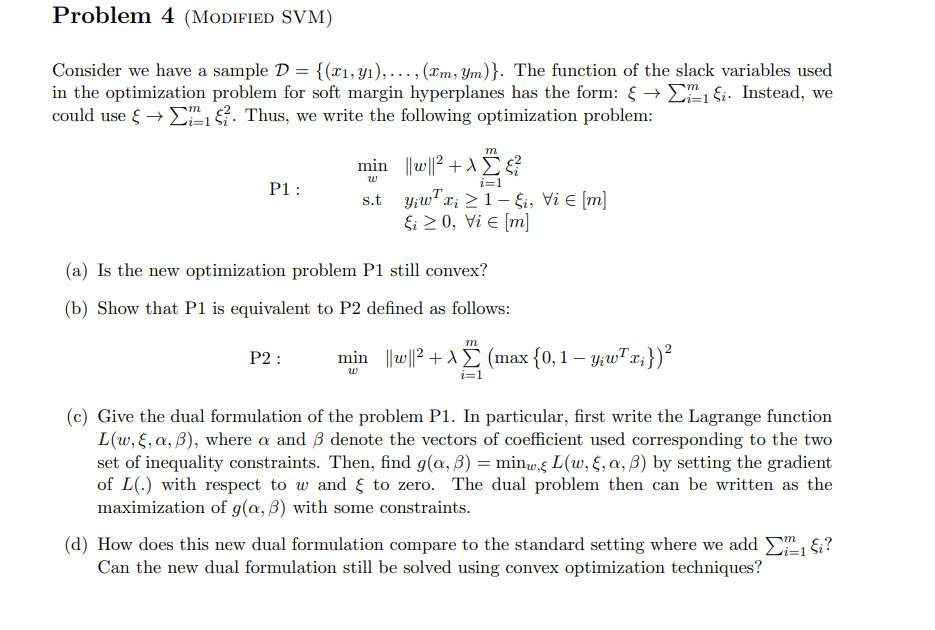

Question: Problem 4 (MODIFIED SVM) Consider we have a sample D = {(x1, y),..., (IM, Ym)}. The function of the slack variables used in the optimization

Problem 4 (MODIFIED SVM) Consider we have a sample D = {(x1, y),..., (IM, Ym)}. The function of the slack variables used in the optimization problem for soft margin hyperplanes has the form: & + fi. Instead, we could use + + $. Thus, we write the following optimization problem: m i=1 P1 : min ||W|2 + 1 & 2 st gia v >1-$, Vi [m] Si > 0, Vi [m] (a) Is the new optimization problem P1 still convex? (b) Show that P1 is equivalent to P2 defined as follows: m P2 : min ||0||2 + 1 (max {0,1 Y;WTx;})? i=1 (C) Give the dual formulation of the problem P1. In particular, first write the Lagrange function L(w, , a,B), where a and B denote the vectors of coefficient used corresponding to the two set of inequality constraints. Then, find g(a,b) = minw, L(w, f,a, B) by setting the gradient of L(.) with respect to w and to zero. The dual problem then can be written as the maximization of g(a,b) with some constraints. (d) How does this new dual formulation compare to the standard setting where we add 1 i? Can the new dual formulation still be solved using convex optimization techniques? Problem 4 (MODIFIED SVM) Consider we have a sample D = {(x1, y),..., (IM, Ym)}. The function of the slack variables used in the optimization problem for soft margin hyperplanes has the form: & + fi. Instead, we could use + + $. Thus, we write the following optimization problem: m i=1 P1 : min ||W|2 + 1 & 2 st gia v >1-$, Vi [m] Si > 0, Vi [m] (a) Is the new optimization problem P1 still convex? (b) Show that P1 is equivalent to P2 defined as follows: m P2 : min ||0||2 + 1 (max {0,1 Y;WTx;})? i=1 (C) Give the dual formulation of the problem P1. In particular, first write the Lagrange function L(w, , a,B), where a and B denote the vectors of coefficient used corresponding to the two set of inequality constraints. Then, find g(a,b) = minw, L(w, f,a, B) by setting the gradient of L(.) with respect to w and to zero. The dual problem then can be written as the maximization of g(a,b) with some constraints. (d) How does this new dual formulation compare to the standard setting where we add 1 i? Can the new dual formulation still be solved using convex optimization techniques

Step by Step Solution

There are 3 Steps involved in it

Get step-by-step solutions from verified subject matter experts