Question: Problem 5 (Pattern recognition)

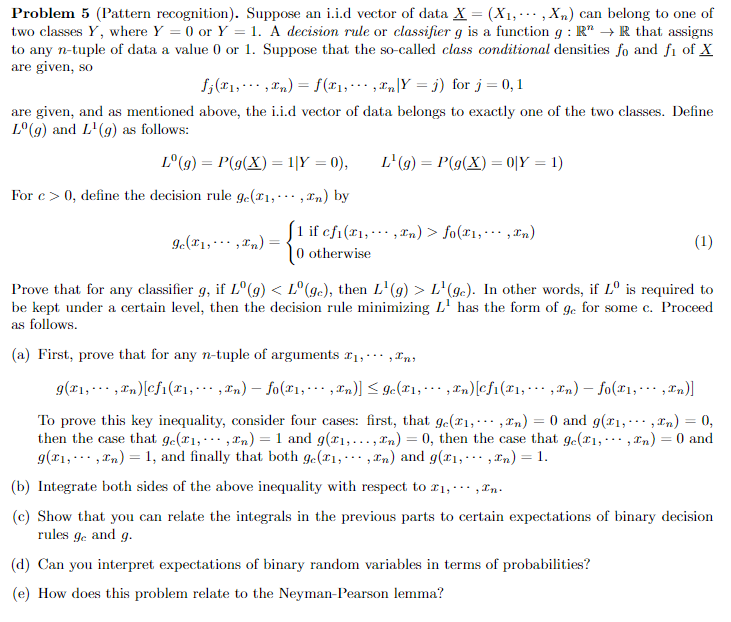

Problem 5 (Pattern recognition). Suppose an i.i.d. vector of data $X = (X_1, \ldots, X_n)$ can belong to one of two classes $Y$, where $Y = 0$ or $Y = 1$. A decision rule or classifier $g$ is a function $g : \mathbb{R}^n \to \mathbb{R}$ that assigns to any $n$-tuple of data a value 0 or 1. Suppose that the so-called class conditional densities $f_0$ and $f_1$ of $X$ are given, so $f_j(x_1, \ldots, x_n) = f(x_1, \ldots, x_n \mid Y = j)$ for $j = 0, 1$ are given, and, as mentioned above, the i.i.d. vector of data belongs to exactly one of the two classes. Define $L^0(g)$ and $L^1(g)$ as follows:

$$L^0(g) = P(g(X) = 1 \mid Y = 0), \qquad L^1(g) = P(g(X) = 0 \mid Y = 1).$$

For $c > 0$, define the decision rule $g_c(x_1, \ldots, x_n)$ by

$$g_c(x_1, \ldots, x_n) = \begin{cases} 1 & \text{if } c f_1(x_1, \ldots, x_n) > f_0(x_1, \ldots, x_n), \\ 0 & \text{otherwise.} \end{cases} \tag{1}$$

Prove that for any classifier $g$, if $L^0(g) \le L^0(g_c)$, then $L^1(g) \ge L^1(g_c)$. In other words, if $L^0$ is required to be kept under a certain level, then the decision rule minimizing $L^1$ has the form of $g_c$ for some $c$. Proceed as follows.

(a) First, prove that for any $n$-tuple of arguments $x_1, \ldots, x_n$,

$$g(x_1, \ldots, x_n)\,[c f_1(x_1, \ldots, x_n) - f_0(x_1, \ldots, x_n)] \le g_c(x_1, \ldots, x_n)\,[c f_1(x_1, \ldots, x_n) - f_0(x_1, \ldots, x_n)].$$

To prove this key inequality, consider four cases: first, that $g_c(x_1, \ldots, x_n) = 0$ and $g(x_1, \ldots, x_n) = 0$; then the case that $g_c(x_1, \ldots, x_n) = 1$ and $g(x_1, \ldots, x_n) = 0$; then the case that $g_c(x_1, \ldots, x_n) = 0$ and $g(x_1, \ldots, x_n) = 1$; and finally that both $g_c(x_1, \ldots, x_n) = 1$ and $g(x_1, \ldots, x_n) = 1$.

(b) Integrate both sides of the above inequality with respect to $x_1, \ldots, x_n$.

(c) Show that you can relate the integrals in the previous parts to certain expectations of the binary decision rules $g_c$ and $g$.

(d) Can you interpret expectations of binary random variables in terms of probabilities?

(e) How does this problem relate to the Neyman-Pearson lemma?
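A small numerical sanity check can make the claim concrete before attempting the proof. The sketch below is not part of the problem: it assumes $n = 1$, takes the class conditional densities to be $N(0, 1)$ and $N(1, 1)$, fixes $c = 1$, and picks an arbitrary competing rule $g$ that outputs 1 when $|x| > 1.1$. All of these choices, and the helper names `normal_pdf`, `integrate`, and `errors`, are illustrative assumptions. Integrals are approximated by a midpoint Riemann sum on $[-10, 10]$.

```python
import math

def normal_pdf(x, mean):
    """Density of N(mean, 1) at x (assumed example density)."""
    return math.exp(-0.5 * (x - mean) ** 2) / math.sqrt(2.0 * math.pi)

def f0(x):
    return normal_pdf(x, 0.0)  # assumed class conditional density for Y = 0

def f1(x):
    return normal_pdf(x, 1.0)  # assumed class conditional density for Y = 1

C = 1.0  # assumed value of the constant c > 0

def g_c(x):
    """Rule (1): output 1 exactly when c * f1(x) > f0(x)."""
    return 1 if C * f1(x) > f0(x) else 0

def g(x):
    """An arbitrary competing rule, chosen only for illustration."""
    return 1 if abs(x) > 1.1 else 0

# Midpoints of a uniform grid on [-10, 10] for numerical integration.
STEP = 0.001
GRID = [-10.0 + (k + 0.5) * STEP for k in range(20000)]

def integrate(h):
    """Midpoint Riemann sum of h over the grid."""
    return sum(h(x) for x in GRID) * STEP

def errors(rule):
    """Return (L0, L1): P(rule = 1 | Y = 0) and P(rule = 0 | Y = 1)."""
    l0 = integrate(lambda x: rule(x) * f0(x))
    l1 = integrate(lambda x: (1 - rule(x)) * f1(x))
    return l0, l1

# Part (a): the key inequality, checked at every grid point.
key_inequality_holds = all(
    g(x) * (C * f1(x) - f0(x)) <= g_c(x) * (C * f1(x) - f0(x)) for x in GRID
)

L0_g, L1_g = errors(g)
L0_gc, L1_gc = errors(g_c)

print("key inequality holds on grid:", key_inequality_holds)
print("L0(g)  =", round(L0_g, 4), " L1(g)  =", round(L1_g, 4))
print("L0(gc) =", round(L0_gc, 4), " L1(gc) =", round(L1_gc, 4))
```

In this example the competing rule has the smaller $L^0$ and, as the statement predicts, the larger $L^1$. A check like this only illustrates the claim for one choice of densities and one competitor; the proof in parts (a) to (d) is what covers every classifier $g$.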
