Question: Problem 6 (ADABOOST-TYPE ALGORITHMS: ALTERNATIVE OBJECTIVE FUNCTIONS) This problem studies boosting-type algorithms defined with objective functions different from that of AdaBoost. We assume that the

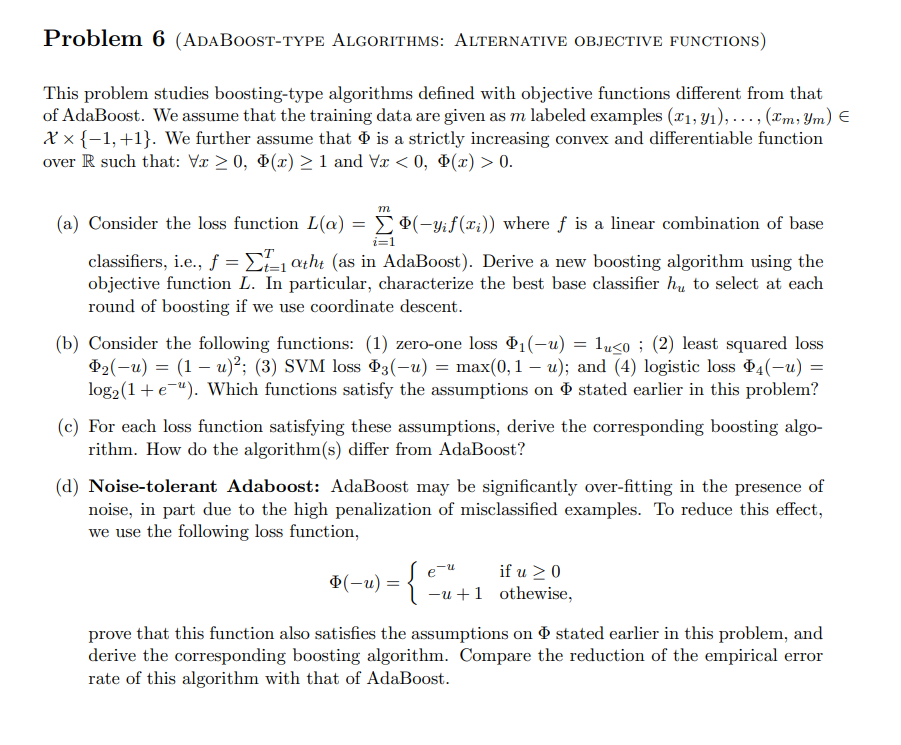

Problem 6 (ADABOOST-TYPE ALGORITHMS: ALTERNATIVE OBJECTIVE FUNCTIONS) This problem studies boosting-type algorithms defined with objective functions different from that of AdaBoost. We assume that the training data are given as m labeled examples (I1, y),..., (I'm, Ym) E Xx{-1, +1}. We further assume that is a strictly increasing convex and differentiable function over R such that: Vx > 0, 0(0) > 1 and Vx 0. m i=1 = (a) Consider the loss function L(a) = 0(-yif (xi)) where f is a linear combination of base classifiers, i.e., f = CF-1 otht (as in AdaBoost). Derive a new boosting algorithm using the objective function L. In particular, characterize the best base classifier hy to select at each round of boosting if we use coordinate descent. (b) Consider the following functions: (1) zero-one loss 1(-u) = luso ; (2) least squared loss 02(-u) = (1 u)?; (3) SVM loss 03(-u) max(0,1 u); and (4) logistic loss (4(-u) = log2 (1+e-u). Which functions satisfy the assumptions on stated earlier in this problem? (c) For each loss function satisfying these assumptions, derive the corresponding boosting algo- rithm. How do the algorithm(s) differ from AdaBoost? (d) Noise-tolerant Adaboost: AdaBoost may be significantly over-fitting in the presence of noise, in part due to the high penalization of misclassified examples. To reduce this effect, we use the following loss function, e- (-) ={ if u > 0 -u+1 othewise, prove that this function also satisfies the assumptions on stated earlier in this problem, and derive the corresponding boosting algorithm. Compare the reduction of the empirical error rate of this algorithm with that of AdaBoost. Problem 6 (ADABOOST-TYPE ALGORITHMS: ALTERNATIVE OBJECTIVE FUNCTIONS) This problem studies boosting-type algorithms defined with objective functions different from that of AdaBoost. We assume that the training data are given as m labeled examples (I1, y),..., (I'm, Ym) E Xx{-1, +1}. We further assume that is a strictly increasing convex and differentiable function over R such that: Vx > 0, 0(0) > 1 and Vx 0. m i=1 = (a) Consider the loss function L(a) = 0(-yif (xi)) where f is a linear combination of base classifiers, i.e., f = CF-1 otht (as in AdaBoost). Derive a new boosting algorithm using the objective function L. In particular, characterize the best base classifier hy to select at each round of boosting if we use coordinate descent. (b) Consider the following functions: (1) zero-one loss 1(-u) = luso ; (2) least squared loss 02(-u) = (1 u)?; (3) SVM loss 03(-u) max(0,1 u); and (4) logistic loss (4(-u) = log2 (1+e-u). Which functions satisfy the assumptions on stated earlier in this problem? (c) For each loss function satisfying these assumptions, derive the corresponding boosting algo- rithm. How do the algorithm(s) differ from AdaBoost? (d) Noise-tolerant Adaboost: AdaBoost may be significantly over-fitting in the presence of noise, in part due to the high penalization of misclassified examples. To reduce this effect, we use the following loss function, e- (-) ={ if u > 0 -u+1 othewise, prove that this function also satisfies the assumptions on stated earlier in this problem, and derive the corresponding boosting algorithm. Compare the reduction of the empirical error rate of this algorithm with that of AdaBoost

Step by Step Solution

There are 3 Steps involved in it

Get step-by-step solutions from verified subject matter experts