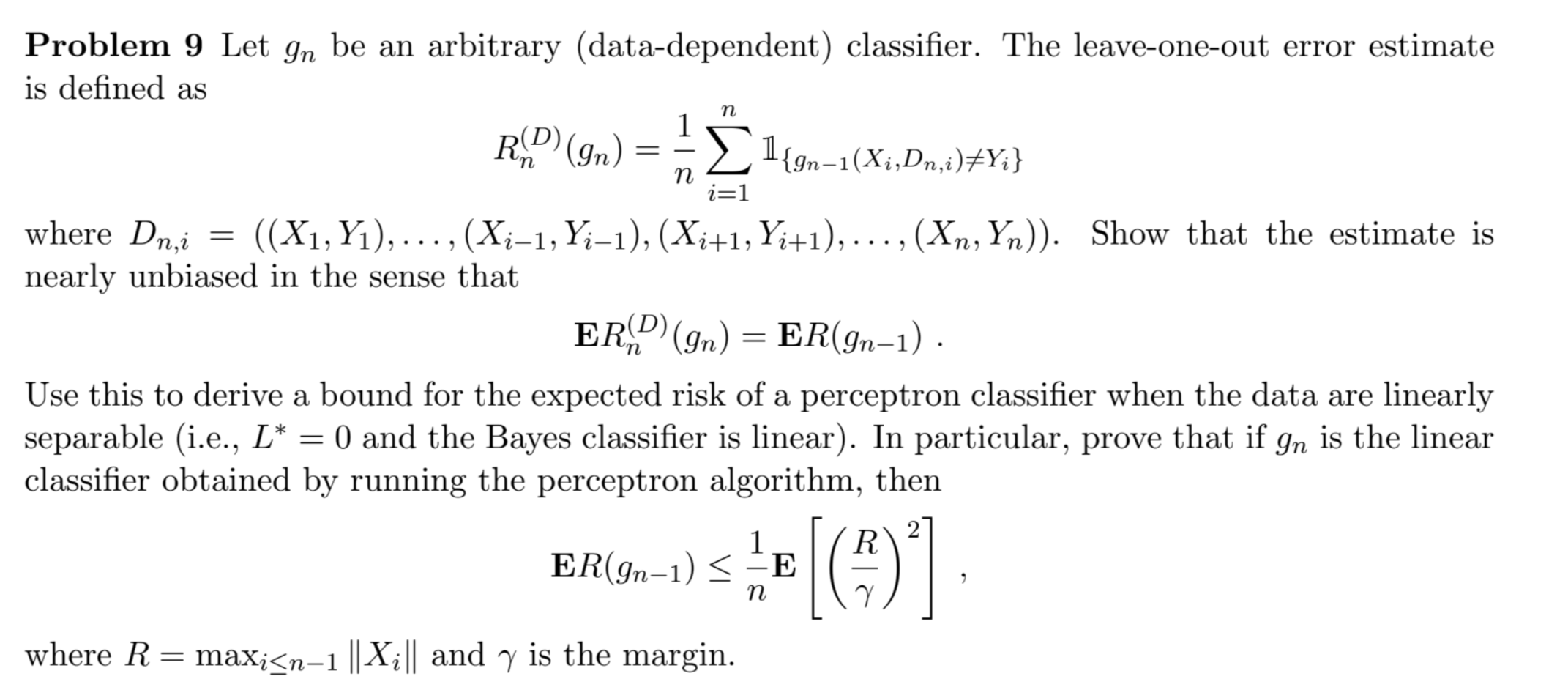

Question: Problem 9. Let $g_n$ be an arbitrary (data-dependent) classifier. The leave-one-out error estimate is defined as

$$L_n^{(D)} = \frac{1}{n} \sum_{i=1}^{n} \mathbb{1}_{\{g_{n-1}(X_i, D_{n,i}) \neq Y_i\}},$$

where $D_{n,i} = \big((X_1,Y_1),\dots,(X_{i-1},Y_{i-1}),(X_{i+1},Y_{i+1}),\dots,(X_n,Y_n)\big)$. Show that the estimate is nearly unbiased in the sense that

$$\mathbb{E}\, L_n^{(D)} = \mathbb{E}\, L(g_{n-1}).$$
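A possible starting point for the unbiasedness claim, sketched under the assumptions that the pairs $(X_i, Y_i)$ are i.i.d. and that $L(g_{n-1})$ denotes the probability that $g_{n-1}$, trained on $n-1$ pairs, misclassifies an independent pair. For every $i$, the triple $(X_i, Y_i, D_{n,i})$ consists of one test pair and $n-1$ independent training pairs, so all $n$ terms of the sum have the same expectation:

$$
\mathbb{E}\, L_n^{(D)}
= \frac{1}{n} \sum_{i=1}^{n} \mathbb{P}\{ g_{n-1}(X_i, D_{n,i}) \neq Y_i \}
= \mathbb{P}\{ g_{n-1}(X_n, D_{n,n}) \neq Y_n \}
= \mathbb{E}\, L(g_{n-1}).
$$

The estimate is therefore unbiased for the expected risk at sample size $n-1$ rather than $n$, which is the sense of "nearly".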

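Use this to derive a bound for the expected risk of a perceptron classifier when the data are linearly separable, i.e., $L^* = 0$ and the Bayes classifier is linear. In particular, prove that if $g_n$ is the linear classifier obtained by running the perceptron algorithm, then

$$\mathbb{E}\, L(g_n) \le \frac{1}{n+1}\, \mathbb{E}\left[\left(\frac{R}{\gamma}\right)^{2}\right],$$

where $R = \max_{i=1,\dots,n+1} \|X_i\|$ and $\gamma$ is the margin.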
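The quantities in the problem can be checked numerically. The following is a minimal sketch, not a solution: it assumes a homogeneous perceptron (no bias term) cycled until it makes no more mistakes, and synthetic separable data built around a known unit vector `w_star`. All names (`perceptron`, `leave_one_out_error`, `w_star`) are hypothetical, and the margin is measured with respect to `w_star`, which can only underestimate the best achievable margin.

```python
import numpy as np

def perceptron(X, y, max_epochs=1000):
    """Cycle through the data until a full pass makes no update.

    Returns the weight vector and the total number of updates.
    """
    w = np.zeros(X.shape[1])
    updates = 0
    for _ in range(max_epochs):
        changed = False
        for x_i, y_i in zip(X, y):
            if y_i * np.dot(w, x_i) <= 0:  # mistake (or zero score): update
                w += y_i * x_i
                updates += 1
                changed = True
        if not changed:
            break
    return w, updates

def predict(w, x):
    """Sign of the linear score, with labels in {-1, +1}."""
    return 1 if np.dot(w, x) > 0 else -1

def leave_one_out_error(X, y):
    """Retrain without point i, test on point i, and average over i."""
    n = len(y)
    mistakes = 0
    for i in range(n):
        keep = np.arange(n) != i
        w, _ = perceptron(X[keep], y[keep])
        mistakes += int(predict(w, X[i]) != y[i])
    return mistakes / n

# Synthetic separable sample: keep only points at distance >= 0.3
# from the hyperplane defined by w_star (an assumed construction).
rng = np.random.default_rng(0)
w_star = np.array([1.0, -1.0]) / np.sqrt(2.0)
X = rng.uniform(-1.0, 1.0, size=(400, 2))
X = X[np.abs(X @ w_star) >= 0.3][:100]
y = np.where(X @ w_star > 0, 1, -1)

n = len(y)
R = np.max(np.linalg.norm(X, axis=1))   # radius of the sample
gamma = np.min(y * (X @ w_star))        # margin with respect to w_star
_, updates_full = perceptron(X, y)      # updates on the full sample
loo = leave_one_out_error(X, y)
bound = (R / gamma) ** 2 / n

print(f"n={n}  LOO error={loo:.3f}  updates={updates_full}  (R/gamma)^2/n={bound:.3f}")
```

The comparison reflects the usual argument: a point that never triggers an update can be removed without changing the final classifier, so the number of leave-one-out mistakes is at most the number of updates, which in turn is at most $(R/\gamma)^2$.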
