Question: Programming assignment (80 points)

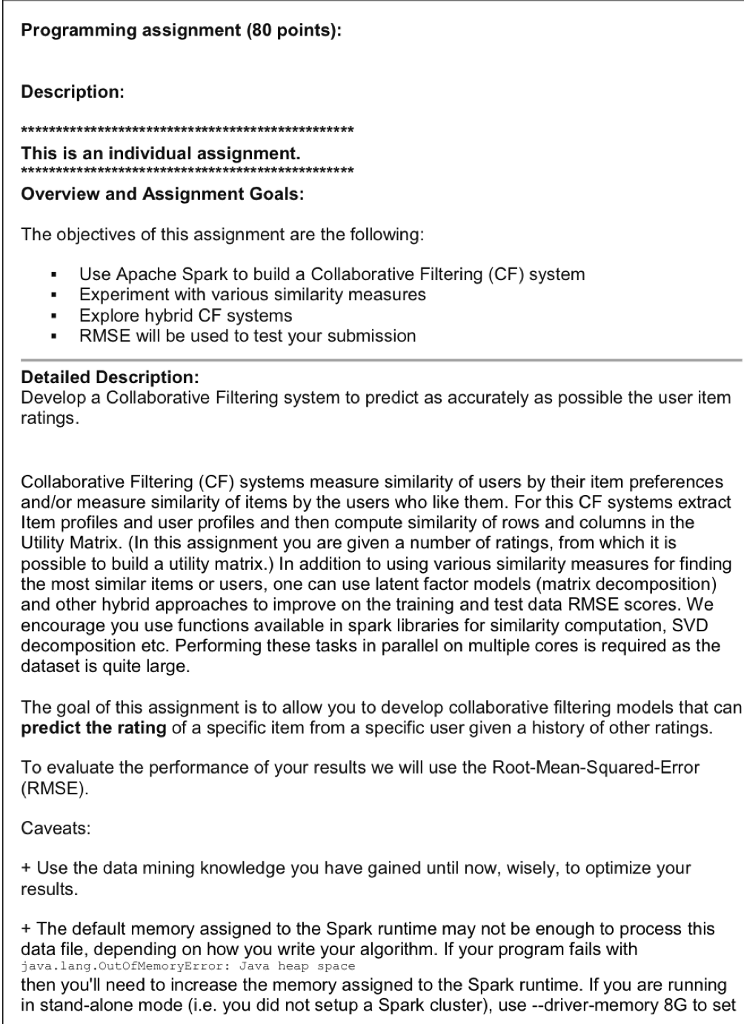

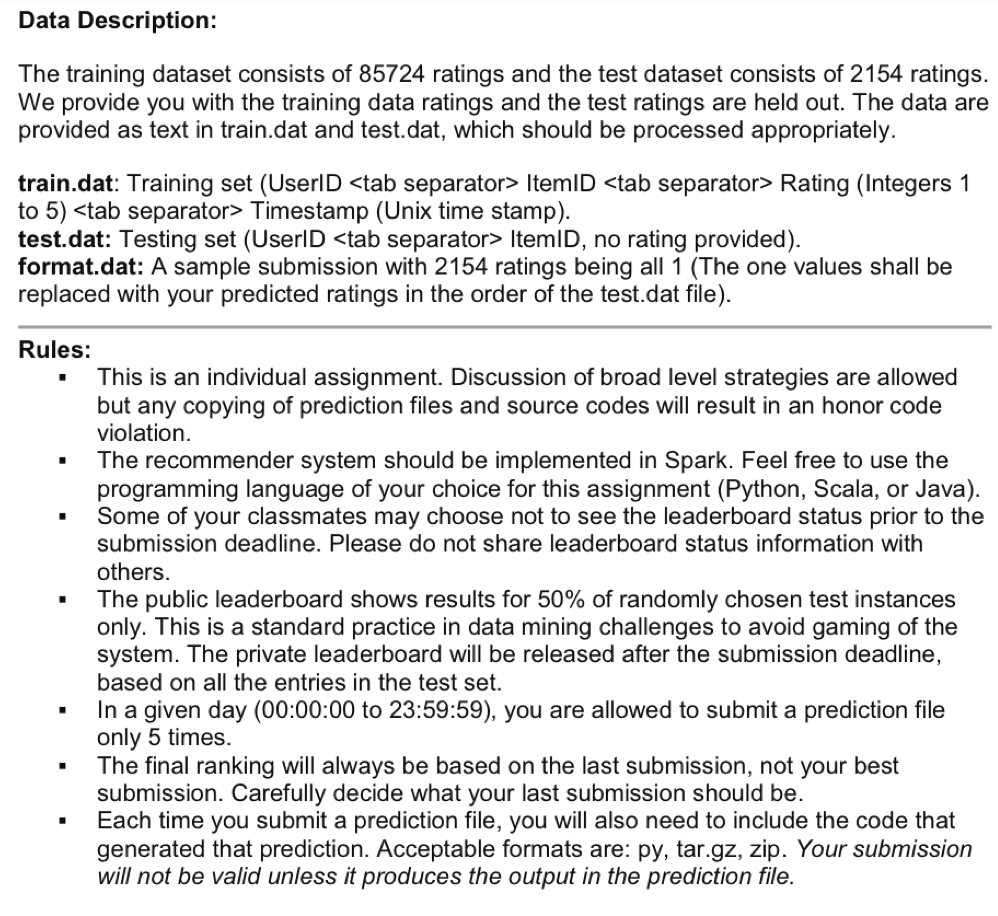

Programming assignment (80 points)

Description

This is an individual assignment.

Overview and Assignment Goals:

The objectives of this assignment are the following:

+ Use Apache Spark to build a Collaborative Filtering (CF) system
+ Experiment with various similarity measures
+ Explore hybrid CF systems

RMSE will be used to test your submission.

Detailed Description:

Develop a Collaborative Filtering system to predict the user-item ratings as accurately as possible.

Collaborative Filtering (CF) systems measure the similarity of users by their item preferences and/or measure the similarity of items by the users who like them. For this, CF systems extract item profiles and user profiles and then compute the similarity of rows and columns in the utility matrix. (In this assignment you are given a number of ratings, from which it is possible to build a utility matrix.)

In addition to using various similarity measures for finding the most similar items or users, one can use latent factor models (matrix decomposition) and other hybrid approaches to improve the RMSE scores on the training and test data. We encourage you to use functions available in Spark libraries for similarity computation, SVD decomposition, etc. Performing these tasks in parallel on multiple cores is required, as the dataset is quite large.

The goal of this assignment is to allow you to develop collaborative filtering models that can predict the rating of a specific item from a specific user, given a history of other ratings.

To evaluate the performance of your results we will use the Root-Mean-Squared-Error (RMSE).

Caveats

+ Use the data mining knowledge you have gained until now, wisely, to optimize your results.
+ The default memory assigned to the Spark runtime may not be enough to process this data file, depending on how you write your algorithm. If your program fails with java.lang.OutOfMemoryError: Java heap space, then you'll need to increase the memory assigned to the Spark runtime. If you are running in stand-alone mode (i.e. you did not set up a Spark cluster), use --driver-memory 8G to set
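The ideas in the description (a utility matrix built from ratings, a similarity measure between items, and RMSE as the evaluation metric) can be illustrated with a small sketch. This is plain Python on a toy dataset, not the Spark implementation the assignment asks for. The ratings, the function names (`build_item_profiles`, `cosine_similarity`, `predict`, `rmse`), and the choice of cosine similarity with a similarity-weighted average are all assumptions made for illustration. A real submission would distribute the same logic with Spark and would likely compare several similarity measures.

```python
import math
from collections import defaultdict

# Toy (user, item, rating) triples; the values are invented for illustration.
ratings = [
    ("u1", "i1", 5.0), ("u1", "i2", 3.0), ("u1", "i3", 4.0),
    ("u2", "i1", 4.0), ("u2", "i2", 2.0),
    ("u3", "i1", 1.0), ("u3", "i2", 5.0), ("u3", "i3", 2.0),
]

def build_item_profiles(triples):
    """Sparse utility matrix, stored column-wise: item -> {user: rating}."""
    profiles = defaultdict(dict)
    for user, item, rating in triples:
        profiles[item][user] = rating
    return dict(profiles)

def cosine_similarity(a, b):
    """Cosine similarity of two sparse vectors; missing ratings count as 0."""
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def predict(user, item, profiles):
    """Item-item CF: similarity-weighted average of the user's other ratings.

    Returns None when the user has rated no item similar to the target.
    """
    target = profiles.get(item, {})
    weighted_sum = 0.0
    weight_total = 0.0
    for other_item, profile in profiles.items():
        if other_item == item or user not in profile:
            continue
        sim = cosine_similarity(target, profile)
        if sim > 0:
            weighted_sum += sim * profile[user]
            weight_total += sim
    return weighted_sum / weight_total if weight_total > 0 else None

def rmse(pairs):
    """Root-mean-squared error over (predicted, actual) pairs."""
    pairs = list(pairs)
    return math.sqrt(sum((p - a) ** 2 for p, a in pairs) / len(pairs))

profiles = build_item_profiles(ratings)
# Predict the rating u2 would give i3, which is missing from the toy data.
predicted_u2_i3 = predict("u2", "i3", profiles)
```

Because the prediction is a weighted average of ratings the user has already given, it always falls between that user's lowest and highest rating on the similar items. Treating missing ratings as 0 in the norms is a common simplification; centering each rating on the user's mean (adjusted cosine or Pearson) is one of the alternative measures the assignment invites you to try.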
