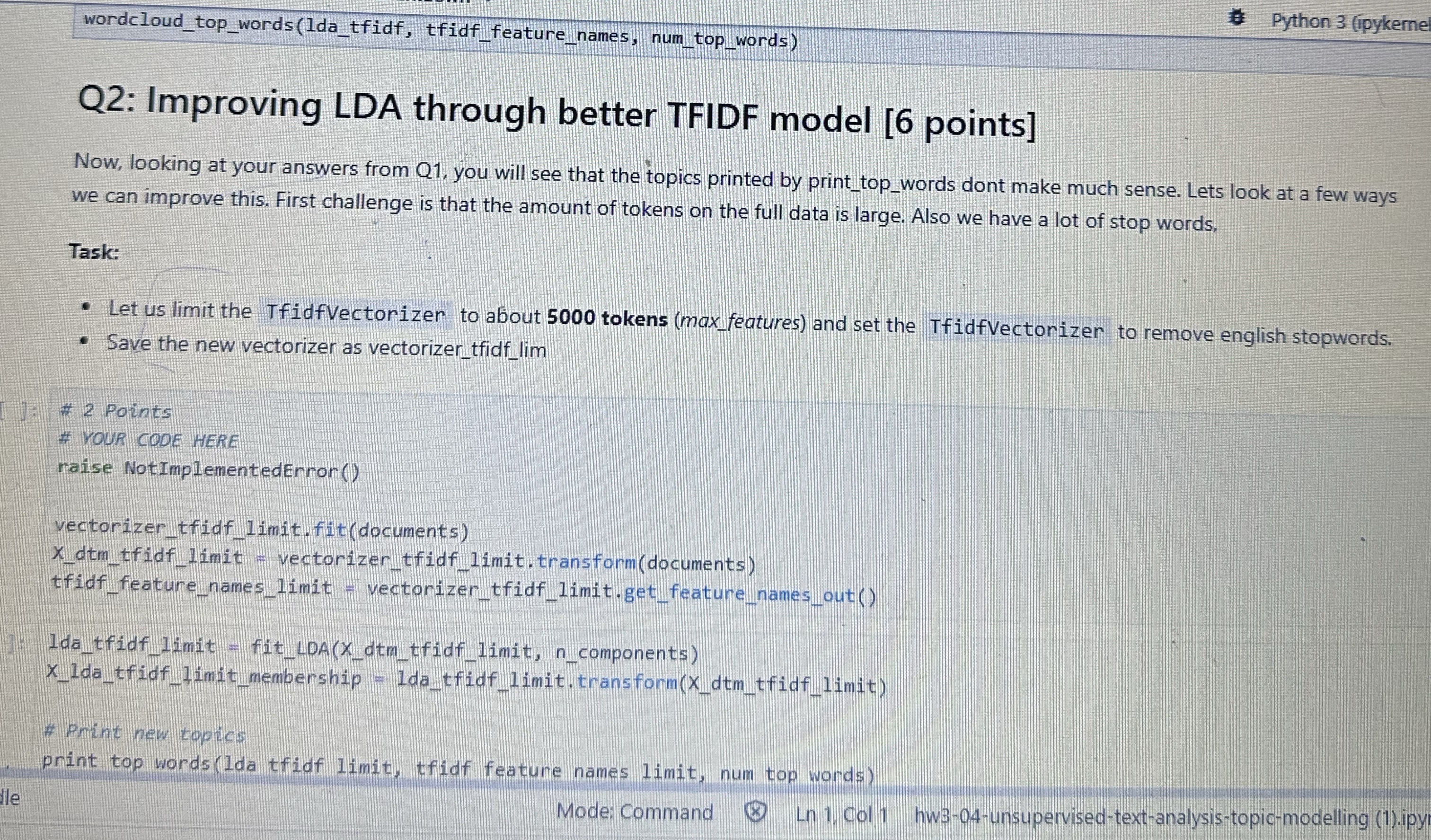

Question: Q2: Improving LDA through better TFIDF model [6 points]


Now, looking at your answers from Q1, you will see that the topics printed by print_top_words don't make much sense. Let's look at a few ways we can improve this. The first challenge is that the number of tokens in the full data is large. Also, we have a lot of stop words.

Task:

Let us limit the TfidfVectorizer to a capped number of tokens (max_features) and set the TfidfVectorizer to remove English stop words.

Save the new vectorizer as vectorizer_tfidf_limit.

# Points

# YOUR CODE HERE

raise NotImplementedError()

vectorizer_tfidf_limit.fit(documents)

tfidf_feature_names_limit = vectorizer_tfidf_limit.get_feature_names_out()

lda_tfidf_limit = fit_LDA(X=dtm_tfidf_limit, components=...)
