Question:

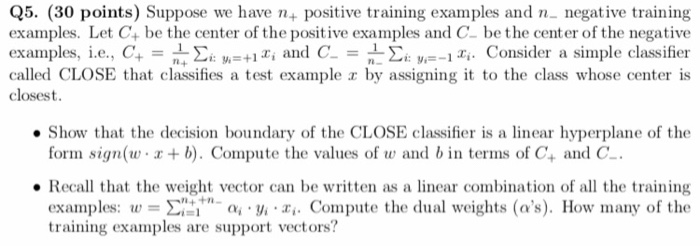

Q5. (30 points) Suppose we have $n_+$ positive training examples and $n_-$ negative training examples. Let $C_+$ be the center of the positive examples and $C_-$ be the center of the negative examples, i.e. $C_+ = \frac{1}{n_+} \sum_{i:\, y_i = +1} x_i$ and $C_- = \frac{1}{n_-} \sum_{i:\, y_i = -1} x_i$. Consider a simple classifier called CLOSE that classifies a test example $x$ by assigning it to the class whose center is closest. Show that the decision boundary of the CLOSE classifier is a linear hyperplane of the form $\mathrm{sign}(w \cdot x + b)$. Compute the values of $w$ and $b$ in terms of $C_+$ and $C_-$. Recall that the weight vector can be written as a linear combination of all the training examples: $w = \sum_i \alpha_i \, y_i \, x_i$. Compute the dual weights (the $\alpha_i$'s). How many of the training examples are support vectors?
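One way to approach the question is to expand $\|x - C_+\|^2 < \|x - C_-\|^2$. The $\|x\|^2$ terms cancel, which leaves $(C_+ - C_-) \cdot x + \tfrac{1}{2}\left(\|C_-\|^2 - \|C_+\|^2\right) > 0$. This suggests $w = C_+ - C_-$ and $b = \tfrac{1}{2}(\|C_-\|^2 - \|C_+\|^2)$, up to a positive scale factor, which is a choice made here and is not fixed by the question. Substituting the definitions of the centers then gives $\alpha_i = 1/n_+$ for positive examples and $\alpha_i = 1/n_-$ for negative ones, so every $\alpha_i$ is nonzero and all $n_+ + n_-$ examples would count as support vectors. The Python sketch below checks these claims numerically on a small made-up data set. The function names and the data are illustrative assumptions, not part of the original problem.

```python
def mean(points):
    """Component-wise mean of a non-empty list of equal-length vectors."""
    n = len(points)
    return [sum(col) / n for col in zip(*points)]

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def sq_dist(u, v):
    return sum((a - b) ** 2 for a, b in zip(u, v))

def close_predict(x, c_pos, c_neg):
    """CLOSE rule: +1 if x is strictly nearer to c_pos, otherwise -1."""
    return 1 if sq_dist(x, c_pos) < sq_dist(x, c_neg) else -1

def linear_params(c_pos, c_neg):
    """Assumed scaling: w = C+ - C-, b = (|C-|^2 - |C+|^2) / 2."""
    w = [p - q for p, q in zip(c_pos, c_neg)]
    b = (dot(c_neg, c_neg) - dot(c_pos, c_pos)) / 2
    return w, b

def linear_predict(x, w, b):
    return 1 if dot(w, x) + b > 0 else -1

def dual_weights(labels):
    """alpha_i = 1/n+ for positive examples, 1/n- for negative examples."""
    n_pos = sum(1 for y in labels if y == 1)
    n_neg = len(labels) - n_pos
    return [1 / n_pos if y == 1 else 1 / n_neg for y in labels]

# Made-up toy data: three positive and two negative examples in 2-D.
X = [[2, 0], [4, 0], [3, 3], [-1, 0], [-3, 0]]
y = [1, 1, 1, -1, -1]

c_pos = mean([x for x, label in zip(X, y) if label == 1])
c_neg = mean([x for x, label in zip(X, y) if label == -1])
w, b = linear_params(c_pos, c_neg)

# Rebuild w from the dual form: w = sum_i alpha_i * y_i * x_i.
alpha = dual_weights(y)
w_dual = [sum(a * label * x[j] for a, label, x in zip(alpha, y, X))
          for j in range(len(X[0]))]

# Compare the two classifiers on a few test points away from the boundary.
test_points = [[0, 0], [1, 0], [0, 5], [-4, 2], [6, -1]]
agree = all(close_predict(x, c_pos, c_neg) == linear_predict(x, w, b)
            for x in test_points)

print("w =", w, "b =", b)
print("w from dual weights =", w_dual)
print("CLOSE and linear rule agree:", agree)
print("nonzero dual weights:", sum(1 for a in alpha if a > 0), "of", len(alpha))
```

The dual-form reconstruction is compared against `w` only up to floating-point rounding, since fractions such as 1/3 are not exactly representable.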
