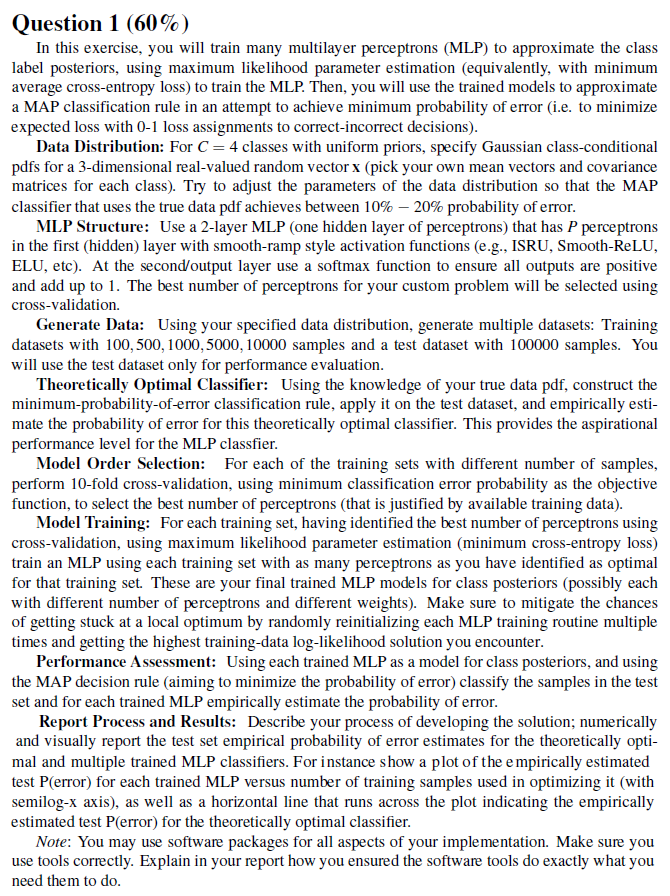

Question 1 (60%): MUST have comprehensive code, thorough maths with explanations.

In this exercise, you will train many multilayer perceptrons (MLPs) to approximate the class label posteriors, using maximum likelihood parameter estimation (equivalently, minimum average cross-entropy loss) to train the MLP. Then, you will use the trained models to approximate a MAP classification rule in an attempt to achieve minimum probability of error (i.e., to minimize expected loss with 0-1 loss assignments to correct/incorrect decisions).
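
The two equivalences stated above can be written out as a short derivation. The notation is an assumption introduced here for illustration: training pairs (x_n, y_n) for n = 1..N, MLP outputs h_l(x; θ) approximating P(L = l | x), and loss values λ_dl for deciding d when the true label is l.

```latex
\begin{align*}
\hat{\theta}_{\mathrm{ML}}
  &= \arg\max_{\theta} \sum_{n=1}^{N} \ln h_{y_n}(x_n;\theta)
   = \arg\min_{\theta} \; -\frac{1}{N} \sum_{n=1}^{N} \sum_{l=1}^{C}
     \mathbb{1}[y_n = l] \, \ln h_l(x_n;\theta), \\
D(x)
  &= \arg\min_{d} \sum_{l=1}^{C} \lambda_{dl} \, P(L = l \mid x),
     \qquad \lambda_{dl} = 1 - \delta_{dl} \\
  &= \arg\min_{d} \bigl( 1 - P(L = d \mid x) \bigr)
   = \arg\max_{l} P(L = l \mid x)
   \approx \arg\max_{l} h_l(x;\hat{\theta}_{\mathrm{ML}}).
\end{align*}
```

The first line shows that the log-likelihood of the labels is the negative of N times the average cross-entropy against one-hot targets, so maximizing one minimizes the other. The remaining lines show that 0-1 loss reduces the minimum expected loss rule to picking the largest posterior.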

Data Distribution: For C classes with uniform priors, specify Gaussian class-conditional pdfs for a dimensional real-valued random vector x (pick your own mean vectors and covariance matrices for each class). Try to adjust the parameters of the data distribution so that the MAP classifier that uses the true data pdf achieves between probability of error.
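
A minimal sketch of how such a distribution could be specified and sampled with NumPy follows. Every number in it (class count, dimension, means, covariance scales, sample size) is a hypothetical choice for illustration and not a value taken from the assignment; `sample_gmm` is likewise an illustrative helper name.

```python
import numpy as np

# Hypothetical parameters for illustration only: the class count, dimension,
# means, and covariances below are assumptions, not values from the assignment.
C, DIM = 4, 3
MEANS = np.array([[0.0, 0.0, 0.0],
                  [2.5, 0.0, 0.0],
                  [0.0, 2.5, 0.0],
                  [0.0, 0.0, 2.5]])
COVS = np.stack([np.eye(DIM) * s for s in (1.0, 1.2, 0.8, 1.5)])
PRIORS = np.full(C, 1.0 / C)  # uniform class priors


def sample_gmm(n, rng):
    """Draw n labelled samples: label ~ priors, then x ~ N(mean[label], cov[label])."""
    labels = rng.choice(C, size=n, p=PRIORS)
    x = np.empty((n, DIM))
    for c in range(C):
        idx = np.flatnonzero(labels == c)
        x[idx] = rng.multivariate_normal(MEANS[c], COVS[c], size=idx.size)
    return x, labels


rng = np.random.default_rng(0)
x_demo, y_demo = sample_gmm(1000, rng)
```

Moving the means closer together or inflating the covariances increases class overlap, which is the knob for tuning the error rate of the true-pdf MAP classifier.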

MLP Structure: Use a 2-layer MLP (one hidden layer of perceptrons) that has P perceptrons in the first (hidden) layer with smooth-ramp style activation functions (e.g., ISRU, Smooth-ReLU, ELU, etc.). At the second/output layer use a softmax function to ensure all outputs are positive and add up to 1. The best number of perceptrons for your custom problem will be selected using cross-validation.
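
The structure described above can be sketched as a plain NumPy forward pass. ELU is picked here as one of the allowed smooth-ramp activations; the function names, the weight scaling, and the sizes in the demo call are assumptions made for illustration.

```python
import numpy as np


def elu(z, alpha=1.0):
    """ELU activation: z for z > 0, alpha * (exp(z) - 1) otherwise."""
    return np.where(z > 0, z, alpha * np.expm1(np.minimum(z, 0.0)))


def softmax(z):
    """Row-wise softmax; subtracting the row max avoids overflow in exp."""
    e = np.exp(z - z.max(axis=1, keepdims=True))
    return e / e.sum(axis=1, keepdims=True)


def init_params(dim_in, n_hidden, n_classes, rng):
    """Small random weights; the scaling is an illustrative choice."""
    return {
        "W1": rng.normal(0.0, 1.0 / np.sqrt(dim_in), (dim_in, n_hidden)),
        "b1": np.zeros(n_hidden),
        "W2": rng.normal(0.0, 1.0 / np.sqrt(n_hidden), (n_hidden, n_classes)),
        "b2": np.zeros(n_classes),
    }


def mlp_posteriors(x, params):
    """Hidden ELU layer followed by a softmax output layer."""
    hidden = elu(x @ params["W1"] + params["b1"])
    return softmax(hidden @ params["W2"] + params["b2"])


# Demo sizes are hypothetical.
rng = np.random.default_rng(0)
params = init_params(dim_in=3, n_hidden=8, n_classes=4, rng=rng)
posteriors = mlp_posteriors(rng.normal(size=(5, 3)), params)
```

Each row of `posteriors` is strictly positive and sums to 1, so it can be read directly as an approximate class posterior vector.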

Generate Data: Using your specified data distribution, generate multiple datasets: training datasets with samples and a test dataset with samples. You will use the test dataset only for performance evaluation.

Theoretically Optimal Classifier: Using the knowledge of your true data pdf, construct the minimum-probability-of-error classification rule, apply it on the test dataset, and empirically estimate the probability of error for this theoretically optimal classifier. This provides the aspirational performance level for the MLP classifier.
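
One way to build this rule is to compare log prior plus log class-conditional density across classes. The sketch below assumes Gaussian class conditionals as stated in the exercise. The two-class, 2-D parameters are hypothetical and exist only to exercise the functions, and `gaussian_logpdf` and `map_classify` are illustrative helper names.

```python
import numpy as np


def gaussian_logpdf(x, mean, cov):
    """Log of the multivariate normal density evaluated at each row of x."""
    d = mean.size
    diff = x - mean
    chol = np.linalg.cholesky(cov)
    # Solve L z = diff^T so that z^T z is the squared Mahalanobis distance.
    z = np.linalg.solve(chol, diff.T)
    maha = np.sum(z ** 2, axis=0)
    logdet = 2.0 * np.sum(np.log(np.diag(chol)))
    return -0.5 * (d * np.log(2.0 * np.pi) + logdet + maha)


def map_classify(x, means, covs, priors):
    """Pick the class maximizing log prior + log class-conditional density."""
    scores = np.stack([np.log(p) + gaussian_logpdf(x, m, c)
                       for m, c, p in zip(means, covs, priors)], axis=1)
    return np.argmax(scores, axis=1)


# Hypothetical two-class, 2-D example used only to exercise the functions.
means = np.array([[0.0, 0.0], [2.0, 2.0]])
covs = np.stack([np.eye(2), np.eye(2)])
priors = np.array([0.5, 0.5])

rng = np.random.default_rng(1)
labels = rng.choice(2, size=5000, p=priors)
x = means[labels] + rng.normal(size=(5000, 2))  # identity covariance
p_error = np.mean(map_classify(x, means, covs, priors) != labels)
```

Working in the log domain avoids underflow of the densities, and the shared evidence term p(x) can be dropped because it does not change the argmax.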

Model Order Selection: For each of the training sets with different number of samples, perform fold cross-validation, using minimum classification error probability as the objective function, to select the best number of perceptrons (that is justified by available training data).
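
The selection loop can be sketched independently of the model being tuned. In the sketch below, `select_order` takes any `fit_predict(x_trn, y_trn, x_val, p)` callable. To keep the block runnable on its own, a k-nearest-neighbour classifier stands in for "train an MLP with p perceptrons"; that swap, the fold count, the candidate list, and the toy data are all assumptions.

```python
import numpy as np


def kfold_indices(n, n_folds, rng):
    """Shuffle 0..n-1 and split into n_folds nearly equal validation folds."""
    return np.array_split(rng.permutation(n), n_folds)


def select_order(x, y, candidates, fit_predict, n_folds, rng):
    """Return the candidate with the lowest mean validation error rate."""
    folds = kfold_indices(len(y), n_folds, rng)
    mean_err = {}
    for p in candidates:
        errs = []
        for k in range(n_folds):
            val = folds[k]
            trn = np.concatenate([folds[j] for j in range(n_folds) if j != k])
            pred = fit_predict(x[trn], y[trn], x[val], p)
            errs.append(np.mean(pred != y[val]))
        mean_err[p] = float(np.mean(errs))
    best = min(mean_err, key=mean_err.get)
    return best, mean_err


def knn_stand_in(x_trn, y_trn, x_val, k):
    """Stand-in model (k-nearest neighbours) so the sketch runs on its own;
    in the exercise this would train an MLP with k perceptrons instead."""
    d2 = ((x_val[:, None, :] - x_trn[None, :, :]) ** 2).sum(axis=2)
    nearest = np.argsort(d2, axis=1)[:, :k]
    votes = y_trn[nearest]
    return np.array([np.bincount(v).argmax() for v in votes])


# Toy two-class data; sizes and fold count are hypothetical.
rng = np.random.default_rng(2)
y = rng.integers(0, 2, size=200)
x = rng.normal(size=(200, 2)) + 2.0 * y[:, None]
best, mean_err = select_order(x, y, [1, 5, 15], knn_stand_in, n_folds=5, rng=rng)
```

The same folds are reused for every candidate so that differences in validation error reflect the candidate rather than the split.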

Model Training: For each training set, having identified the best number of perceptrons using cross-validation, using maximum likelihood parameter estimation (minimum cross-entropy loss), train an MLP using each training set with as many perceptrons as you have identified as optimal for that training set. These are your final trained MLP models for class posteriors (possibly each with different number of perceptrons and different weights). Make sure to mitigate the chances of getting stuck at a local optimum by randomly reinitializing each MLP training routine multiple times and getting the highest training-data log-likelihood solution you encounter.
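
The restart strategy can be sketched with a small NumPy MLP trained by full-batch gradient descent. The optimizer, step count, learning rate, ELU activation, and toy data are all assumptions chosen to keep the example short; a framework optimizer would normally replace `train_once`.

```python
import numpy as np


def forward(x, w):
    a1 = x @ w["W1"] + w["b1"]
    h = np.where(a1 > 0, a1, np.expm1(np.minimum(a1, 0.0)))  # ELU
    a2 = h @ w["W2"] + w["b2"]
    e = np.exp(a2 - a2.max(axis=1, keepdims=True))
    return a1, h, e / e.sum(axis=1, keepdims=True)            # softmax


def train_once(x, y, n_hidden, n_classes, rng, steps=800, lr=0.05):
    """Full-batch gradient descent on the average cross-entropy."""
    n, d = x.shape
    w = {"W1": rng.normal(0, 1 / np.sqrt(d), (d, n_hidden)),
         "b1": np.zeros(n_hidden),
         "W2": rng.normal(0, 1 / np.sqrt(n_hidden), (n_hidden, n_classes)),
         "b2": np.zeros(n_classes)}
    onehot = np.eye(n_classes)[y]
    for _ in range(steps):
        a1, h, p = forward(x, w)
        g2 = (p - onehot) / n  # gradient w.r.t. output pre-activations
        # ELU derivative is 1 for a1 > 0 and exp(a1) = h + 1 otherwise.
        g1 = (g2 @ w["W2"].T) * np.where(a1 > 0, 1.0, h + 1.0)
        grads = {"W2": h.T @ g2, "b2": g2.sum(0),
                 "W1": x.T @ g1, "b1": g1.sum(0)}
        for k in w:
            w[k] -= lr * grads[k]
    p = forward(x, w)[2]
    loss = -np.mean(np.log(p[np.arange(n), y] + 1e-12))
    return w, loss


def train_with_restarts(x, y, n_hidden, n_classes, n_restarts, rng):
    """Keep the run with the lowest training loss (highest log-likelihood)."""
    runs = [train_once(x, y, n_hidden, n_classes, rng) for _ in range(n_restarts)]
    return min(runs, key=lambda run: run[1])


# Toy three-class data; all sizes here are hypothetical.
rng = np.random.default_rng(3)
y = rng.integers(0, 3, size=300)
centers = np.array([[0.0, 0.0], [3.0, 0.0], [0.0, 3.0]])
x = centers[y] + rng.normal(size=(300, 2))
weights, train_loss = train_with_restarts(x, y, n_hidden=6, n_classes=3,
                                          n_restarts=3, rng=rng)
```

Because the average cross-entropy is the negative training log-likelihood divided by N, choosing the restart with the lowest final loss is the same as choosing the one with the highest training-data log-likelihood.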

Performance Assessment: Using each trained MLP as a model for class posteriors, and using the MAP decision rule (aiming to minimize the probability of error), classify the samples in the test set and, for each trained MLP, empirically estimate the probability of error.
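
Given a matrix of approximate posteriors from a trained MLP, the decision and the error estimate reduce to an argmax and a mismatch count. The sketch below uses a tiny hypothetical posterior matrix in place of real MLP outputs; the helper names are illustrative.

```python
import numpy as np


def map_decisions(posteriors):
    """MAP rule under 0-1 loss: choose the class with the largest posterior."""
    return np.argmax(posteriors, axis=1)


def empirical_p_error(posteriors, labels):
    """Fraction of samples whose MAP decision differs from the true label."""
    return float(np.mean(map_decisions(posteriors) != labels))


# Hypothetical posteriors for four samples and three classes.
posteriors = np.array([[0.7, 0.2, 0.1],
                       [0.1, 0.8, 0.1],
                       [0.3, 0.3, 0.4],
                       [0.5, 0.4, 0.1]])
labels = np.array([0, 1, 2, 1])
p_err = empirical_p_error(posteriors, labels)
```

With uniform priors already reflected in the training data, no reweighting of the MLP outputs is needed before taking the argmax.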

Report Process and Results: Describe your process of developing the solution; numerically and visually report the test set empirical probability of error estimates for the theoretically optimal and multiple trained MLP classifiers. For instance, show a plot of the empirically estimated test P(error) for each trained MLP versus the number of training samples used in optimizing it (with a semilog-x axis), as well as a horizontal line that runs across the plot indicating the empirically estimated test P(error) for the theoretically optimal classifier.

Note: You may use software packages for all aspects of your implementation. Make sure you use tools correctly. Explain in your report how you ensured the software tools do exactly what you need them to do.
