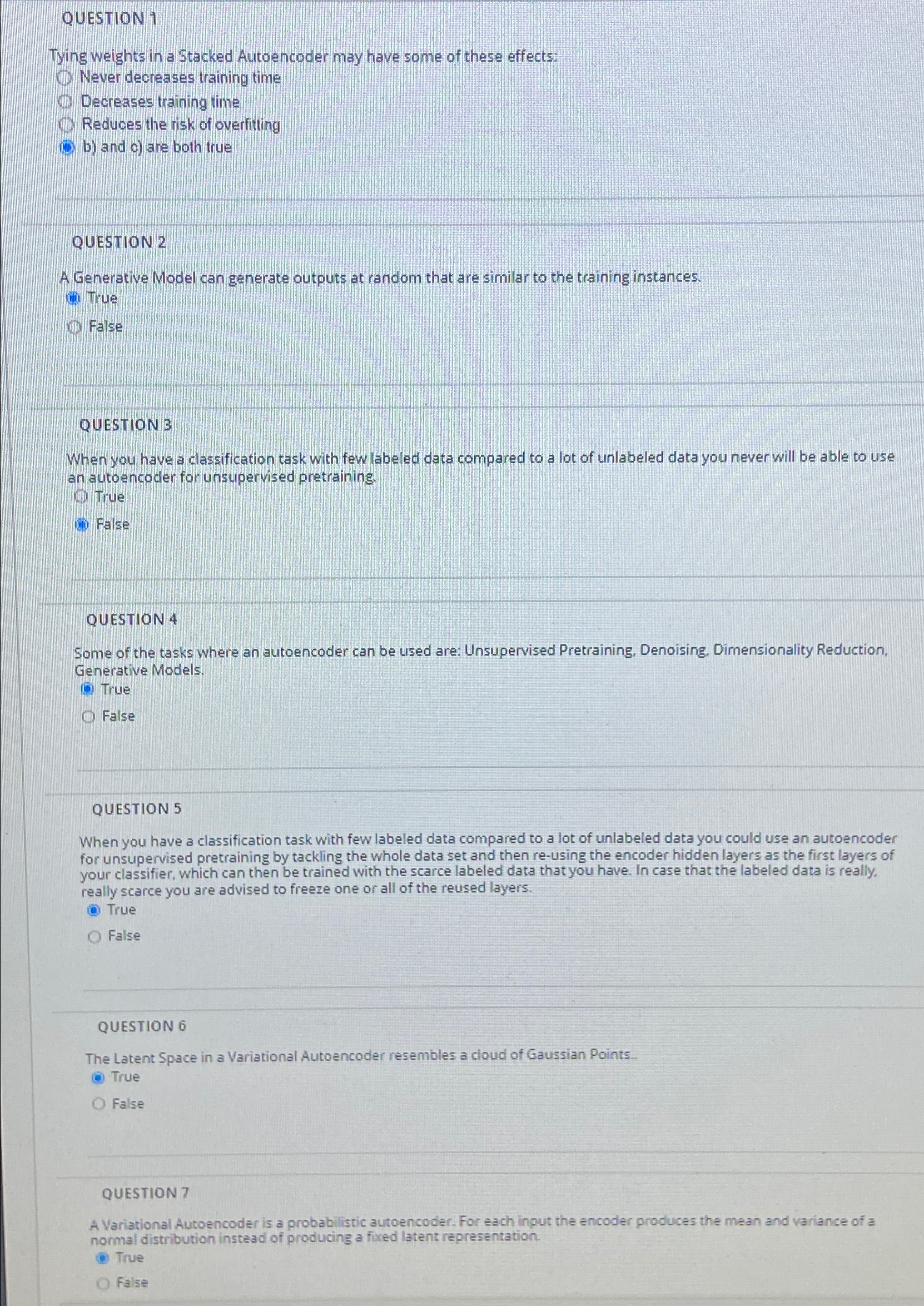


QUESTION

Tying weights in a Stacked Autoencoder may have some of these effects:

a. Never decreases training time

b. Decreases training time

c. Reduces the risk of overfitting

d. b and c are both true
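One way to see why tying weights matters is to count parameters. The sketch below assumes a symmetric stacked autoencoder in which each decoder layer reuses the transpose of the matching encoder weight matrix and keeps only its own bias vector. The function name `count_params` and the 784-100-30 layer sizes are illustrative choices, not taken from the question.

```python
def count_params(sizes, tied):
    """Count weights and biases in a symmetric stacked autoencoder.

    `sizes` lists the encoder widths from input to coding layer,
    e.g. [784, 100, 30]; the decoder mirrors them back to the input width.
    """
    pairs = list(zip(sizes[:-1], sizes[1:]))  # (n_in, n_out) per encoder layer
    enc_weights = sum(n_in * n_out for n_in, n_out in pairs)
    enc_biases = sum(n_out for _, n_out in pairs)
    # Each decoder layer maps n_out back to n_in, so it needs n_in biases.
    dec_biases = sum(n_in for n_in, _ in pairs)
    # Tied: decoder weight matrices are the transposed encoder ones (no new weights).
    dec_weights = 0 if tied else enc_weights
    return enc_weights + enc_biases + dec_weights + dec_biases


untied = count_params([784, 100, 30], tied=False)
tied = count_params([784, 100, 30], tied=True)
print(untied, tied)
```

With these sizes, tying cuts the parameter count roughly in half (from 163,814 to 82,414). Fewer parameters is the usual argument for both faster training and a lower risk of overfitting.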

QUESTION

A Generative Model can generate outputs at random that are similar to the training instances.

True

False
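As a deliberately tiny illustration of what "generative" means, the sketch below fits a one-dimensional Gaussian to a few made-up training values and then samples new values at random from it. The data and the function names are hypothetical. A real generative model, such as a variational autoencoder, learns a far richer distribution, but the principle of sampling new instances that resemble the training set is the same.

```python
import random
import statistics


def fit_gaussian(data):
    """Estimate the mean and standard deviation of the training data."""
    return statistics.fmean(data), statistics.stdev(data)


def generate(mean, std, n, seed=0):
    """Draw n new values at random from the fitted distribution."""
    rng = random.Random(seed)
    return [rng.gauss(mean, std) for _ in range(n)]


training = [4.8, 5.1, 5.0, 4.9, 5.2]  # made-up training instances
mean, std = fit_gaussian(training)
samples = generate(mean, std, n=1000)
# The generated values cluster around the training data's mean.
print(round(mean, 2), round(statistics.fmean(samples), 2))
```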

QUESTION

When you have a classification task with little labeled data compared to a lot of unlabeled data, you will never be able to use an autoencoder for unsupervised pretraining.

True

False

QUESTION

Some of the tasks where an autoencoder can be used are: Unsupervised Pretraining, Denoising, Dimensionality Reduction, Generative Models.

True

False

QUESTION

When you have a classification task with little labeled data compared to a lot of unlabeled data, you could use an autoencoder for unsupervised pretraining: train it on the whole data set, then reuse the encoder's hidden layers as the first layers of your classifier, which can then be trained on the scarce labeled data that you have. If the labeled data is really scarce, you are advised to freeze one or all of the reused layers.

True

False
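The reuse-and-freeze recipe in this statement can be sketched as plain bookkeeping. The `Dense` class and `build_classifier` function below are hypothetical and framework-agnostic: they track only layer shapes and a `trainable` flag (real layers would also carry the pretrained weights). They show how freezing reused encoder layers shrinks the number of parameters that the scarce labeled data has to fit. The 784-100-30 encoder and the 10 output classes are illustrative assumptions.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Dense:
    """Hypothetical dense layer: shapes plus a trainable flag."""
    n_in: int
    n_out: int
    trainable: bool = True

    def n_params(self) -> int:
        return self.n_in * self.n_out + self.n_out  # weight matrix + biases


def build_classifier(encoder, n_classes, n_frozen):
    """Reuse the pretrained encoder layers, freezing the first n_frozen,
    and stack a new, trainable output layer on top."""
    reused = [replace(layer, trainable=i >= n_frozen)
              for i, layer in enumerate(encoder)]
    head = Dense(encoder[-1].n_out, n_classes)
    return reused + [head]


def trainable_params(model):
    return sum(layer.n_params() for layer in model if layer.trainable)


# Encoder hidden layers obtained from unsupervised pretraining on all the data.
encoder = [Dense(784, 100), Dense(100, 30)]
for n_frozen in range(len(encoder) + 1):
    model = build_classifier(encoder, n_classes=10, n_frozen=n_frozen)
    print(n_frozen, trainable_params(model))
```

In Keras, for example, freezing corresponds to setting a reused layer's `trainable` attribute to `False` before compiling the classifier.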

QUESTION

The Latent Space in a Variational Autoencoder resembles a cloud of Gaussian Points.

True

False
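The Gaussian shape of the latent space comes from the VAE's latent loss: the Kullback-Leibler divergence between each coding distribution N(mu, sigma^2) and a standard normal prior. Minimizing it pulls the codings toward N(0, 1), so after training they tend to look like a cloud of Gaussian points. The sketch below computes this loss for one instance, assuming, as many implementations do, that the encoder outputs the log-variance gamma = log(sigma^2) rather than sigma itself. The function name `latent_loss` is hypothetical.

```python
import math


def latent_loss(mu, log_var):
    """KL divergence between N(mu, exp(log_var)) and N(0, 1), summed over
    the coding dimensions of one instance."""
    return 0.5 * sum(math.exp(g) + m ** 2 - 1 - g for m, g in zip(mu, log_var))


print(latent_loss([0.0, 0.0], [0.0, 0.0]))  # 0.0: already standard normal
print(latent_loss([1.0, 0.0], [0.0, 0.0]))  # 0.5: mean shifted away from 0
```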

QUESTION

A Variational Autoencoder is a probabilistic autoencoder. For each input the encoder produces the mean and variance of a normal distribution instead of producing a fixed latent representation.

True

False
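The sampling step described in this statement is usually implemented with the reparameterization trick. The encoder outputs a mean mu and, in many implementations, a log-variance gamma = log(sigma^2); the coding is then z = mu + exp(gamma / 2) * eps, with eps drawn from N(0, 1). The sketch below shows this for one instance in plain Python; the function name, the example encoder outputs, and the seed are assumptions for illustration.

```python
import math
import random


def sample_coding(mu, log_var, rng):
    """Sample z ~ N(mu, exp(log_var)) as mu + sigma * eps, with eps ~ N(0, 1)."""
    return [m + math.exp(0.5 * g) * rng.gauss(0.0, 1.0)
            for m, g in zip(mu, log_var)]


rng = random.Random(42)
mu, log_var = [0.5, -1.0], [0.0, -2.0]  # what the encoder might output
z1 = sample_coding(mu, log_var, rng)
z2 = sample_coding(mu, log_var, rng)
print(z1, z2)  # same input, two different codings
```

Because the randomness enters only through eps, gradients can still flow back through mu and log_var during training.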
