Question: Question 2 Model - Based RL: Cycle Consider an MDP with 3 states, A , B and C; and 2 actions Clockwise and Counterclockwise. We

Question ModelBased RL: Cycle

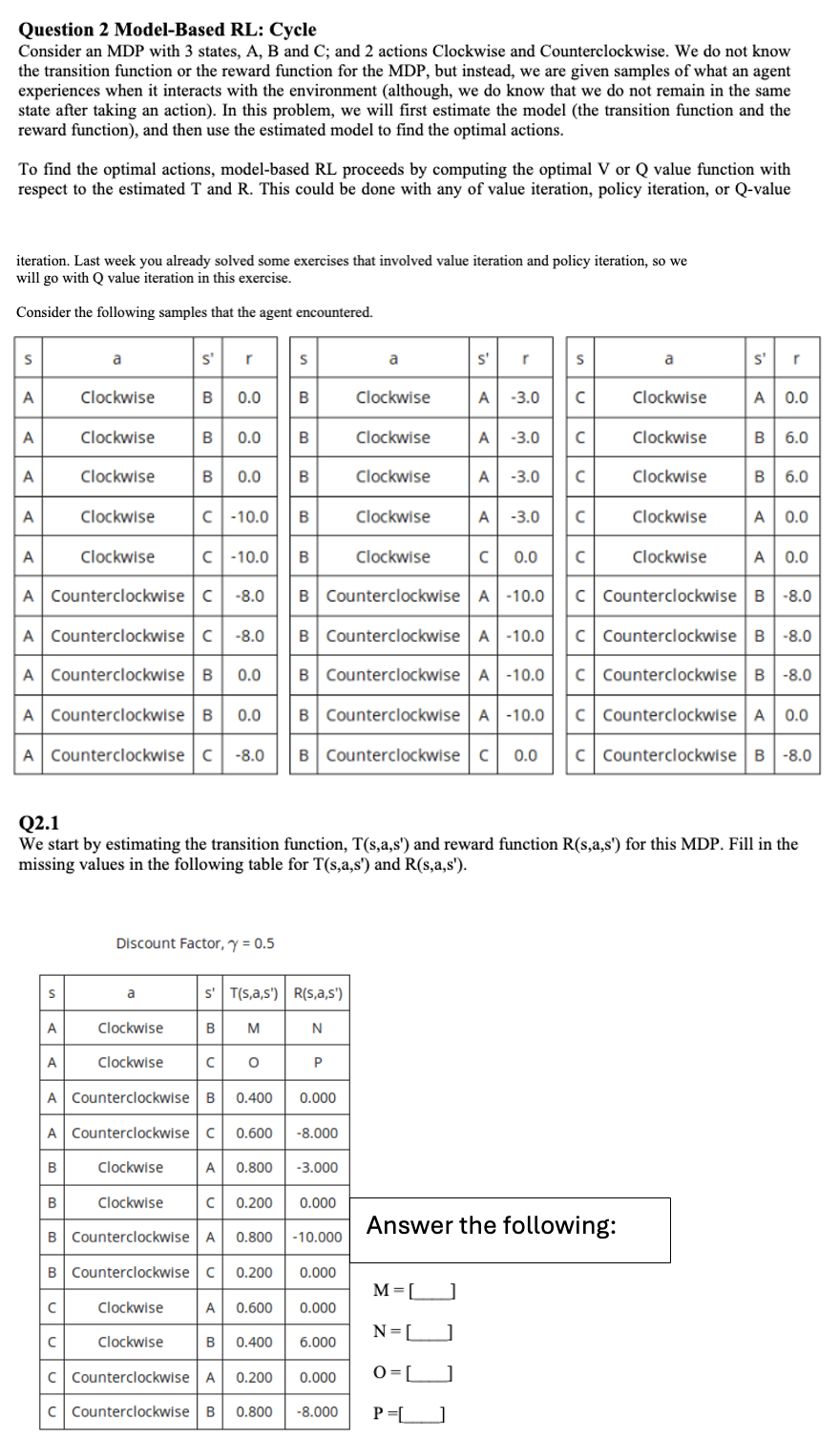

Consider an MDP with states, A B and C; and actions Clockwise and Counterclockwise. We do not know the transition function or the reward function for the MDP but instead, we are given samples of what an agent experiences when it interacts with the environment although we do know that we do not remain in the same state after taking an action In this problem, we will first estimate the model the transition function and the reward function and then use the estimated model to find the optimal actions.

To find the optimal actions, modelbased RL proceeds by computing the optimal V or Q value function with respect to the estimated T and R This could be done with any of value iteration, policy iteration, or Q value

iteration. Last week you already solved some exercises that involved value iteration and policy iteration, so we will go with Q value iteration in this exercise.

Consider the following samples that the agent encountered.

Q

We start by estimating the transition function, Tsaleftmathrmsprimeright and reward function mathrmRleftmathrmsmathrmamathrmsprimeright for this MDP Fill in the missing values in the following table for mathrmTleftmathrmsmathrmamathrmsprimeright and mathrmRleftmathrmsmathrmamathrmsprimeright

Discount Factor, gamma

Answer the following:

beginarrayl

mathrmM

mathrm~Nmid

mathrmOmid

mathrmPmid

endarray

Step by Step Solution

There are 3 Steps involved in it

1 Expert Approved Answer

Step: 1 Unlock

Question Has Been Solved by an Expert!

Get step-by-step solutions from verified subject matter experts

Step: 2 Unlock

Step: 3 Unlock