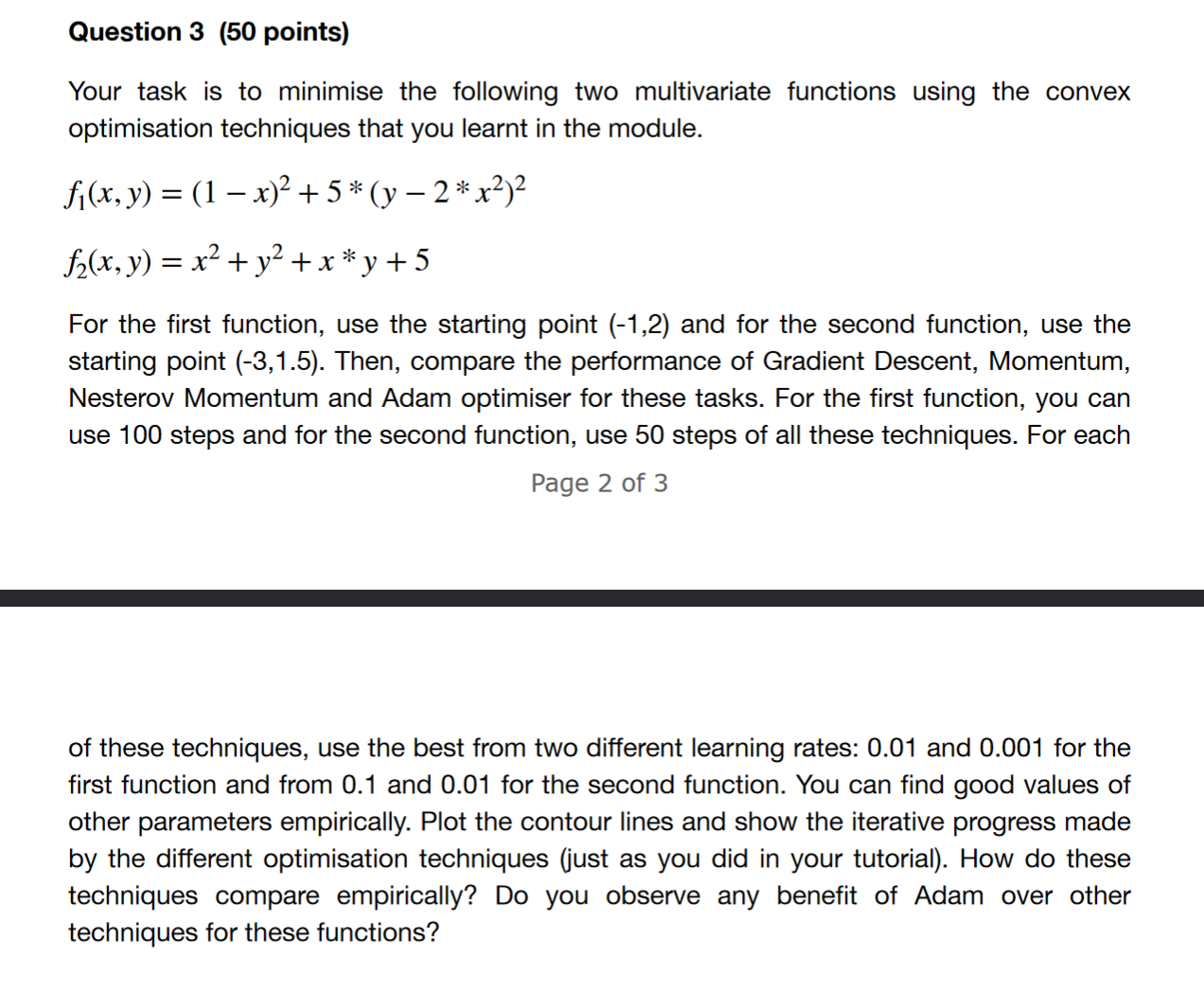

Question 3 (50 points)

Your task is to minimise the following two multivariate functions using the convex optimisation techniques that you learnt in the module. For the first function, use the starting point and for the second function, use the starting point. Then, compare the performance of Gradient Descent, Momentum, Nesterov Momentum and the Adam optimiser for these tasks. For the first function, you can use steps and for the second function, use steps of all these techniques. For each of these techniques, use the best from two different learning rates: and for the first function and from and for the second function. You can find good values of other parameters empirically. Plot the contour lines and show the iterative progress made by the different optimisation techniques, just as you did in your tutorial. How do these techniques compare empirically? Do you observe any benefit of Adam over other techniques for these functions?
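As a starting point for the comparison, here is a minimal sketch of the four update rules in NumPy. The objective `f`, its gradient `grad_f`, the starting point `start`, the step count `steps`, the learning rate `lr` and the momentum/Adam coefficients are all placeholder assumptions chosen for illustration, since the question's own functions and values are not reproduced in the text above. Substitute the real ones before drawing any conclusions.

```python
import numpy as np

# Hypothetical stand-in objective (NOT one of the question's functions):
# an ill-conditioned convex quadratic with its minimum at the origin.
def f(p):
    x, y = p
    return 0.5 * x**2 + 5.0 * y**2

def grad_f(p):
    x, y = p
    return np.array([x, 10.0 * y])

def gradient_descent(grad, p0, lr, steps):
    p = np.asarray(p0, dtype=float)
    path = [p.copy()]
    for _ in range(steps):
        p = p - lr * grad(p)
        path.append(p.copy())
    return np.array(path)

def momentum(grad, p0, lr, steps, beta=0.9):
    p = np.asarray(p0, dtype=float)
    v = np.zeros_like(p)
    path = [p.copy()]
    for _ in range(steps):
        v = beta * v - lr * grad(p)   # accumulate a velocity
        p = p + v
        path.append(p.copy())
    return np.array(path)

def nesterov(grad, p0, lr, steps, beta=0.9):
    p = np.asarray(p0, dtype=float)
    v = np.zeros_like(p)
    path = [p.copy()]
    for _ in range(steps):
        # gradient is evaluated at the look-ahead point p + beta * v
        v = beta * v - lr * grad(p + beta * v)
        p = p + v
        path.append(p.copy())
    return np.array(path)

def adam(grad, p0, lr, steps, beta1=0.9, beta2=0.999, eps=1e-8):
    p = np.asarray(p0, dtype=float)
    m = np.zeros_like(p)              # first-moment estimate
    s = np.zeros_like(p)              # second-moment estimate
    path = [p.copy()]
    for t in range(1, steps + 1):
        g = grad(p)
        m = beta1 * m + (1 - beta1) * g
        s = beta2 * s + (1 - beta2) * g**2
        m_hat = m / (1 - beta1**t)    # bias correction
        s_hat = s / (1 - beta2**t)
        p = p - lr * m_hat / (np.sqrt(s_hat) + eps)
        path.append(p.copy())
    return np.array(path)

# Placeholder settings (assumptions, not the values from the question).
start, steps, lr = (4.0, 2.0), 100, 0.05

paths = {
    "Gradient Descent": gradient_descent(grad_f, start, lr, steps),
    "Momentum": momentum(grad_f, start, lr, steps),
    "Nesterov Momentum": nesterov(grad_f, start, lr, steps),
    "Adam": adam(grad_f, start, lr, steps),
}

for name, path in paths.items():
    print(f"{name}: final point {path[-1]}, f = {f(path[-1]):.6f}")
```

Each function returns the full trajectory as an array of shape `(steps + 1, 2)`, so the paths can be overlaid on a contour plot of the objective (for example with Matplotlib's `contour` and `plot`) to show the iterative progress. Running the loop once per candidate learning rate and keeping the run with the lower final objective value is one simple way to pick "the best" of the two rates for each method.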
