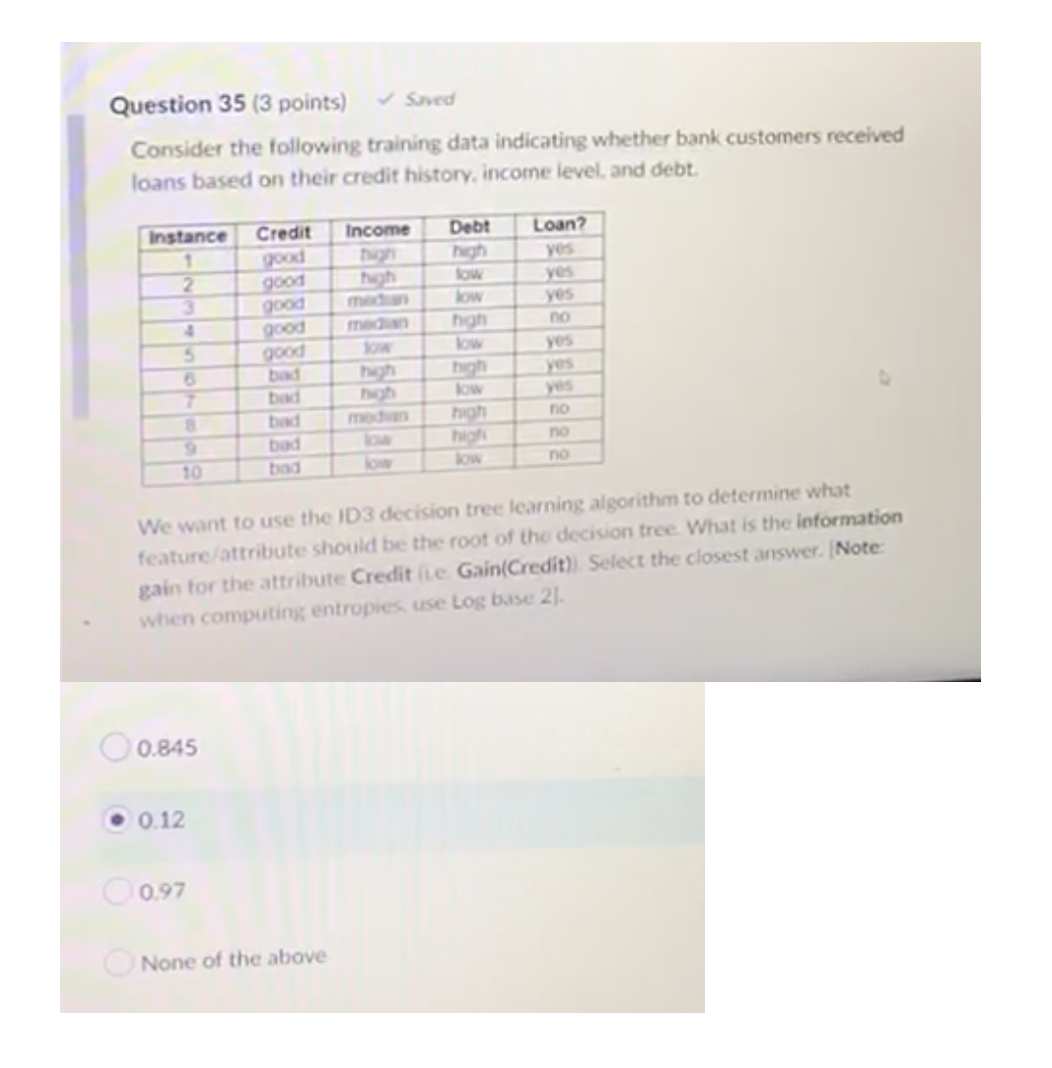

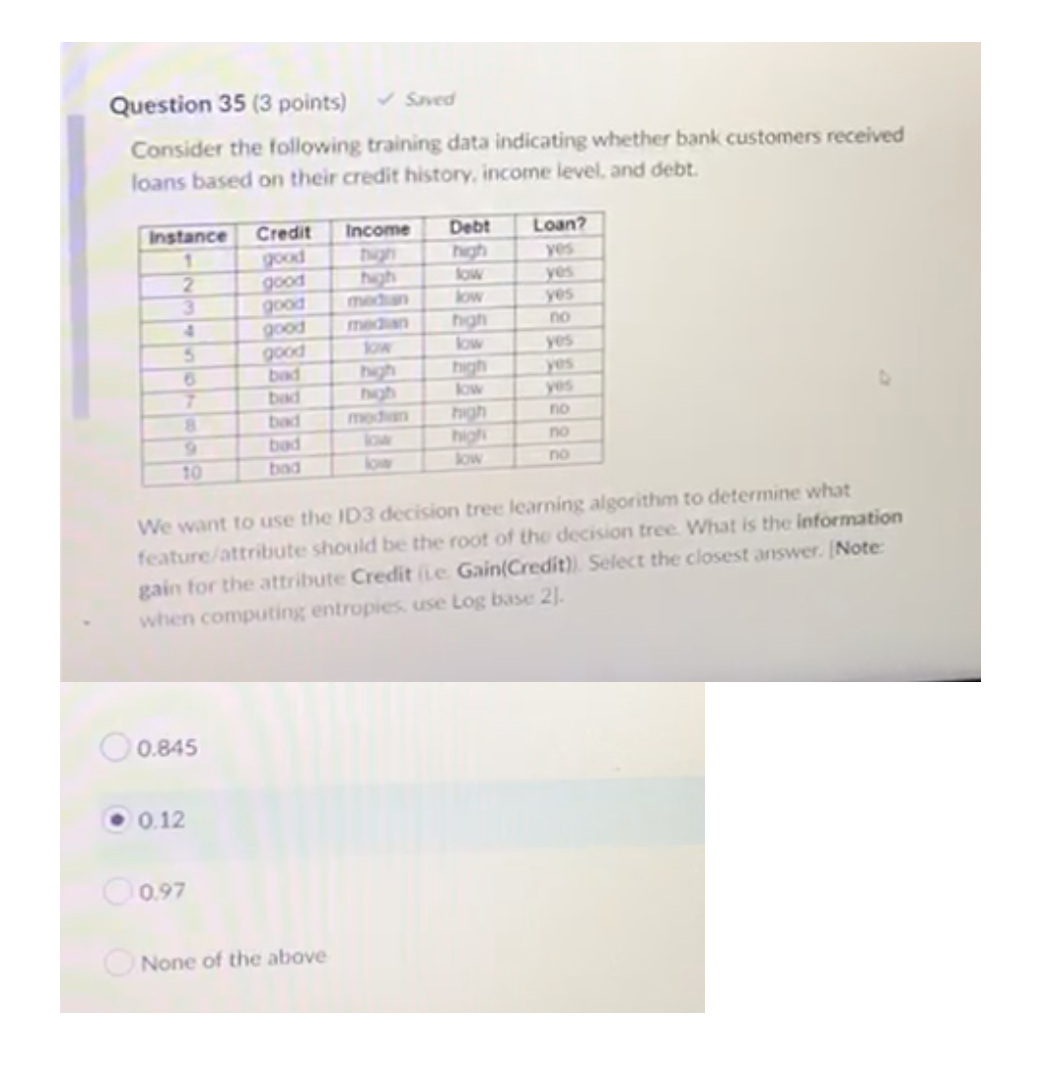

Question: Question 35 (3 points) Consider the following training data indicating whether bank customers received loans based on their credit history, income level, and debt.

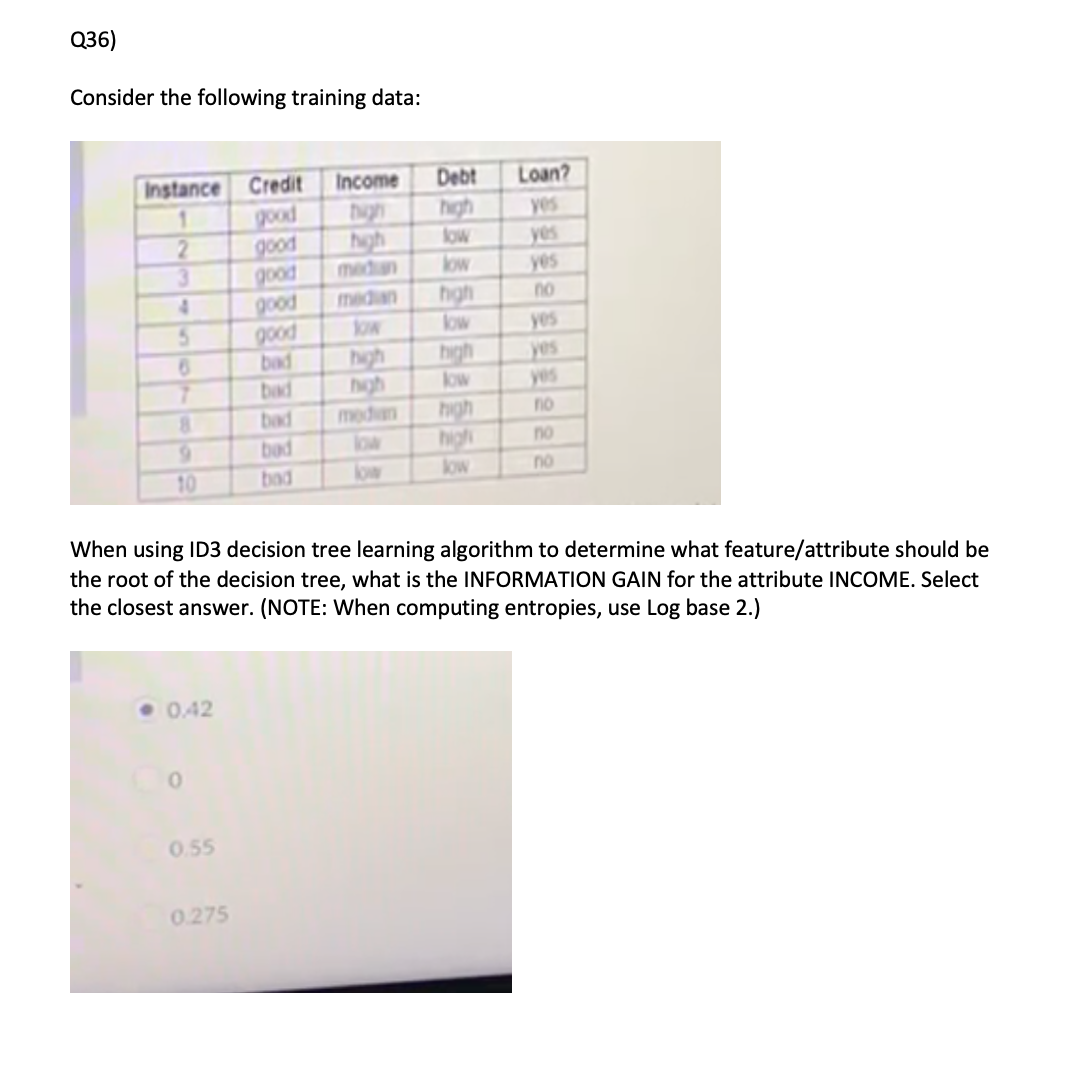

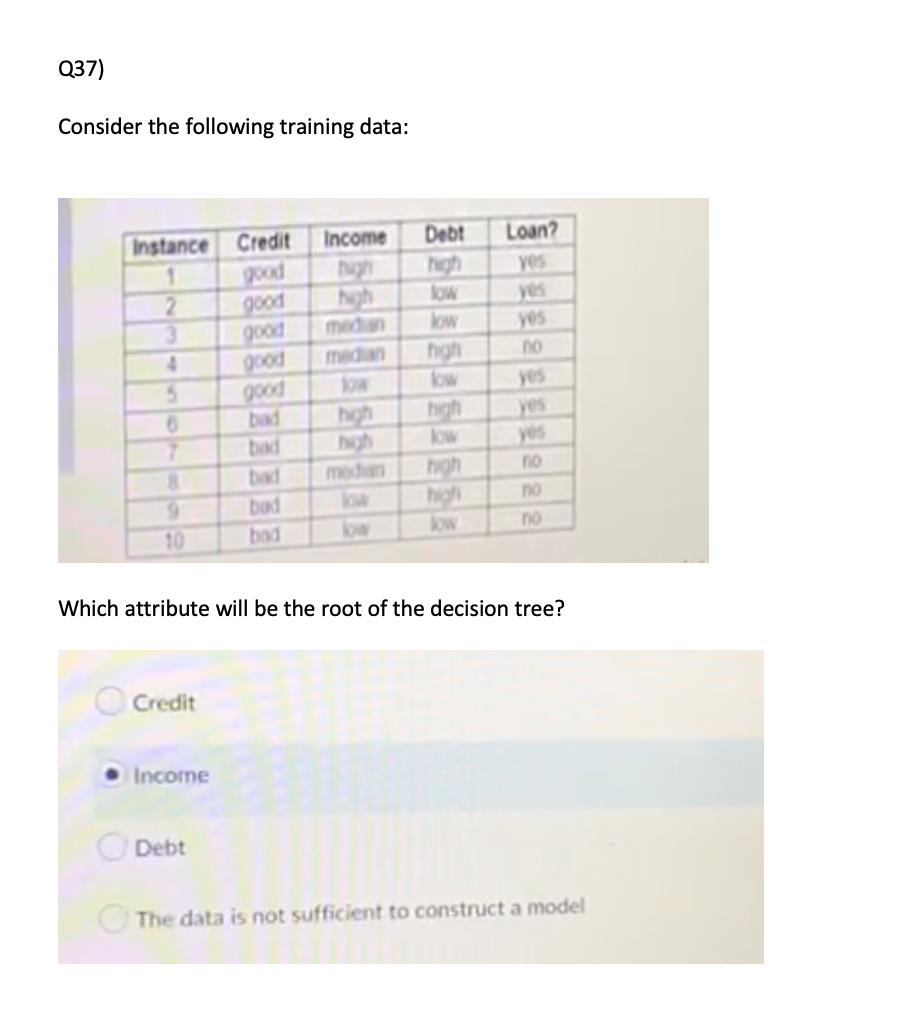

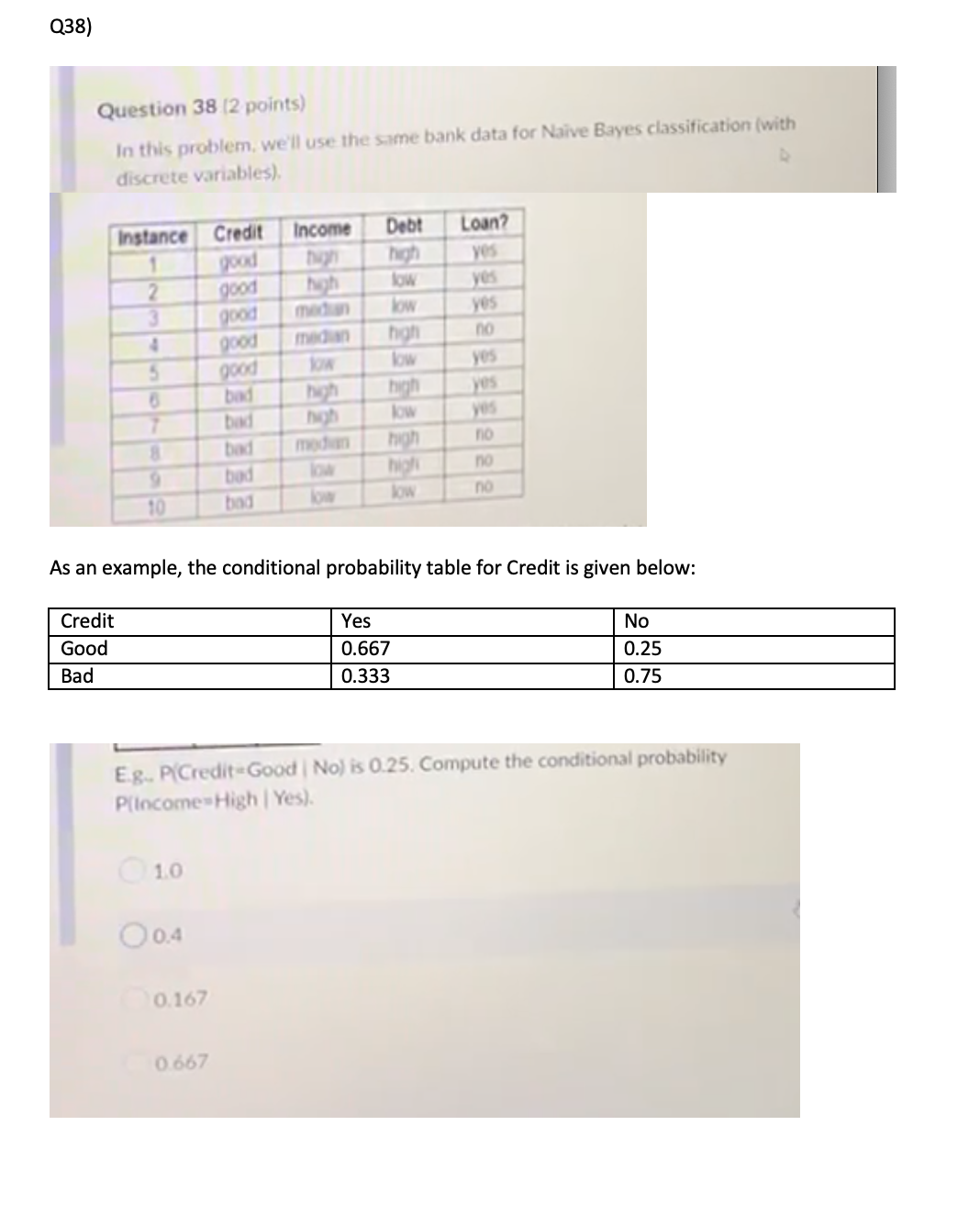

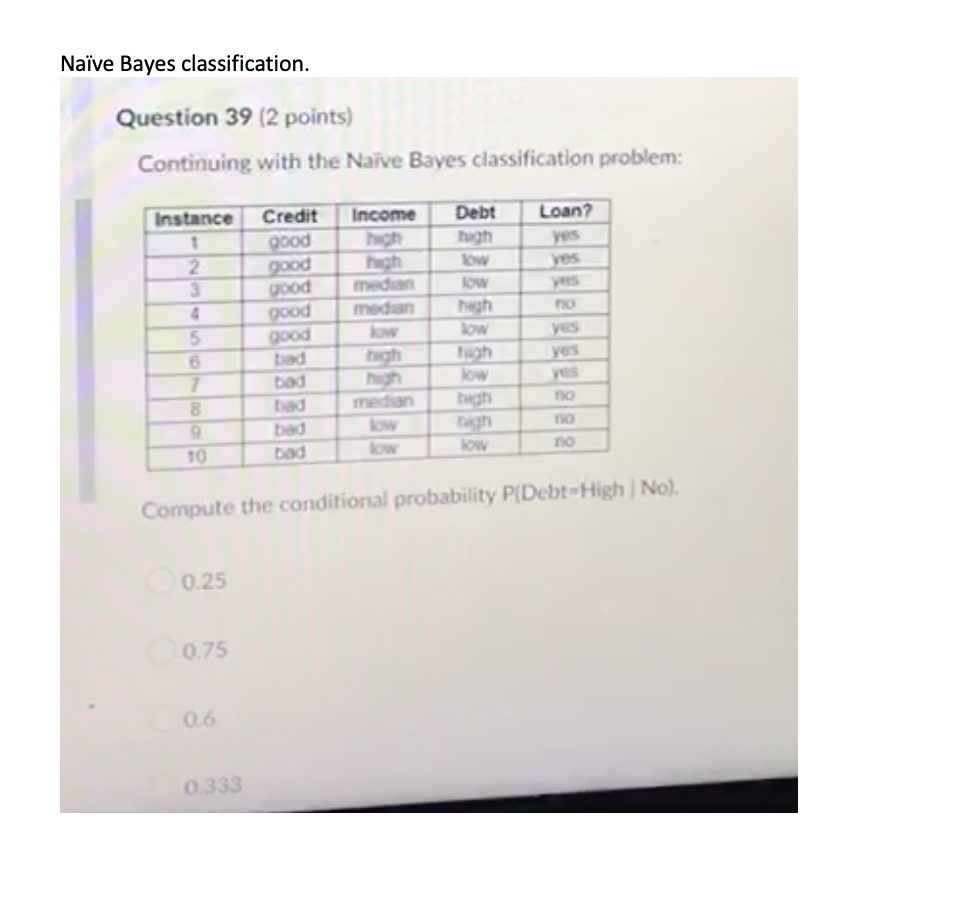

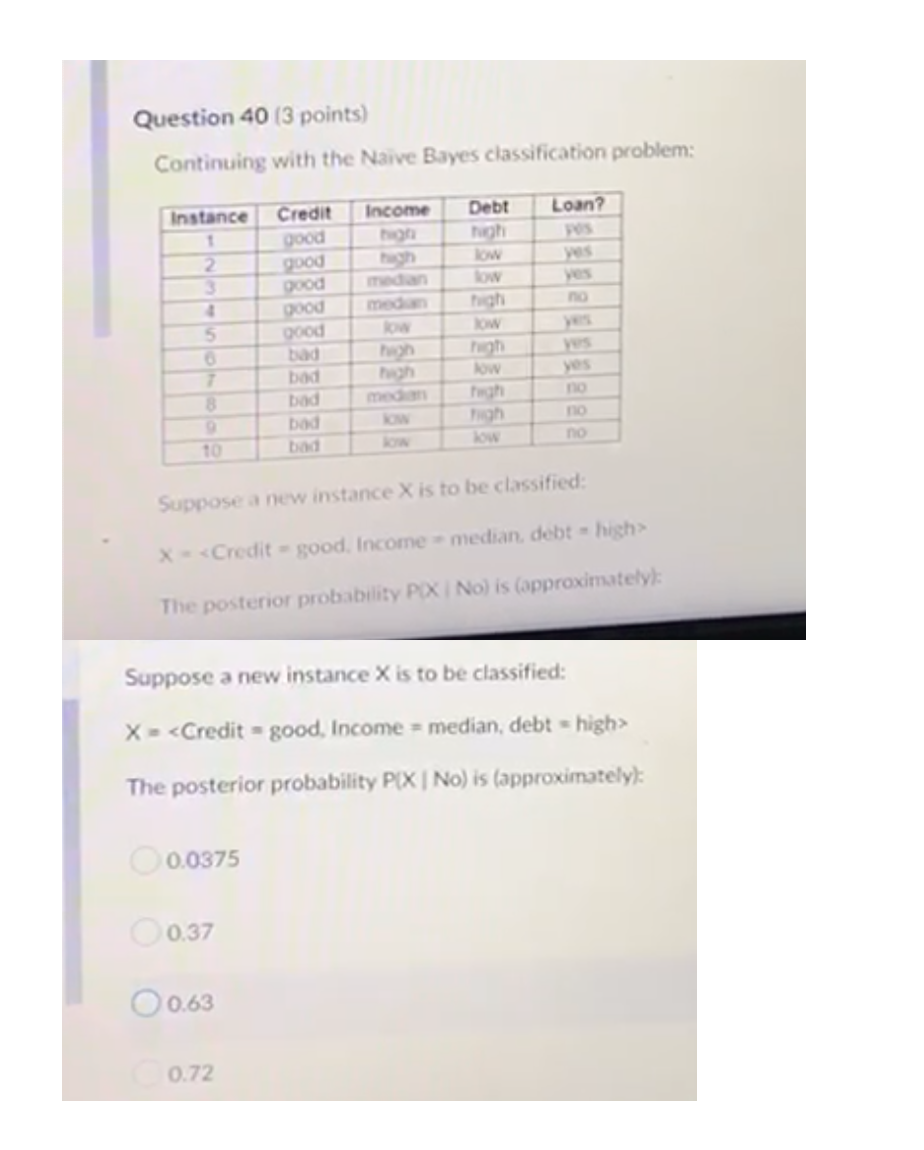

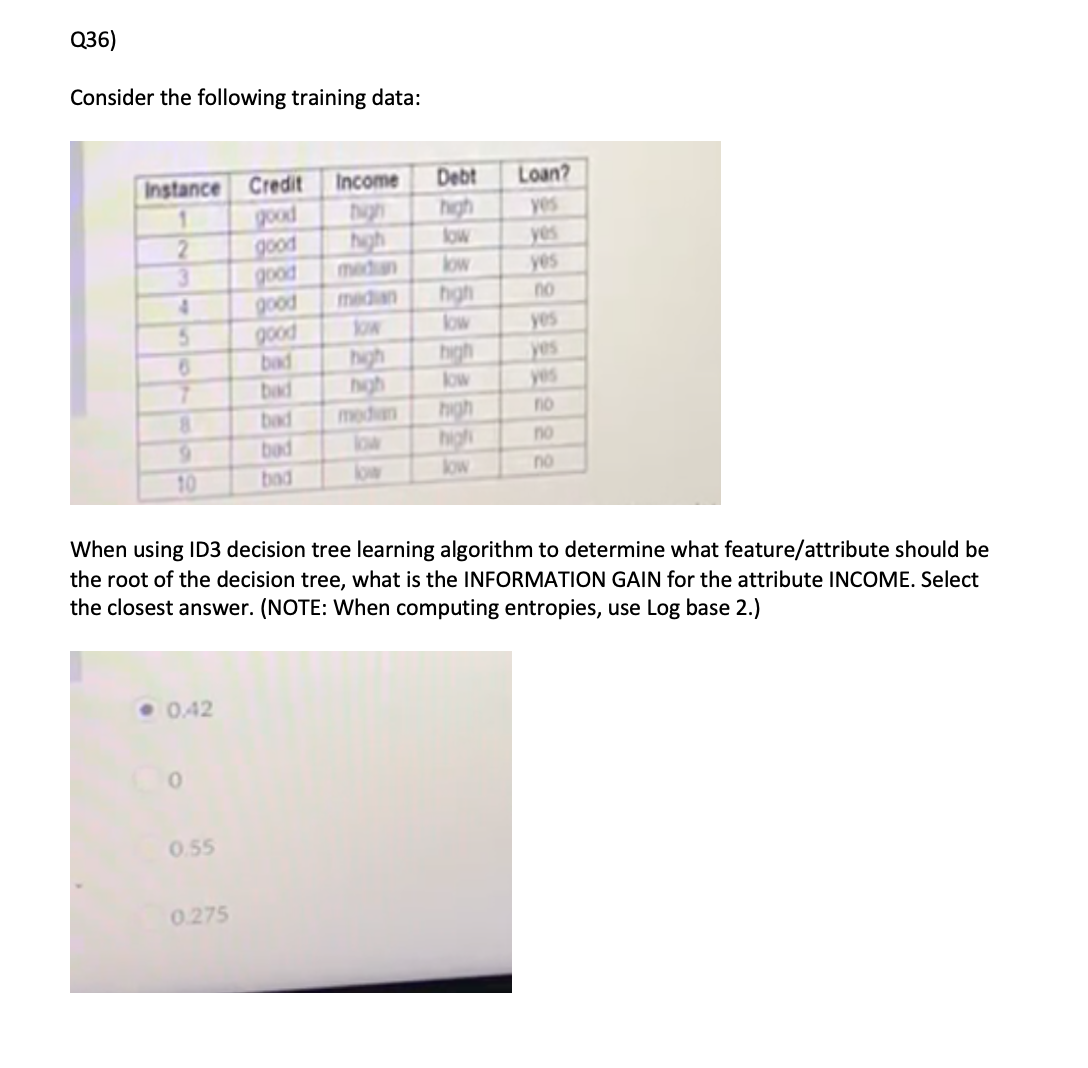

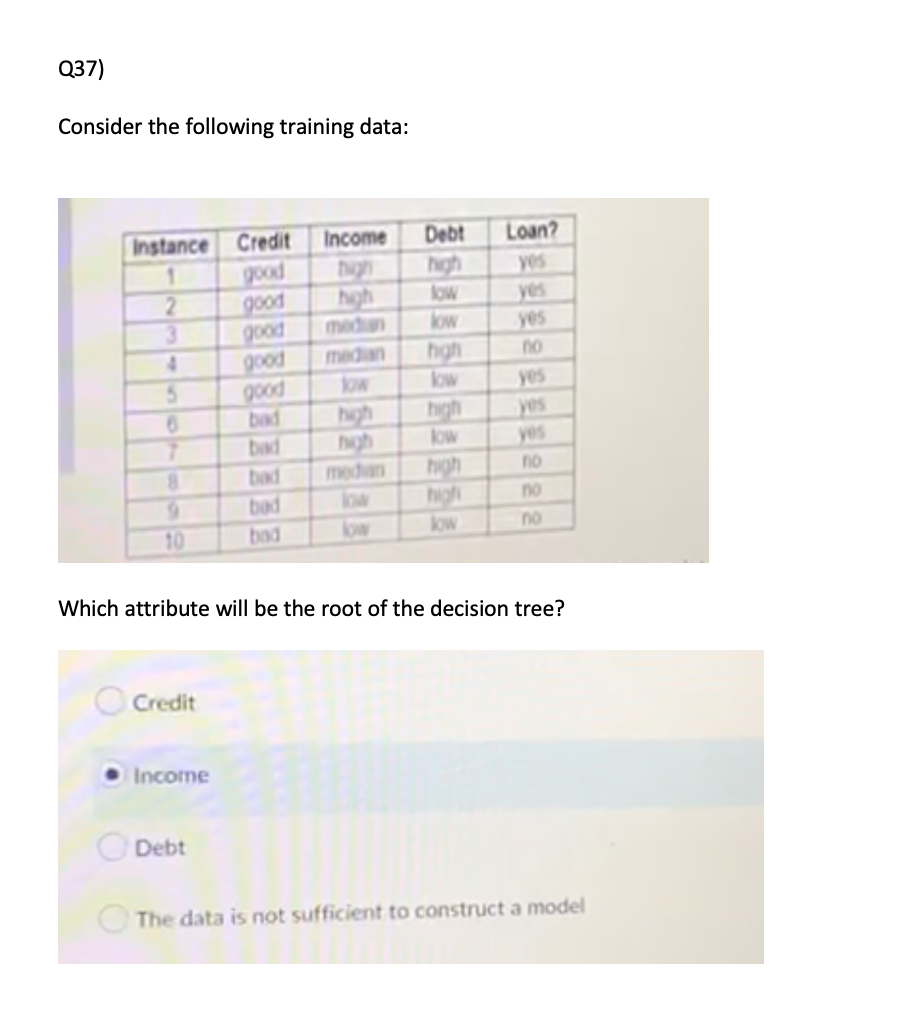

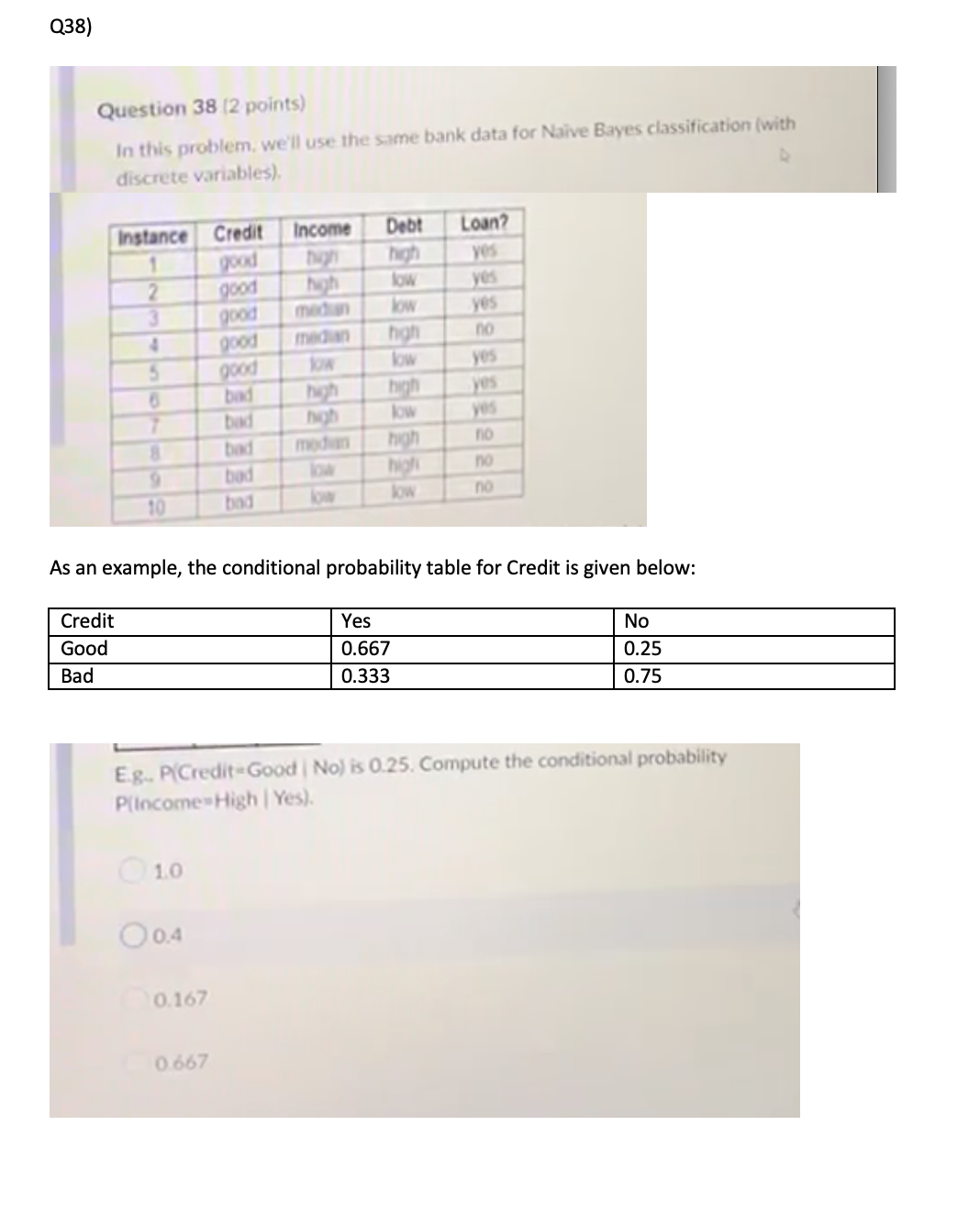

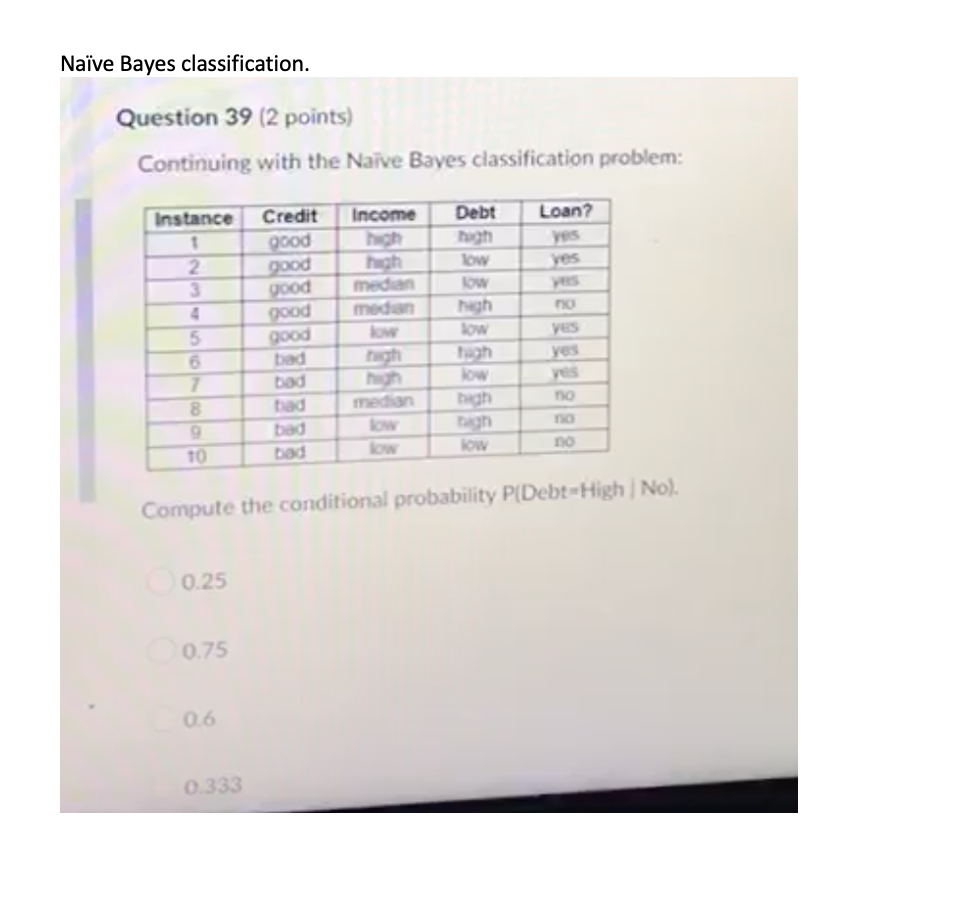

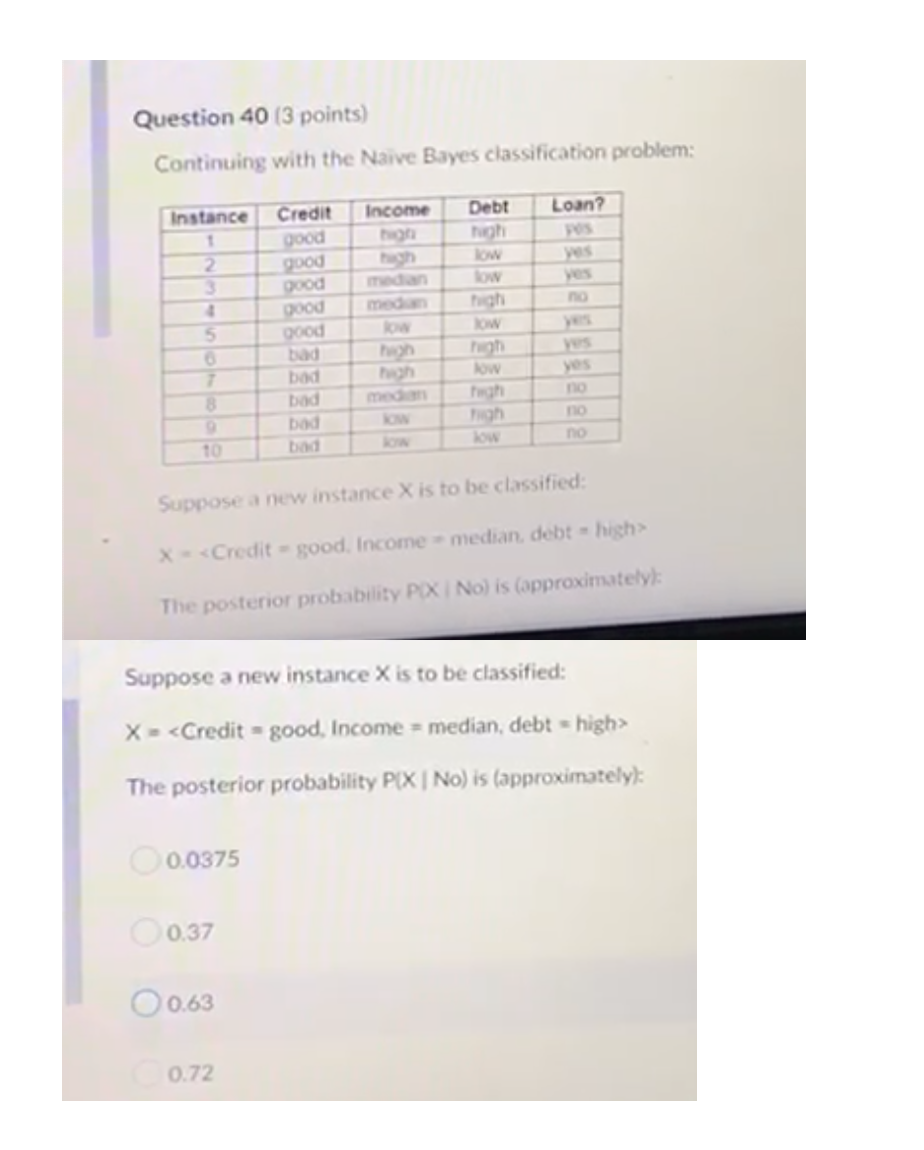

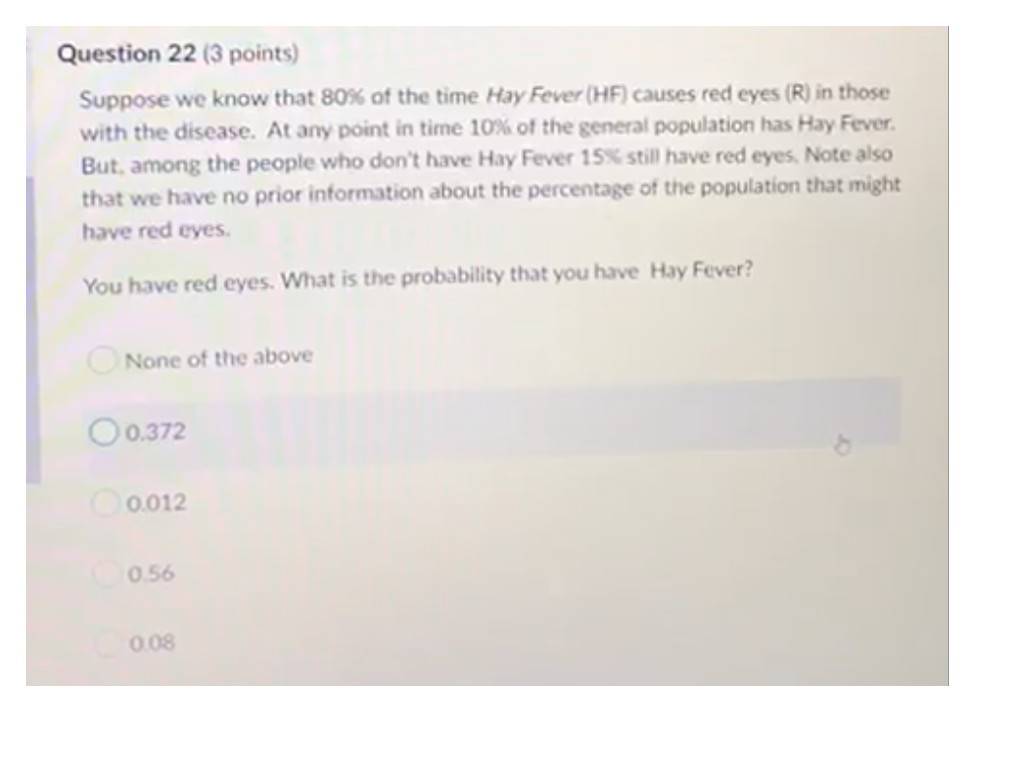

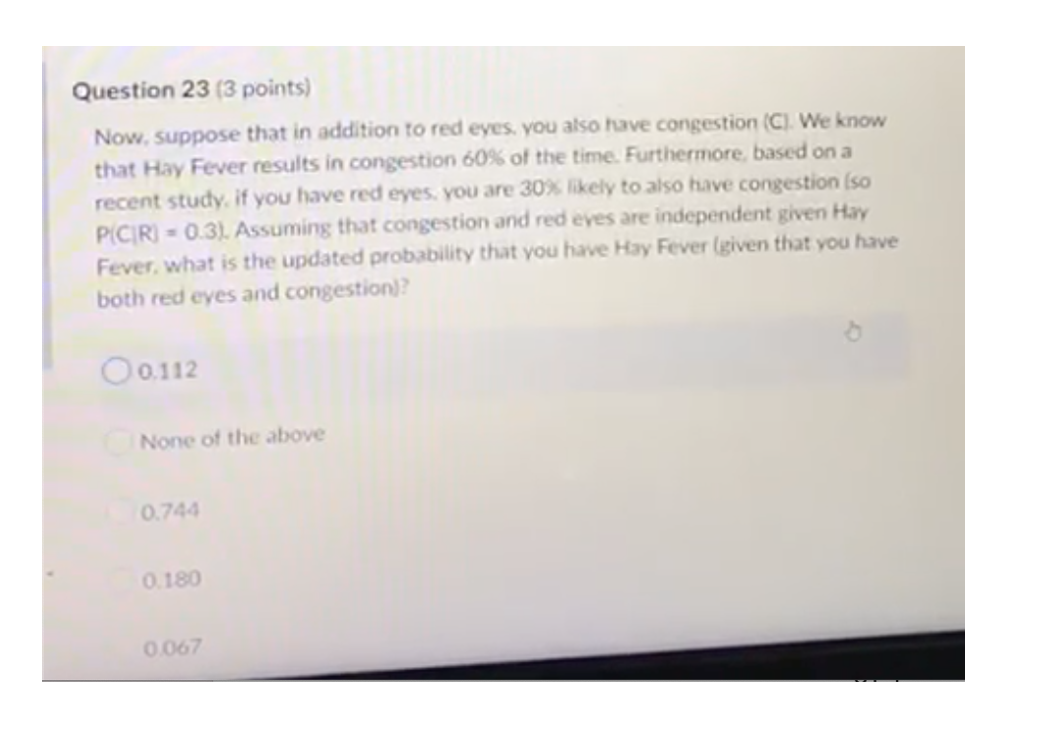

Question 35 (3 points)

Consider the following training data indicating whether bank customers received loans based on their credit history, income level, and debt.

| Instance | Credit | Income | Debt | Loan? |
|----------|--------|--------|------|-------|
| 1 | good | high | high | yes |
| 2 | good | high | low | yes |
| 3 | good | median | low | yes |
| 4 | good | median | high | no |
| 5 | good | low | low | yes |
| 6 | bad | high | high | yes |
| 7 | bad | high | low | yes |
| 8 | bad | median | high | no |
| 9 | bad | low | high | no |
| 10 | bad | low | low | no |

We want to use the ID3 decision tree learning algorithm to determine what feature/attribute should be the root of the decision tree. What is the information gain for the attribute Credit (i.e., Gain(Credit))? Select the closest answer. [Note: when computing entropies, use log base 2.]

- 0.845
- 0.12
- 0.97
- None of the above

Q36) Consider the following training data (the same table as in Question 35). When using the ID3 decision tree learning algorithm to determine what feature/attribute should be the root of the decision tree, what is the information gain for the attribute Income? Select the closest answer. (Note: when computing entropies, use log base 2.)

Q37) Consider the following training data (the same table as in Question 35). Which attribute will be the root of the decision tree?

- Credit
- Income
- Debt
- The data is not sufficient to construct a model

Question 38 (2 points)

In this problem, we'll use the same bank data for Naive Bayes classification (with discrete variables). As an example, the conditional probability table for Credit is given below:

| Credit | Yes | No |
|--------|-------|------|
| Good | 0.667 | 0.25 |
| Bad | 0.333 | 0.75 |

E.g., P(Credit=Good | No) is 0.25. Compute the conditional probability P(Income=High | Yes).

- 1.0
- 0.4
- 0.167
- 0.667
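For the ID3 questions (35 to 37), the information gain of an attribute is the entropy of the Loan? labels minus the weighted average entropy of the labels within each attribute value. The following is a minimal Python sketch of that calculation, not a full ID3 implementation. It assumes the ten training rows read as listed in `DATA` (the source table is partly garbled, so the rows are a reconstruction), and the names `DATA`, `ATTRS`, `entropy`, and `information_gain` are illustrative.

```python
from collections import Counter
from math import log2

# Assumed training data, reconstructed from the question's table.
# Columns: credit, income, debt, loan (the class label).
DATA = [
    ("good", "high", "high", "yes"),
    ("good", "high", "low", "yes"),
    ("good", "median", "low", "yes"),
    ("good", "median", "high", "no"),
    ("good", "low", "low", "yes"),
    ("bad", "high", "high", "yes"),
    ("bad", "high", "low", "yes"),
    ("bad", "median", "high", "no"),
    ("bad", "low", "high", "no"),
    ("bad", "low", "low", "no"),
]
ATTRS = {"Credit": 0, "Income": 1, "Debt": 2}


def entropy(labels):
    """Shannon entropy (log base 2) of a list of class labels."""
    n = len(labels)
    return -sum((c / n) * log2(c / n) for c in Counter(labels).values())


def information_gain(rows, attr_index):
    """Entropy of the labels minus the weighted entropy after splitting."""
    labels = [r[-1] for r in rows]
    remainder = 0.0
    for value in {r[attr_index] for r in rows}:
        subset = [r[-1] for r in rows if r[attr_index] == value]
        remainder += len(subset) / len(rows) * entropy(subset)
    return entropy(labels) - remainder


gains = {name: information_gain(DATA, i) for name, i in ATTRS.items()}
for name, gain in gains.items():
    print(f"Gain({name}) = {gain:.3f}")

# ID3 picks the attribute with the largest gain as the root.
root = max(gains, key=gains.get)
print("Root attribute:", root)
```

Under the assumed table, this gives Gain(Credit) ≈ 0.125, Gain(Income) ≈ 0.420, and Gain(Debt) ≈ 0.125, so Income would be chosen as the root.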
Question 39 (2 points)

Continuing with the Naive Bayes classification problem:

| Instance | Credit | Income | Debt | Loan? |
|----------|--------|--------|------|-------|
| 1 | good | high | high | yes |
| 2 | good | high | low | yes |
| 3 | good | median | low | yes |
| 4 | good | median | high | no |
| 5 | good | low | low | yes |
| 6 | bad | high | high | yes |
| 7 | bad | high | low | yes |
| 8 | bad | median | high | no |
| 9 | bad | low | high | no |
| 10 | bad | low | low | no |

Compute the conditional probability P(Debt=High | No).

- 0.25
- 0.75
- 0.6
- 0.333

Question 40 (3 points)

Continuing with the Naive Bayes classification problem (same training data as in Question 39): Suppose a new instance X is to be classified: X = (attribute values missing from the source). The posterior probability P(X | No) is (approximately):
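The Naive Bayes conditional probabilities asked for above are class-conditional relative frequencies: count the rows of a class that have the given attribute value and divide by the number of rows in that class. The sketch below assumes the ten training rows read as listed in `DATA` (a reconstruction of the partly garbled table) and uses plain frequency estimates without smoothing, which matches the example value P(Credit=Good | No) = 0.25 given in the question. The helper names and the instance `x_example` are hypothetical; the actual instance X from Question 40 is not recoverable from the source.

```python
# Assumed training data, reconstructed from the question's table.
# Columns: credit, income, debt, loan (the class label).
DATA = [
    ("good", "high", "high", "yes"),
    ("good", "high", "low", "yes"),
    ("good", "median", "low", "yes"),
    ("good", "median", "high", "no"),
    ("good", "low", "low", "yes"),
    ("bad", "high", "high", "yes"),
    ("bad", "high", "low", "yes"),
    ("bad", "median", "high", "no"),
    ("bad", "low", "high", "no"),
    ("bad", "low", "low", "no"),
]
COLUMNS = ("Credit", "Income", "Debt")


def conditional_probability(rows, attr, value, label):
    """Estimate P(attr=value | Loan=label) by relative frequency (no smoothing)."""
    idx = COLUMNS.index(attr)
    in_class = [r for r in rows if r[-1] == label]
    matches = sum(1 for r in in_class if r[idx] == value)
    return matches / len(in_class)


def likelihood(rows, instance, label):
    """Naive Bayes P(X | label): product of per-attribute conditionals."""
    p = 1.0
    for attr, value in instance.items():
        p *= conditional_probability(rows, attr, value, label)
    return p


p_credit_good_no = conditional_probability(DATA, "Credit", "good", "no")
p_income_high_yes = conditional_probability(DATA, "Income", "high", "yes")
p_debt_high_no = conditional_probability(DATA, "Debt", "high", "no")
print(f"P(Credit=Good | No)  = {p_credit_good_no:.3f}")
print(f"P(Income=High | Yes) = {p_income_high_yes:.3f}")
print(f"P(Debt=High | No)    = {p_debt_high_no:.3f}")

# Hypothetical instance, for illustration only (not the X from Question 40).
x_example = {"Credit": "good", "Income": "median", "Debt": "low"}
print(f"P(x_example | No) = {likelihood(DATA, x_example, 'no'):.5f}")
```

Under the assumed table, P(Income=High | Yes) = 4/6 ≈ 0.667 and P(Debt=High | No) = 3/4 = 0.75. Once the real attribute values of X are known, passing them to `likelihood` in place of `x_example` gives P(X | No) in the same way.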
