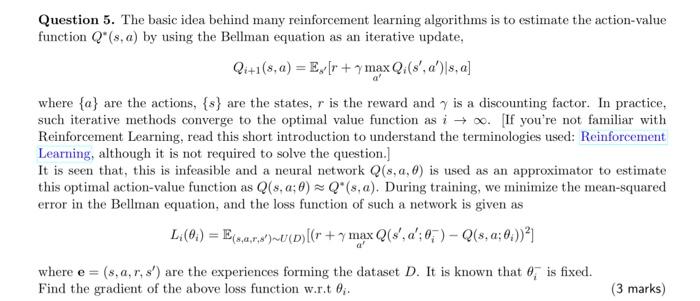

Question 5. The basic idea behind many reinforcement learning algorithms is to estimate the action-value function $Q(s,a)$ by using the Bellman equation as an iterative update,

$$Q_{i+1}(s,a) = \mathbb{E}_{s'}\left[\, r + \gamma \max_{a'} Q_i(s',a') \;\middle|\; s, a \right],$$

where $\{a\}$ are the actions, $\{s\}$ are the states, $r$ is the reward and $\gamma$ is a discount factor. Such iterative methods converge to the optimal action-value function, $Q_i \to Q^*$, as $i \to \infty$. [If you're not familiar with reinforcement learning, read this short introduction to understand the terminology used: Reinforcement Learning. It is not required to solve the question.]

In practice, this is infeasible, so a neural network $Q(s,a;\theta)$ is used as a function approximator to estimate the optimal action-value function, $Q(s,a;\theta) \approx Q^*(s,a)$. During training, we minimize the mean-squared error in the Bellman equation, and the loss function of such a network is

$$L_i(\theta_i) = \mathbb{E}_{(s,a,r,s') \sim U(D)}\left[\left( r + \gamma \max_{a'} Q(s',a';\theta_i^-) - Q(s,a;\theta_i) \right)^2\right],$$

where the experiences $e = (s,a,r,s')$ form the dataset $D$ and $U(D)$ denotes sampling uniformly from $D$. The parameters $\theta_i^-$ are held fixed. Find the gradient of the above loss function w.r.t
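
Assuming the truncated last sentence asks for the gradient with respect to the online parameters $\theta_i$ (the only argument of $L_i$), the key observation is that, because $\theta_i^-$ is fixed, the target $y_i = r + \gamma \max_{a'} Q(s',a';\theta_i^-)$ is a constant with respect to $\theta_i$. Since the sampling distribution $U(D)$ also does not depend on $\theta_i$, the gradient can be moved inside the expectation, and the chain rule gives $\nabla_{\theta_i}(y_i - Q)^2 = 2(y_i - Q)\,(-\nabla_{\theta_i} Q)$, so

$$\nabla_{\theta_i} L_i(\theta_i) = -2\,\mathbb{E}_{(s,a,r,s') \sim U(D)}\Big[\big( r + \gamma \max_{a'} Q(s',a';\theta_i^-) - Q(s,a;\theta_i) \big)\, \nabla_{\theta_i} Q(s,a;\theta_i) \Big].$$

The constant factor $-2$ is often dropped or absorbed into the learning rate. As a sanity check, the sketch below assumes a hypothetical linear Q-function $Q(s,a;\theta) = \theta_a^\top s$ (the question does not specify the network), estimates the expectation with a random minibatch, evaluates the formula above, and compares it with central finite differences that perturb only $\theta$ while keeping $\theta^-$ fixed. All function and variable names are illustrative.

```python
import numpy as np


def q_values(theta, states):
    # Hypothetical linear Q-function: Q(s, a; theta) = theta[a] . s
    # theta: (n_actions, d), states: (batch, d) -> returns (batch, n_actions)
    return states @ theta.T


def td_target(theta_target, r, s_next, gamma):
    # y = r + gamma * max_a' Q(s', a'; theta^-), computed with the frozen parameters
    return r + gamma * q_values(theta_target, s_next).max(axis=1)


def dqn_loss(theta, theta_target, s, a, r, s_next, gamma):
    # Minibatch estimate of L_i(theta_i): mean squared TD error
    y = td_target(theta_target, r, s_next, gamma)
    q_sa = q_values(theta, s)[np.arange(len(a)), a]
    return np.mean((y - q_sa) ** 2)


def dqn_grad(theta, theta_target, s, a, r, s_next, gamma):
    # Analytic gradient: -2 * mean[(y - Q(s, a; theta)) * grad_theta Q(s, a; theta)]
    y = td_target(theta_target, r, s_next, gamma)
    q_sa = q_values(theta, s)[np.arange(len(a)), a]
    td_error = y - q_sa
    grad = np.zeros_like(theta)
    # For the linear model, grad_theta Q(s, a; theta) is s in row a and zero elsewhere
    for k in range(len(a)):
        grad[a[k]] += -2.0 * td_error[k] * s[k]
    return grad / len(a)


def numerical_grad(theta, theta_target, s, a, r, s_next, gamma, eps=1e-6):
    # Central finite differences in theta only; theta_target is never perturbed
    grad = np.zeros_like(theta)
    for idx in np.ndindex(*theta.shape):
        step = np.zeros_like(theta)
        step[idx] = eps
        plus = dqn_loss(theta + step, theta_target, s, a, r, s_next, gamma)
        minus = dqn_loss(theta - step, theta_target, s, a, r, s_next, gamma)
        grad[idx] = (plus - minus) / (2 * eps)
    return grad


# Toy minibatch standing in for samples drawn uniformly from a replay dataset D
rng = np.random.default_rng(0)
n_actions, d, batch = 3, 4, 8
theta = rng.normal(size=(n_actions, d))
theta_target = theta + 0.1 * rng.normal(size=theta.shape)  # frozen theta_i^-
s = rng.normal(size=(batch, d))
a = rng.integers(0, n_actions, size=batch)
r = rng.normal(size=batch)
s_next = rng.normal(size=(batch, d))
gamma = 0.99

analytic = dqn_grad(theta, theta_target, s, a, r, s_next, gamma)
numeric = numerical_grad(theta, theta_target, s, a, r, s_next, gamma)
print("max abs difference:", np.max(np.abs(analytic - numeric)))
```

Because only $\theta$ is perturbed in the finite-difference check, it mirrors the assumption that $\theta_i^-$ is held fixed. If the target were instead computed with the same parameters and differentiated through, an additional term from $\gamma \max_{a'} Q(s',a';\theta)$ would appear, and the expression above would no longer be the full gradient.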