Question 7.0 {points: 1}


Next, we'll use cross-validation on our training data to choose k. In k-nn classification, we used accuracy to see how well our predictions matched the true labels.

In the context of k-nn regression, we will use RMSPE (root mean squared prediction error) as the score instead. Interpreting the RMSPE value can be tricky, but generally speaking, if the predicted values are very close to the true values, the RMSPE will be small. Conversely, if the predicted values are not very close to the true values, the RMSPE will be quite large.
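To make "small" versus "large" concrete, here is a minimal sketch of RMSPE, the square root of the mean squared difference between true and predicted values. The helper name `rmspe` and the numbers are hypothetical, chosen only for illustration.

```python
import numpy as np


def rmspe(y_true, y_pred):
    """Hypothetical helper: sqrt(mean((y - y_hat)^2))."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))


# Hypothetical true and predicted values, for illustration only
true_vals = [120.0, 150.0, 180.0]
close_preds = [121.0, 149.0, 181.0]  # each off by 1
far_preds = [100.0, 170.0, 200.0]    # each off by 20

print(rmspe(true_vals, close_preds))  # 1.0
print(rmspe(true_vals, far_preds))    # 20.0
```

Because the errors are squared before averaging, a few large misses raise RMSPE more than many small ones, and the result is in the same units as the response variable.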

Let's perform cross-validation and choose the optimal k.

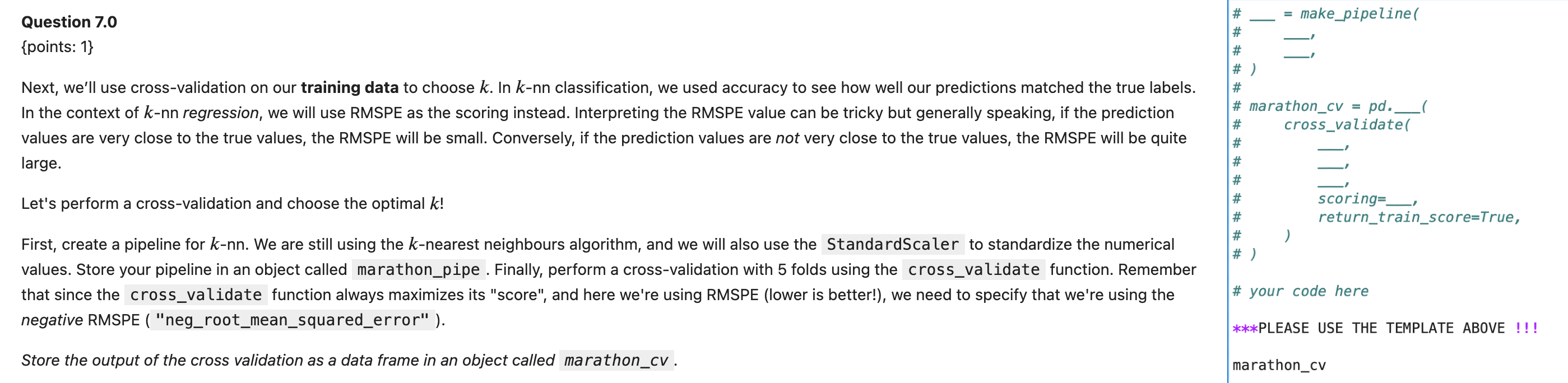

First, create a pipeline for k-nn. We are still using the k-nearest neighbours algorithm, and we will also use the StandardScaler to standardize the numerical values. Store your pipeline in an object called marathon_pipe. Finally, perform cross-validation with … folds using the cross_validate function. Remember that since the cross_validate function always maximizes its "score", and here we're using RMSPE (lower is better!), we need to specify that we're using the negative RMSPE ("neg_root_mean_squared_error").
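One way to set this up with scikit-learn is sketched below. The marathon data itself is not shown here, so the code builds a small synthetic stand-in (`X_train`, `y_train`, and the column name `predictor` are hypothetical), and `cv=5` is an assumed fold count, since the original number is missing.

```python
import numpy as np
import pandas as pd
from sklearn.model_selection import cross_validate
from sklearn.neighbors import KNeighborsRegressor
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Hypothetical stand-in for the marathon training data
rng = np.random.default_rng(0)
X_train = pd.DataFrame({"predictor": rng.uniform(0, 100, size=100)})
y_train = 5 - 0.02 * X_train["predictor"] + rng.normal(0, 0.3, size=100)

# Standardize the numerical predictor, then apply k-nn regression
marathon_pipe = make_pipeline(StandardScaler(), KNeighborsRegressor())

# cross_validate maximizes its score, so request the *negative* RMSPE
marathon_cv = pd.DataFrame(
    cross_validate(
        marathon_pipe,
        X_train,
        y_train,
        cv=5,  # assumed number of folds
        scoring="neg_root_mean_squared_error",
    )
)

# Flip the sign to recover per-fold RMSPE (lower is better)
print(-marathon_cv["test_score"])
```

Putting the StandardScaler inside the pipeline means it is refit on each training fold only, so the validation fold never leaks into the scaling step.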

marathon_cv
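To actually choose k, one approach (an assumption, not necessarily the assignment's intended method) is to repeat the cross-validation for a range of k values and keep the k with the smallest mean RMSPE. The synthetic data, the candidate range 1 to 20, and `cv=5` are all hypothetical choices for this sketch.

```python
import numpy as np
import pandas as pd
from sklearn.model_selection import cross_validate
from sklearn.neighbors import KNeighborsRegressor
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Hypothetical training data standing in for the marathon set
rng = np.random.default_rng(0)
X_train = pd.DataFrame({"predictor": rng.uniform(0, 100, size=100)})
y_train = 5 - 0.02 * X_train["predictor"] + rng.normal(0, 0.3, size=100)

rows = []
for k in range(1, 21):  # assumed candidate range of k values
    pipe = make_pipeline(StandardScaler(), KNeighborsRegressor(n_neighbors=k))
    cv = cross_validate(pipe, X_train, y_train, cv=5,
                        scoring="neg_root_mean_squared_error")
    # Negate the scores to get RMSPE back, then average across folds
    rows.append({"k": k, "mean_rmspe": -cv["test_score"].mean()})

results = pd.DataFrame(rows)
# Lowest mean RMSPE wins
best_k = int(results.loc[results["mean_rmspe"].idxmin(), "k"])
print(results.head())
print("optimal k:", best_k)
```

scikit-learn's GridSearchCV can run the same search over `kneighborsregressor__n_neighbors` with the same scoring string; the explicit loop is used here only to keep each step visible.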
