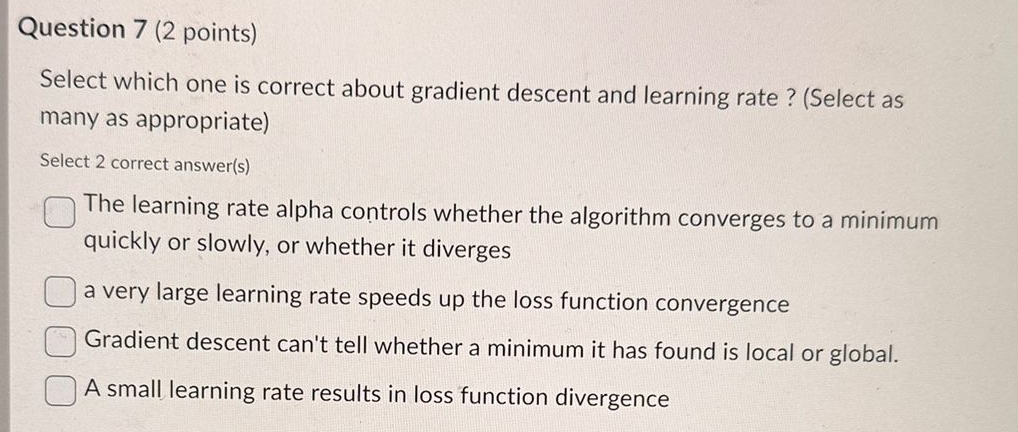

Question 7 (2 points): Select which of the following statements about gradient descent and the learning rate are correct. (Select as many as appropriate.)


The learning rate α controls whether the algorithm converges to a minimum quickly or slowly, or whether it diverges.

A very large learning rate speeds up convergence of the loss function.

Gradient descent can't tell whether a minimum it has found is local or global.

A small learning rate causes the loss function to diverge.
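To see how α governs convergence speed and divergence, here is a minimal sketch assuming a toy one-dimensional loss f(x) = x² (not part of the original question), whose gradient is 2x. The update x ← x − α·2x equals x ← (1 − 2α)·x, so each step multiplies x by (1 − 2α): the iterates shrink toward the minimum at 0 only when 0 < α < 1, slowly when α is tiny, and they grow without bound once α > 1. The helper names `gradient_descent` and `grad_square` are hypothetical.

```python
def gradient_descent(grad, x0, alpha, steps):
    """Plain gradient descent: repeat x <- x - alpha * grad(x), returning every iterate."""
    xs = [x0]
    for _ in range(steps):
        xs.append(xs[-1] - alpha * grad(xs[-1]))
    return xs


def grad_square(x):
    """Gradient of the toy loss f(x) = x**2 (assumed for illustration); its minimum is x = 0."""
    return 2.0 * x


# Each step multiplies x by (1 - 2*alpha), so |x| shrinks only when 0 < alpha < 1.
for alpha in (0.01, 0.1, 0.45, 1.1):
    xs = gradient_descent(grad_square, x0=1.0, alpha=alpha, steps=20)
    print(f"alpha={alpha:<4}  |x| after 20 steps = {abs(xs[-1]):.2e}")
```

After 20 steps, α = 0.01 still leaves |x| around 0.67 (slow but convergent, not divergent), while α = 1.1 overshoots on every step and |x| grows to roughly 38 (divergent rather than faster).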
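The point about local versus global minima can be illustrated with a hypothetical non-convex loss, `f(x) = x**4 - 3*x**2 + x`, chosen only because it has two minima: a global one near x ≈ −1.30 and a local one near x ≈ 1.13. In this sketch, plain gradient descent with an assumed α = 0.01 is started from two different points. Both runs stop where the gradient is essentially zero, and nothing in the update rule distinguishes the two stopping points; only comparing f across runs reveals which minimum is lower.

```python
def f(x):
    """Hypothetical non-convex loss with one local and one global minimum."""
    return x**4 - 3 * x**2 + x


def grad_f(x):
    """Derivative of f."""
    return 4 * x**3 - 6 * x + 1


def gradient_descent(grad, x0, alpha=0.01, steps=1000):
    """Plain gradient descent; it only follows the local slope from x0."""
    x = x0
    for _ in range(steps):
        x -= alpha * grad(x)
    return x


for x0 in (2.0, -2.0):
    x_star = gradient_descent(grad_f, x0)
    # Both runs end with a near-zero gradient, whether the minimum is local or global.
    print(f"start={x0:+.1f} -> x*={x_star:+.4f}, f(x*)={f(x_star):+.4f}, grad={grad_f(x_star):+.1e}")
```

Starting from +2.0 the run settles in the local minimum (f ≈ −1.07), and starting from −2.0 it settles in the global one (f ≈ −3.51); which one is reached depends on the starting point, not on any check performed by the algorithm.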
