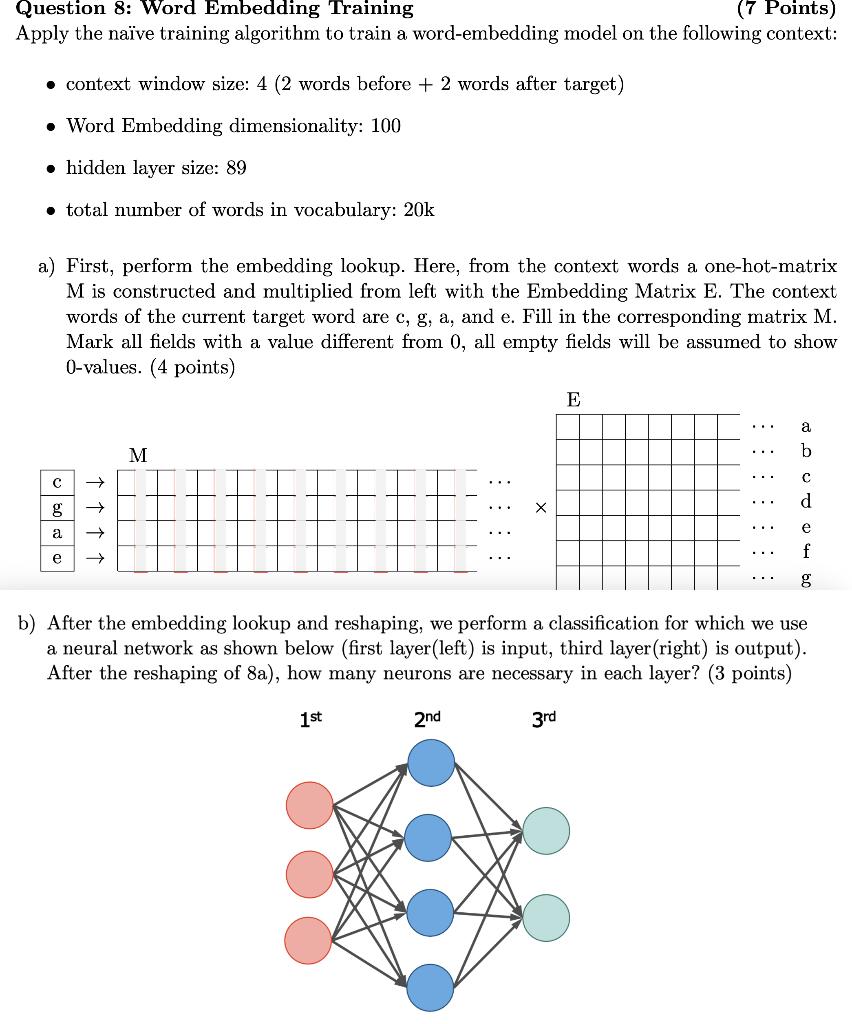

Question 8: Word Embedding Training (7 Points)

Apply the naive training algorithm to train a word-embedding model on the following context:

- context window size: 4 (2 words before + 2 words after the target)
- word embedding dimensionality: 100
- hidden layer size: 89
- total number of words in vocabulary: 20k

a) First, perform the embedding lookup. Here, a one-hot matrix M is constructed from the context words and multiplied from the left with the embedding matrix E. The context words of the current target word are c, g, a, and e. Fill in the corresponding matrix M. Mark all fields with a value different from 0; all empty fields will be assumed to contain 0. (4 points)

b) After the embedding lookup and reshaping, we perform a classification using a neural network as shown in the figure (the first layer, on the left, is the input; the third layer, on the right, is the output). After the reshaping in 8a), how many neurons are necessary in each layer? (3 points)
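The lookup and the resulting layer sizes can be sketched in NumPy. This is a minimal illustration, not a reference solution, and it rests on several assumptions that are not in the question: the mapping `word_to_index` is hypothetical (the real positions of c, g, a, and e come from the figure), M is laid out with one row per context word so that the lookup is `M @ E`, E is filled with random values, and the output layer is assumed to classify over the full vocabulary.

```python
import numpy as np

# Values taken from the question.
VOCAB_SIZE = 20_000
EMBED_DIM = 100
HIDDEN_SIZE = 89
WINDOW = 4  # 2 words before + 2 words after the target

# Assumption: hypothetical word-to-index mapping (alphabetical order).
# The actual indices depend on the figure in the question.
word_to_index = {w: i for i, w in enumerate("abcdefgh")}
context = ["c", "g", "a", "e"]

# One-hot matrix M: one row per context word, one column per vocabulary word.
# Assumption: this orientation, so that the lookup is M @ E.
M = np.zeros((WINDOW, VOCAB_SIZE))
for row, word in enumerate(context):
    M[row, word_to_index[word]] = 1.0

# Random stand-in for the embedding matrix E (vocabulary size x dimensionality).
rng = np.random.default_rng(0)
E = rng.normal(size=(VOCAB_SIZE, EMBED_DIM))

# Embedding lookup: each row of M selects one row of E.
embedded = M @ E              # shape (4, 100)

# Reshape the four embeddings into a single input vector.
flat = embedded.reshape(-1)   # shape (400,)

# Input, hidden, and output layer sizes.
# Assumption: the output layer has one neuron per vocabulary word.
layer_sizes = (flat.size, HIDDEN_SIZE, VOCAB_SIZE)
print(layer_sizes)  # (400, 89, 20000)
```

Under these assumptions, M contains exactly four non-zero entries (one per context word), and the flattened input has 4 × 100 = 400 values, which gives layer sizes of 400, 89, and 20,000.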
