Question: Quick but detailed solution with the tree please. Question 2B - Decision Tree Learning [25%]

Quick but detailed solution with the tree please

Question 2B: Decision Tree Learning

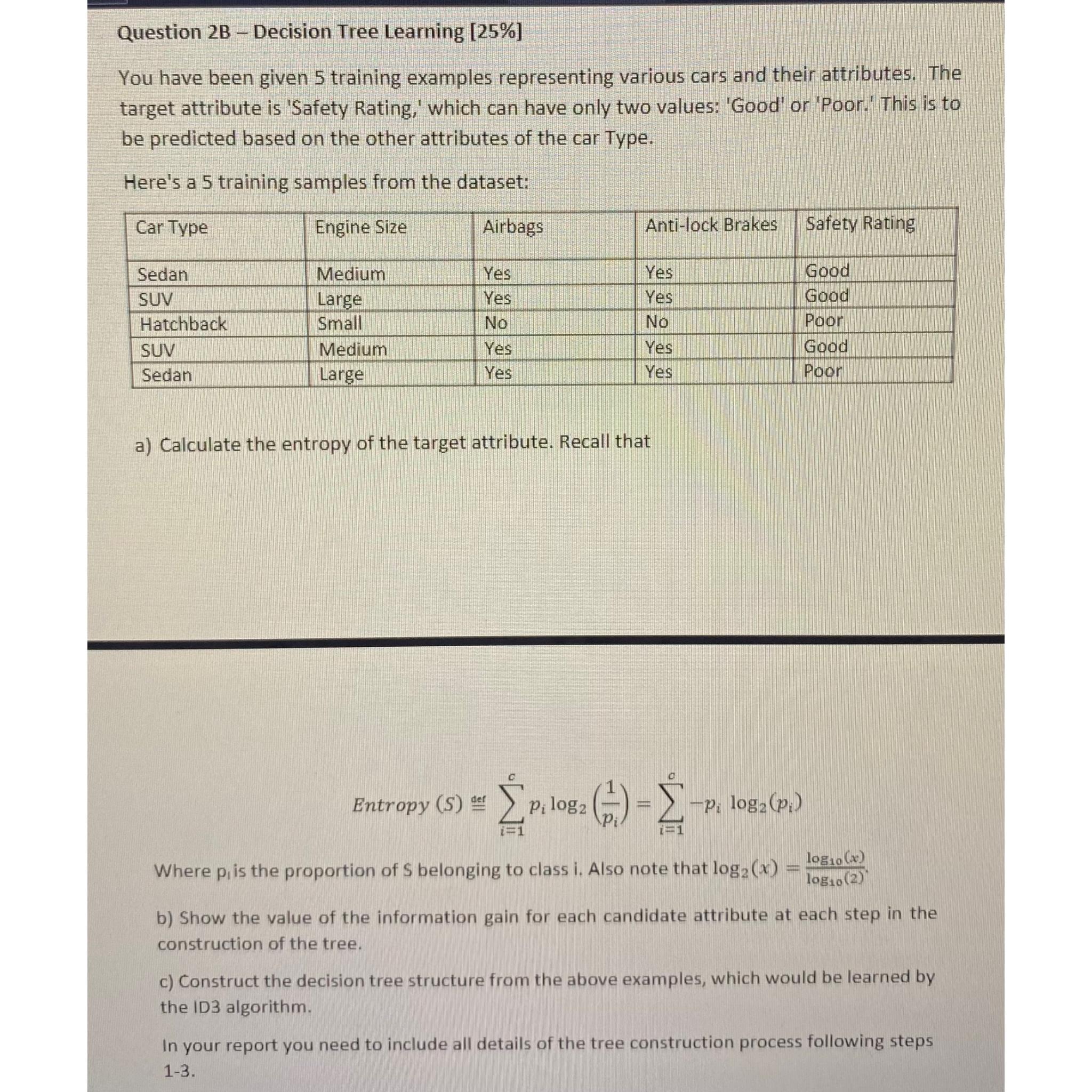

You have been given 5 training examples representing various cars and their attributes. The target attribute is 'Safety Rating', which can have only two values: 'Good' or 'Poor'. It is to be predicted from the car's other attributes: Car Type, Engine Size, Airbags, and Antilock Brakes.

Here are the training samples from the dataset:

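| Car Type | Engine Size | Airbags | Antilock Brakes | Safety Rating |
|---|---|---|---|---|
| Sedan | Medium | Yes | Yes | Good |
| SUV | Large | Yes | Yes | Good |
| Hatchback | Small | No | No | Poor |
| SUV | Medium | Yes | Yes | Good |
| Sedan | Large | Yes | Yes | Poor |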

a) Calculate the entropy of the target attribute. Recall that

$$\text{Entropy}(S) = -\sum_{i} p_i \log_2 p_i$$

where $p_i$ is the proportion of $S$ belonging to class $i$.

Also note that
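As a quick check for part (a), the entropy of the target column can be computed with a minimal Python sketch. The list `safety` is simply the Safety Rating column read off the five training rows, and the helper name `entropy` is my own choice rather than anything given in the question. The function sums only over classes that actually occur, which matches the usual convention that $0 \log_2 0 = 0$.

```python
from collections import Counter
from math import log2


def entropy(labels):
    """Shannon entropy (base 2) of a list of class labels."""
    total = len(labels)
    # Counter only holds classes that occur, so log2(0) is never evaluated.
    return -sum((n / total) * log2(n / total) for n in Counter(labels).values())


# Safety Rating column of the five training examples (3 Good, 2 Poor).
safety = ["Good", "Good", "Poor", "Good", "Poor"]

# -(3/5) log2(3/5) - (2/5) log2(2/5), roughly 0.971 bits
print(f"Entropy(S) = {entropy(safety):.4f}")
```

With 3 'Good' and 2 'Poor' examples the result is about 0.971, slightly below the maximum of 1 bit that a perfectly balanced two-class set would give.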

b) Show the value of the information gain for each candidate attribute at each step in the construction of the tree.
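For part (b), the information gain of an attribute A is Entropy(S) minus the weighted average entropy of the subsets of S produced by splitting on A. The sketch below computes this at the root for all four attributes. The names `ROWS`, `ATTRIBUTES`, `TARGET`, and `information_gain` are illustrative choices of mine, and the data is the five rows of the training table.

```python
from collections import Counter, defaultdict
from math import log2

ATTRIBUTES = ["Car Type", "Engine Size", "Airbags", "Antilock Brakes"]
TARGET = "Safety Rating"

# The five training examples from the table.
ROWS = [dict(zip(ATTRIBUTES + [TARGET], values)) for values in [
    ("Sedan", "Medium", "Yes", "Yes", "Good"),
    ("SUV", "Large", "Yes", "Yes", "Good"),
    ("Hatchback", "Small", "No", "No", "Poor"),
    ("SUV", "Medium", "Yes", "Yes", "Good"),
    ("Sedan", "Large", "Yes", "Yes", "Poor"),
]]


def entropy(labels):
    """Shannon entropy (base 2) of a list of class labels."""
    total = len(labels)
    return -sum((n / total) * log2(n / total) for n in Counter(labels).values())


def information_gain(rows, attribute):
    """Entropy of the target minus the weighted entropy after splitting on attribute."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[attribute]].append(row[TARGET])
    remainder = sum(len(g) / len(rows) * entropy(g) for g in groups.values())
    return entropy([row[TARGET] for row in rows]) - remainder


gains = {a: information_gain(ROWS, a) for a in ATTRIBUTES}
for attribute, gain in gains.items():
    print(f"Gain(S, {attribute}) = {gain:.4f}")
```

At the root this gives about 0.571 for both Car Type and Engine Size, and about 0.322 for both Airbags and Antilock Brakes, so the first split is a tie between Car Type and Engine Size.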

c) Construct the decision tree structure from the above examples, which would be learned by the ID3 algorithm.
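For part (c), a compact recursive sketch of ID3 on the five examples might look like the following. It is a minimal illustration, not a full implementation: there is no pruning, and ties in information gain are broken by attribute order, which is an assumption on my part (the question does not say how to break ties). All names (`ROWS`, `id3`, and so on) are hypothetical.

```python
from collections import Counter, defaultdict
from math import log2

ATTRIBUTES = ["Car Type", "Engine Size", "Airbags", "Antilock Brakes"]
TARGET = "Safety Rating"
ROWS = [dict(zip(ATTRIBUTES + [TARGET], values)) for values in [
    ("Sedan", "Medium", "Yes", "Yes", "Good"),
    ("SUV", "Large", "Yes", "Yes", "Good"),
    ("Hatchback", "Small", "No", "No", "Poor"),
    ("SUV", "Medium", "Yes", "Yes", "Good"),
    ("Sedan", "Large", "Yes", "Yes", "Poor"),
]]


def entropy(labels):
    total = len(labels)
    return -sum((n / total) * log2(n / total) for n in Counter(labels).values())


def information_gain(rows, attribute):
    groups = defaultdict(list)
    for row in rows:
        groups[row[attribute]].append(row[TARGET])
    remainder = sum(len(g) / len(rows) * entropy(g) for g in groups.values())
    return entropy([row[TARGET] for row in rows]) - remainder


def id3(rows, attributes):
    """Return a class label (leaf) or a nested dict {attribute: {value: subtree}}."""
    labels = [row[TARGET] for row in rows]
    if len(set(labels)) == 1:          # pure node: stop with that label
        return labels[0]
    if not attributes:                 # nothing left to split on: majority vote
        return Counter(labels).most_common(1)[0][0]
    # max() keeps the first attribute on a tie (assumed tie-breaking rule).
    best = max(attributes, key=lambda a: information_gain(rows, a))
    remaining = [a for a in attributes if a != best]
    branches = {}
    for value in dict.fromkeys(row[best] for row in rows):  # values in first-seen order
        subset = [row for row in rows if row[best] == value]
        branches[value] = id3(subset, remaining)
    return {best: branches}


tree = id3(ROWS, ATTRIBUTES)
print(tree)
```

Under that tie-breaking rule the root is Car Type: SUV leads to Good, Hatchback leads to Poor, and Sedan is split again on Engine Size (Medium gives Good, Large gives Poor), where Engine Size has gain 1 and the other two attributes have gain 0. Breaking the root tie in favour of Engine Size instead gives an equally valid tree: Medium leads to Good, Small leads to Poor, and Large is split on Car Type (SUV gives Good, Sedan gives Poor).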

In your report, include all details of the tree construction process, following the steps above.
